Problem:

Auto-Indexing of LabVIEW is extended to LabVIEW FPGA, only with one small caveat. You can easily auto-index into a loop, but not out of it. You will understand this better if you've already worked with LV FPGA.

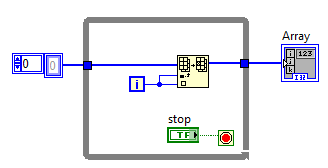

In the FPGA paradigm, we enforce compile time resource determinism, by making sure all our arrays are of a fixed pre-determined size. In auto-indexing out of a loop, we may not know what the size of the array is, and hence it breaks the VI with the error "Arrays must be of fixed size". Try to write the following code in LV FPGA, for a better picture:

Solution:

The current workaround is that we have a fixed size Array which we then use in and out of the loop, replacing its

elements, as shown below.

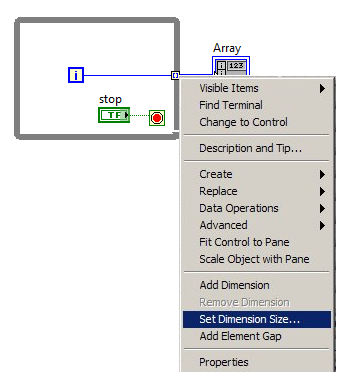

However, an easier and much much more intuitive solution for users would be to just right click the auto-indexed tunnel and set the dimension size.

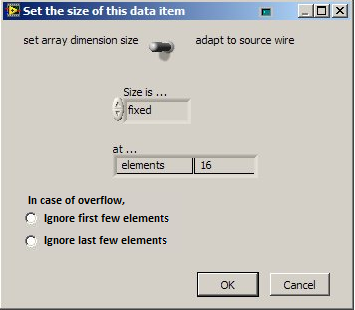

This definitely means that the number of data flowing out of the loop could be more than our fixed size. We handle that case by providing the user with the "In case of overflow" option.

This would ease our effort in coding LV FPGA as much as it would would improve intuitive coding. Vote for this idea if you think it would make your life a tad bit easier.