FlexLogger Plug-In - Process.vi Timing - limited to 10 Hz?

11-11-2022 02:54 PM - edited 11-11-2022 03:29 PM

I'm trying to stream data in and out of FlexLogger using a Network Streams based Plug-In to perform some complex and repeated triggering.

I noticed the latency was pretty poor and traced it to Process.vi only running at 5 Hz. Sure enough, the default Resampling block size in the Initialize method was set to 0.2 s. So I tried various permutations of Resampling OR Set Timing configurations (never both at the same time). I'd like to get Process.vi to fire at 1000 Hz, though even 100 Hz would be OK, but with the timing parameters set to Periodic timing with a 1 ms period (and with every other configuration I tried) it never ran faster than 10 Hz. (I also manually set the timing of the return channel to 1 kHz.)

Is there a hard limit to how quickly Process.vi will run? Or do I just have something configured incorrectly in the attached project?

To run the attached "Triggers" Plug-In:

- create a single FlexLogger input channel set for "fast" acquisition (1000 Hz)

- load the plug-in in FlexLogger and select the input channel

- run the TargetsExternal.vi. This is the external VI that receives the network streams.

- watch the received waveform size in TargetsExternal.vi. It comes in chunks of 100 data points, meaning Process.vi is running at 10 Hz since the acquisition rate is 1000 Hz.
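
The inference in the last step is simple arithmetic, sketched here in Python for reference. The function name is hypothetical and not part of any FlexLogger API; it only assumes that each Process.vi call with data forwards exactly one chunk.

```python
def inferred_process_rate_hz(sample_rate_hz: float, samples_per_chunk: int) -> float:
    """Rate at which chunks must arrive if each one holds samples_per_chunk samples."""
    if samples_per_chunk <= 0:
        raise ValueError("samples_per_chunk must be positive")
    return sample_rate_hz / samples_per_chunk


# Values from the steps above: 1000 Hz acquisition, chunks of 100 points.
rate = inferred_process_rate_hz(sample_rate_hz=1000.0, samples_per_chunk=100)
print(f"Process.vi is delivering data at about {rate:g} Hz")
```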

11-14-2022 09:26 AM

If you configure the Plugin so Process is called very quickly, and there's no new waveform data available, it will go through the case where nothing happens and so no Network Stream data will be output. I modified your Plugin to use the Immediate mode and not configure the resample rate to see how fast the Process.vi can be called:

With this change, the Process VI was called almost every 0.1 ms, but it didn't have any waveforms for most of those calls, so nothing happened in the Process VI. Since your Plugin requires waveform data from other producers, your Plugin is limited by how quickly the producers send data to it, which is typically about 10 times per second. You can't configure any settings to change how often producers send data to your plugin. The DAQ Plugins can vary how quickly they output data depending on whether they get behind, so the amount and frequency of data from DAQ Plugins can vary with how stressed the system is, but typically it's about 10 times a second. So if your plugin uses data from a producer plugin, you won't get data, and won't be able to send that data over the network stream, any faster than the producer sends it to your plugin.

I hope this helps explain the behavior you are seeing.

Thanks,

Brad

11-28-2022 06:15 PM

@Brad wrote:

... Since your Plugin requires waveform data from other producers, your Plugin is limited by how quickly the producers send data to your plugin, which is typically about 10 times per second...

Just to 100% verify, in this context the Producer is internal to FlexLogger, correct? Thus there is nothing that the Plug-In developer can do to affect how quickly the internal FlexLogger Producer produces data for the Plug-In to consume.

If this is true, then I will mark this as the solution that FlexLogger plugins are indeed limited to no faster than 10 Hz.

If this is true, then a feature request would be some way to go faster to decrease Plug-In latency. 1 kHz would be a nice order-of-magnitude target, as this is a sustainable rate in DAQmx producer/consumer loops even on average PCs with an attached cDAQ.

11-29-2022 01:18 PM

Just to be clear, the DAQ plugin typically tries to output data 10 times a second regardless of the sample rate, so the length of the waveform varies with the sample rate. If the DAQ plugin gets behind, it can send more data to catch up and avoid buffer overflows. You can force your plugin to call Process.vi more frequently, but you may have no data from the DAQ plugin available, since it batches up samples to avoid sending small chunks to downstream plugins too often, which can be inefficient. Because the DAQ plugin can read more data less often for improved performance, there can be longer periods of time between data being available for your plugin to use. That doesn't prevent your plugin from executing faster if you want; you just won't have any new data to analyze.
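
To make the point concrete, here is a minimal Python sketch of the situation, not FlexLogger code. It assumes a simplified model in which the producer hands over one chunk at a fixed interval (no catch-up behavior) and the consumer is called at a fixed, faster interval. All names are hypothetical.

```python
def simulate_polling(duration_ms: int, poll_period_ms: int, delivery_period_ms: int):
    """Count consumer calls that find new data vs. calls that find none.

    Simplified model (an assumption): the producer delivers one chunk every
    delivery_period_ms and the consumer is called every poll_period_ms.
    """
    calls_with_data = 0
    calls_without_data = 0
    pending_chunks = 0
    for t in range(1, duration_ms + 1):
        if t % delivery_period_ms == 0:
            pending_chunks += 1          # producer hands over a batched chunk
        if t % poll_period_ms == 0:
            if pending_chunks:
                pending_chunks = 0       # consumer takes everything available
                calls_with_data += 1
            else:
                calls_without_data += 1  # the "nothing happens" case
    return calls_with_data, calls_without_data


# One second of polling every 1 ms while data arrives every 100 ms.
with_data, without_data = simulate_polling(1000, poll_period_ms=1, delivery_period_ms=100)
print(f"calls with data: {with_data}, calls without data: {without_data}")
```

In this model the faster call rate only adds empty calls; the number of calls that actually see data is set by the producer.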

Hope this helps,

Brad

12-06-2022 06:48 AM

Thanks for the follow-up. I agree it's clear that the ~10 Hz rate of data availability is a limitation of some producer process internal to FlexLogger.

Can someone from the FlexLogger development team chime in on the possibility of increasing that rate in the future? Or at least exposing it so that the End User or Plug-In developer has the ability to adjust it?

12-06-2022 07:48 AM

Another option would be to add the DAQ data to an array whenever data is available, but have your Process.vi analyze only a few points (or a single point, depending on your algorithm) per call and remove the processed data from the array when you're done. This would allow you to run your Process VI closer to the sample rate of the DAQ plugin. Even though you may only get new data about 10 times a second, each delivery could contain 1,000 samples, and your Process.vi could then work through a few samples at a much faster rate (e.g. analyze 10 samples every millisecond).

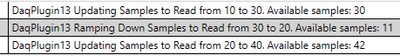
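
For readers without LabVIEW at hand, the buffering idea can be sketched in Python. This is only an illustration of the pattern, under the assumption that each Process call may or may not receive a new chunk; `SampleBuffer` and `process_call` are hypothetical names, not plug-in API.

```python
from collections import deque


class SampleBuffer:
    """Hypothetical stand-in for the array that accumulates DAQ samples."""

    def __init__(self) -> None:
        self._samples = deque()

    def __len__(self) -> int:
        return len(self._samples)

    def add_chunk(self, chunk) -> None:
        """Append a newly arrived waveform chunk (only when data is available)."""
        self._samples.extend(chunk)

    def take(self, count: int) -> list:
        """Remove and return up to `count` of the oldest samples."""
        n = min(count, len(self._samples))
        return [self._samples.popleft() for _ in range(n)]


def process_call(buffer: SampleBuffer, new_chunk=None, samples_per_call: int = 10) -> list:
    """One Process iteration: buffer any new data, then analyze a small slice."""
    if new_chunk is not None:
        buffer.add_chunk(new_chunk)
    return buffer.take(samples_per_call)


buf = SampleBuffer()
first = process_call(buf, new_chunk=range(1000))  # a chunk arrives on this call
second = process_call(buf)                        # no new data, but work remains
print(first[0], first[-1], second[0], second[-1], len(buf))
```

Note that this spreads the processing over many fast calls; it does not shorten the delay between acquisition and arrival of a chunk.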

If the main problem is the lag between when the data is acquired and when it arrives at your plugin, you can also place the attached file at C:\Users\<username>\Documents\LabVIEW Data\PEF\PEF.ini. It sets the max number of desired samples to read each iteration for DAQ to 10 (feel free to change as needed). Note that this is a desired amount: if DAQ is getting behind, it may increase the number of samples read to avoid a buffer overflow. Even so, it will likely result in your plugin getting smaller batches of samples more quickly. You can monitor how many samples DAQ is actually outputting by using ETW to view the debug output. Here is a sample of that output when I was using a "DAQMaxDesiredSamplesToRead" of 10:

The sample rate of my DAQ was 1613 Hz, so normally it would default to 161 samples per period, which makes this at least 4X faster. It may be better (or worse) for your setup, but it might be worth a try if this is what you want.

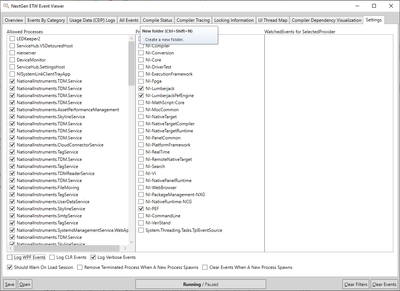

I also attached an ETW viewer in case you want to check out your DAQ behavior. To use it:

- Unzip the ETW Viewer.zip

- Run the ETW Viewer.exe inside the unzipped folder

- Go to the "Settings" tab and check the following options in the Providers column (don't worry about the first column)

- Close and re-launch the viewer after making changes to settings, then go to the "All Events" tab.

- Run FlexLogger and configure your DAQ channels, then use the string "samples to read" as the filter to remove a lot of extra debugging info so you just have the debug info of interest:

Hope that helps

12-15-2022 03:24 PM

Lag is the main concern, yes.

Using the PEF.ini file did allow some control and decreased the lag. Too low a value caused a crash, but a "DAQMaxDesiredSamplesToRead" value of 10 at a 1000 Hz acquisition rate was stable, on my system at least. That improved the lag to 10 ms rather than 100 ms.

It would be nice to be able to change the value of "DAQMaxDesiredSamplesToRead" programmatically in the plug-in code so that it can be set on a per plug-in basis rather than hardcoded in an .ini for all FlexLogger projects. This is a parameter I would have expected to be exposed in the Advanced Palette when setting up the plug-in timing.
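
The 10 ms and 100 ms figures follow from the time it takes to acquire one chunk. A small Python sketch of that arithmetic is below; the function name is hypothetical, and the result ignores transport and processing overhead, so it should be read as a lower bound on the batching delay rather than a measured latency.

```python
def batching_delay_ms(samples_to_read: int, sample_rate_hz: float) -> float:
    """Time needed to acquire one chunk, i.e. how long its oldest sample waits."""
    if sample_rate_hz <= 0:
        raise ValueError("sample_rate_hz must be positive")
    return 1000.0 * samples_to_read / sample_rate_hz


# Default-sized chunk (100 samples) vs. a chunk of 10 samples, both at 1000 Hz.
for samples in (100, 10):
    delay = batching_delay_ms(samples, 1000.0)
    print(f"{samples:>3} samples at 1000 Hz -> {delay:g} ms")
```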

Thanks for that info.

12-15-2022 03:44 PM

The DAQ plugin's default behavior is to try to update the table/UI 10 times a second regardless of the sample rate, and it will automatically adjust the samples to read if it is getting behind. We don't expose the "DAQMaxDesiredSamplesToRead" parameter because we want a balance between a responsive UI and not getting behind, which would result in overflow errors, so the samples to read adjust dynamically based on the acquisition state. I'm glad the INI change gave you more responsive behavior, but I don't think this is something we will expose as a public parameter: it can have negative consequences, and it would be a pain to tune this value for every DAQ module. That is why we dynamically change the samples to read behind the scenes to optimize performance and avoid buffer overflows, since this is the main use case most of our users care about.

Hope this helps explain the philosophy behind this behavior.

Brad