If you saw me doing Fab's presentation "How to Polish Your Software and Development Process to Wow Your End Users", I'd like to apologise for making you uncomfortable. Ah, who am I kidding! I found it very funny indeed. Just to explain: I stopped for 30 seconds just before talking about splash screens, causing lots of uncomfortable seat shuffling and worried-looking attendees. I had a very good day; thanks to all who worked so hard.

In the last blog I laid out that you will likely be hit with changes at the most inconvenient time in a project, i.e. at the end. This got me thinking about one of the key benefits of visual programming: Rapid Modification. Some call this debugging, and Glass rather directly (and accurately) calls the process error removal. The trouble with both of these terms is that they have the taint of failure about them. So if you are debugging, you must be an idiot for putting bugs in in the first place!! Error removal! WHAT? I DON'T PAY $xxx/hour FOR YOU TO PUT ERRORS IN! I think you know where I'm coming from.

With a software project the standard view is that maintenance is a significant part of the cost (40%-80% according to various studies, with 60% as an average), and the majority of this cost is not error removal but adding new capabilities. Adding new capabilities and rapid modification are positive things; successful software is modified.

So a more grown-up view is that modification at the end of the project is normal, and if, like us, you classify maintenance as the phase that begins the moment you deliver the software to the customer, that 60% doesn't sound so extreme. In short, this is a very, very important part of the software process, and making it easy for ourselves will pay big dividends.

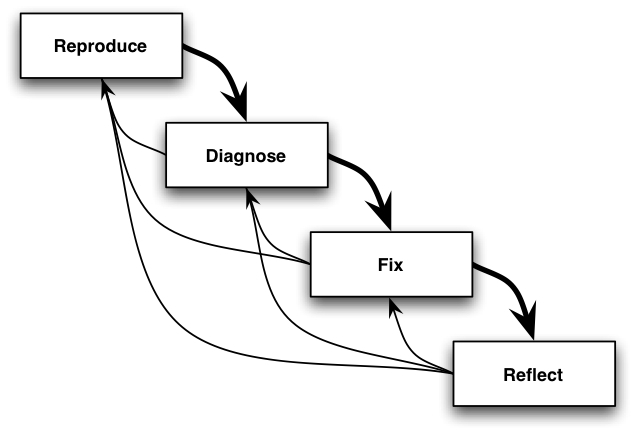

What is the debugging process?

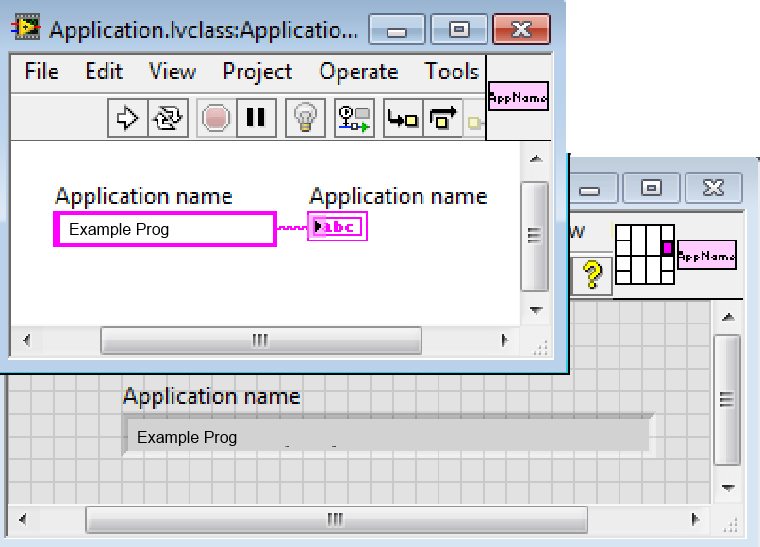

As I mentioned earlier, LabVIEW has some help to give us, and this is one of its fundamental advantages.

The block diagram is an enormously powerful visualisation tool, cherish it please.

Probes, breakpoints and execution trace are all helpful.

Searching (please improve it, NI): even with its limitations it's sooo useful.

We can also help ourselves; here are some techniques to use.

Harlan Mills has it right: software should primarily be designed to be read. The decision to sacrifice readability is an expensive one (not necessarily for the original developer, but definitely for anyone else involved in the process).

Labeling Loops

Labeling Cases

Bookmarks

Type Defs

Enumerated Types

Putting the control in its event case rather than just leaving it floating; that way the event is only a click away.
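LabVIEW is graphical, so the nearest I can offer in text is an analogy. The sketch below uses Python to show why an enumerated type helps readability: each case is selected by a name a maintainer can read and search for, rather than by a bare number. The state names and the `next_state` and `run` functions are made up for illustration and are not taken from any real project.

```python
from enum import Enum


class State(Enum):
    """Hypothetical states; the names double as self-documenting case labels."""
    INITIALISE = "initialise"
    ACQUIRE = "acquire"
    SHUTDOWN = "shutdown"


def next_state(state: State) -> State:
    """Each branch is labelled by the enum member, not by a magic number."""
    if state is State.INITIALISE:
        return State.ACQUIRE
    if state is State.ACQUIRE:
        return State.SHUTDOWN
    return State.SHUTDOWN


def run() -> list[str]:
    """Step through the states and record the readable name of each one."""
    state = State.INITIALISE
    trace = [state.name]
    while state is not State.SHUTDOWN:
        state = next_state(state)
        trace.append(state.name)
    return trace
```

Searching the code for `State.ACQUIRE` finds every place that state is handled, which is the text-language cousin of a labelled case.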

Comprehension is the most important factor in doing maintenance

Ned Chapin 1983

Part of the design process is to think about how our design affects the various aspects of the project lifecycle.

The SMoRE acronym is used quite a lot; the trick is to concentrate your design efforts where you get maximum benefit. I have removed Extensibility from my projects because designing for it was pushing the hierarchy too deep, and this was affecting Simplicity and Reusability.

Cohesion

Encapsulation

Keeping hierarchy as shallow as possible: you don't want to be trekking through layers and layers of abstraction to find the logical decision that tackles the problem. At my age I often walk into a room to do something and then completely forget what it is. Now if Watts Towers had 25 rooms that I had to walk through first, it is very likely I would be in this situation more often! For all my facetiousness, this is a very important point. I want to get rapidly to the point of logical decision, and this process has to flow; anything that inhibits this fluidity makes visualisation harder and me grumpier.

Another important facet of visualisation is getting the solution to represent the problem; this relatedness is very important to simplifying your code. This is why I'm wary of all this talk of patterns: I fear the day when I come to sort out a job and I'm presented with Factory Patterns, Singletons, Facades, etc., when all I'm looking for is the bit telling me what the software is doing and when. All these things have their place, but if they hinder debugging they are costly indeed.

Using searchable structures like Controls and subVIs. The Constant VI is a very good example of this as a technique.
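As a rough text-language analogy, and assuming "Constant VI" here means a subVI whose only job is to return a fixed value, the same idea in Python is to give a constant one named home instead of scattering the literal through the code. The names `default_timeout_ms` and `open_instrument` and the value 5000 are hypothetical.

```python
def default_timeout_ms() -> int:
    """Single, searchable home for a value used in many places (value is illustrative)."""
    return 5000


def open_instrument(address: str) -> dict:
    """Hypothetical caller: it asks for the constant by name instead of repeating 5000."""
    return {"address": address, "timeout_ms": default_timeout_ms()}
```

Searching for `default_timeout_ms` lists every user of the value, whereas searching for a bare `5000` would also turn up unrelated numbers.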

Exercise some restraint when using dynamic loading and dynamic dispatch, especially where the dispatched VI changes the functional decisions of the software. In my experience dynamic dispatch can simplify a block diagram if used correctly, but if the method changes the function of the software it can act as a block to visualisation.
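One way to picture that distinction, as a Python analogy rather than LabVIEW code: below, dispatch only varies how a reading is obtained, while the functional decision (is it too hot?) stays in one visible place in the caller. The class and function names are invented for the sketch.

```python
from abc import ABC, abstractmethod


class Sensor(ABC):
    """Hypothetical interface: every implementation does the same job, reading a temperature."""

    @abstractmethod
    def read_celsius(self) -> float:
        ...


class SimulatedSensor(Sensor):
    def read_celsius(self) -> float:
        return 21.5  # fixed value standing in for real hardware


class ScaledSensor(Sensor):
    def __init__(self, raw_volts: float) -> None:
        self.raw_volts = raw_volts

    def read_celsius(self) -> float:
        return self.raw_volts * 100.0  # illustrative scaling of 100 degC per volt


def over_temperature(sensor: Sensor, limit_celsius: float) -> bool:
    """The decision lives here, in plain sight, whichever sensor is dispatched to."""
    return sensor.read_celsius() > limit_celsius
```

If each subclass instead decided for itself what to do about an over-temperature reading, a maintainer would have to open every implementation to find out what the software does, which is the block to visualisation described above.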

I might do some funky graphics stuff in my next article.

Hugs, Kisses and Psychedelic Shirts to you all

Steve

Opportunity to learn from experienced developers / entrepreneurs (Fab, Joerg and Brian among them):

DSH Pragmatic Software Development Workshop