AR Drone Kinect Control

Introduction.

This project follows the development of a natural user interface for controlling a Parrot AR Drone 2.0 with body gestures captured by a Microsoft Kinect (v1). In this document I share the techniques used for the gesture recognition task and their implementation. The project was made some time ago, but I finally took the time to publish it here. I started it as a hobby, and new team members joined later; thanks to all of them for their help with testing and documentation (which I attach at the end).

Requirements:

*NI LabVIEW 2012 or later

*Parrot AR Drone 2.0

*Microsoft / Xbox Kinect V1, and Microsoft Kinect SDK (https://www.microsoft.com/en-us/download/details.aspx?id=40278)

*Toolkits (Credits to their respective developers):

- Kinesthesia Toolkit for Microsoft Kinect v1.0.0.5 by the University of Leeds.

- AR Drone Toolkit v0.1.0.34 by LVH.

The challenges of gesture recognition.

The main challenge in building a body-gesture interface is choosing which movements or patterns to use. They have to be easy, natural actions for the body, and they must be detectable across all kinds of body sizes. A problem we found often was that people noticeably smaller or taller than average (e.g. kids) had a hard time controlling the drone well.

The gestures selected for control are the following:

*Takeoff: Both hands in front of the chest, arms fully extended.

*Emergency stop: Both hands at the head.

*Landing: Bring the drone to a low position and hold still.

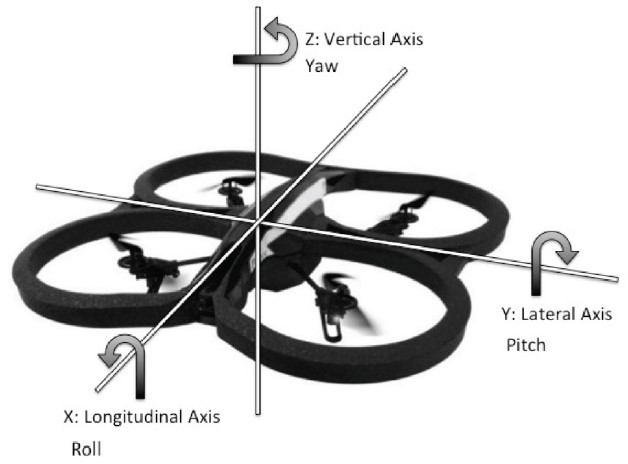

*Flight control was designed to resemble the two-joystick control of the Parrot app: the right hand controls pitch and roll, and the left hand controls yaw and altitude.

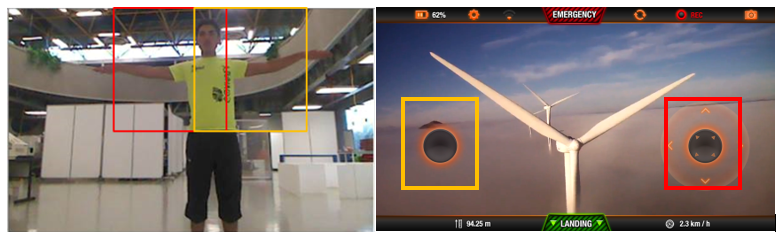

Fig. 1. Working area for gesture detection. (Note that the colors are inverted to match the user's left and right hands with the left and right joysticks in the app.)

Fig. 2. Reference for roll, pitch and yaw movements.
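To make the two static gestures above more concrete, here is a minimal sketch of how takeoff and emergency stop could be recognized from a single Kinect skeleton frame. It is written in Python rather than LabVIEW, and the joint names (`head`, `hip_center`, `hand_left`, ...), the coordinate convention (y up, z = distance from the sensor) and every threshold are assumptions for illustration, not values from the project. Landing is left out because it depends on the drone's altitude rather than on a body pose.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) joint position in meters

# Hypothetical thresholds, as fractions of the head-to-hip distance.
MIN_REACH = 0.8      # hand must be at least this far in front of its shoulder
MAX_CHEST_DY = 0.35  # hand must stay within this height band around the shoulder
MAX_HEAD_DIST = 0.3  # hand-to-head distance for the "hands at head" pose

HANDS = (("hand_left", "shoulder_left"), ("hand_right", "shoulder_right"))


def classify_static_gesture(j: Dict[str, Vec3]) -> Optional[str]:
    """Return "emergency", "takeoff" or None for one skeleton frame.

    Assumes y points up and z is the distance from the sensor, so a hand
    pushed toward the Kinect has a smaller z than its shoulder.
    """
    torso = math.dist(j["head"], j["hip_center"])
    if torso == 0:
        return None  # joints not tracked

    # Emergency stop is checked first so it always takes priority.
    if all(math.dist(j[hand], j["head"]) / torso < MAX_HEAD_DIST
           for hand, _ in HANDS):
        return "emergency"

    def arm_extended(hand: str, shoulder: str) -> bool:
        reach = (j[shoulder][2] - j[hand][2]) / torso  # forward reach
        dy = abs(j[hand][1] - j[shoulder][1]) / torso  # height vs. shoulder
        return reach > MIN_REACH and dy < MAX_CHEST_DY

    if all(arm_extended(hand, shoulder) for hand, shoulder in HANDS):
        return "takeoff"
    return None
```

Dividing by the head-to-hip distance keeps the thresholds independent of the user's size, the same idea the normalization section below is built on.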

Reading normalization.

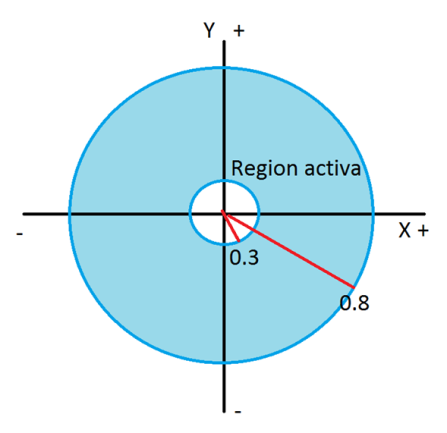

To avoid problems when short or tall people used the control system, the coordinates were normalized by the distance from the user's head to the hip: every further measurement is divided by this distance, so it becomes a proportion of the person's size instead of an absolute coordinate. To detect the desired gesture, the shoulder position is simply subtracted from the hand position.
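The normalization step can be sketched in a few lines of Python. This is an illustration of the idea described above, not the LabVIEW code of the project; the function names and the `(x, y, z)` tuple representation of joints are assumptions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) joint position in meters


def normalized_offset(hand: Vec3, shoulder: Vec3,
                      head: Vec3, hip_center: Vec3) -> Tuple[float, ...]:
    """Hand-minus-shoulder offset expressed in units of head-to-hip distance."""
    torso = math.dist(head, hip_center)
    if torso == 0:
        raise ValueError("head and hip coincide; skeleton probably not tracked")
    return tuple((h - s) / torso for h, s in zip(hand, shoulder))


def scaled(p: Vec3, k: float) -> Vec3:
    """Scale a point about the sensor origin to mimic a proportionally smaller user.

    The user also ends up closer to the sensor, which does not matter here
    because only differences between joints are used.
    """
    return (p[0] * k, p[1] * k, p[2] * k)
```

For example, `normalized_offset(**adult)` and `normalized_offset(**child)`, where `child` is every joint of `adult` passed through `scaled(p, 0.6)`, return the same offsets up to floating-point rounding, which is exactly the size independence the normalization is meant to provide.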

After measuring the minimum and maximum distances reached by a natural extension of the arms, the following mean values were found.

With these values, an active region for gesture detection was defined, shown as the blue surface in the next figure. Be aware that outside this region no signal is sent to the drone, for safety reasons. This also applies to the Z axis: you need to have your hands extended to the front in order to take control.

Figure 3. Normalized active region.
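A minimal Python sketch of the active-region check, assuming the region is approximated by a box in normalized (hand minus shoulder, divided by head-to-hip distance) coordinates. The real region in Figure 3 comes from the measured mean values; the limits below are placeholders chosen for illustration only, and the box shape itself is a simplification.

```python
from typing import Optional, Tuple

Offset = Tuple[float, float, float]  # normalized hand-minus-shoulder (dx, dy, dz)

# Placeholder limits in torso-length units. The project derived its own
# limits from measured arm-extension means; these numbers are NOT those values.
X_LIMITS = (-0.6, 0.6)  # sideways
Y_LIMITS = (-0.5, 0.5)  # up/down around shoulder height
MIN_FORWARD = 0.5       # required forward reach toward the sensor


def in_active_region(off: Offset) -> bool:
    """True if the hand lies inside the (box-shaped) active region."""
    dx, dy, dz = off
    forward = -dz  # assumes z grows away from the sensor
    return (X_LIMITS[0] <= dx <= X_LIMITS[1]
            and Y_LIMITS[0] <= dy <= Y_LIMITS[1]
            and forward >= MIN_FORWARD)


def gated(off: Offset) -> Optional[Tuple[float, float]]:
    """(dx, dy) for command generation, or None: send nothing for this hand."""
    return (off[0], off[1]) if in_active_region(off) else None
```

Returning `None` instead of a zero command makes "hand outside the region" explicit, so the caller can decide to send nothing at all, matching the safety rule above.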

Sending commands.

The first approach to controlling the drone was to send velocities proportional to how far the hand was extended. This is still described in the attached documentation, but we later found it better to use simple ON-OFF control with a limited maximum velocity. An explanation of how the program works can also be found there. (By the way, the document is still in Spanish, since I'm from México; I'll try to upload an English version later.)
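The difference between the two approaches, together with the joystick-style mapping (right hand for pitch and roll, left hand for yaw and altitude), can be sketched in Python as follows. The dead zone, the maximum command, the unit gain and the sign conventions are hypothetical values for illustration; the actual limits used in the project are in the attached documentation.

```python
from typing import Dict, Tuple

# Hypothetical tuning values (not from the project).
DEAD_ZONE = 0.15  # normalized offset below which an axis counts as centred
MAX_CMD = 0.3     # command magnitude sent when an axis is ON (range -1..1)


def on_off(value: float, dead_zone: float = DEAD_ZONE, max_cmd: float = MAX_CMD) -> float:
    """ON-OFF control: fixed, limited command in the direction of the offset, else 0."""
    if value > dead_zone:
        return max_cmd
    if value < -dead_zone:
        return -max_cmd
    return 0.0


def proportional(value: float, gain: float = 1.0, max_cmd: float = MAX_CMD) -> float:
    """Earlier approach: command grows with the offset, clipped to +/- max_cmd."""
    return max(-max_cmd, min(max_cmd, gain * value))


def hands_to_commands(left: Tuple[float, float],
                      right: Tuple[float, float]) -> Dict[str, float]:
    """Map normalized (dx, dy) hand offsets to the four flight axes with ON-OFF control.

    Signs are an assumption: positive = right / forward / clockwise / up.
    """
    lx, ly = left
    rx, ry = right
    return {
        "roll": on_off(rx),      # right hand sideways -> lateral tilt
        "pitch": on_off(ry),     # right hand up/down -> forward/backward tilt
        "yaw": on_off(lx),       # left hand sideways -> rotation
        "altitude": on_off(ly),  # left hand up/down -> climb/descend
    }
```

With ON-OFF control, small hand tremors inside the dead zone produce no motion at all, and the drone never receives more than `MAX_CMD`, which is one plausible reason this behaved better than the proportional version.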

Implementation.

As mentioned before, you'll need all the requirements listed above. There's also an attached document, "Labview changes", which explains almost step by step how to install and run the program. If you're new to this, I recommend first running the examples of each toolkit separately to make sure they work correctly... and if you're not that new, it's still a good idea to try them first.

A useful hint: when working with the drone, I found it easier to configure the network settings manually to establish the Wi-Fi connection.

Figure 4. TCP/IP settings for AR Drone connection.
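Once the computer is on the drone's Wi-Fi network, the AR Drone Toolkit takes care of the control protocol, and this section does not describe how that toolkit is implemented. For reference only, the Parrot AR.Drone SDK developer guide specifies text "AT" commands sent over UDP to port 5556 of the drone, whose default address is 192.168.1.1. A minimal Python sketch of formatting those commands, assuming the documented defaults (`AT*REF` for takeoff, landing and emergency, `AT*PCMD` for movement, with floats sent as the integer that has the same 32-bit pattern):

```python
import socket
import struct

# Defaults from the Parrot AR.Drone SDK developer guide.
DRONE_ADDR = ("192.168.1.1", 5556)  # drone IP on its own Wi-Fi, AT-command UDP port

REF_LAND = 290717696       # 0x11540000: base value, land / stay landed
REF_TAKEOFF = 290718208    # base value with bit 9 set
REF_EMERGENCY = 290717952  # base value with bit 8 set (toggles emergency mode)


def f2i(x: float) -> int:
    """Reinterpret a float32 as a signed int32, as AT*PCMD expects."""
    return struct.unpack("<i", struct.pack("<f", x))[0]


def at_ref(seq: int, value: int) -> str:
    """Takeoff / land / emergency command."""
    return f"AT*REF={seq},{value}\r"


def at_pcmd(seq: int, roll: float, pitch: float, gaz: float, yaw: float) -> str:
    """Movement command; each argument is a fraction in [-1, 1].

    Per the SDK guide, negative pitch tilts the nose down (flies forward)
    and positive gaz climbs. All zeros is sent as a hover command.
    """
    args = (roll, pitch, gaz, yaw)
    if all(a == 0 for a in args):
        return f"AT*PCMD={seq},0,0,0,0,0\r"  # flag 0: ignore values, hover
    values = ",".join(str(f2i(a)) for a in args)
    return f"AT*PCMD={seq},1,{values}\r"  # flag 1: use the values


def send(sock: socket.socket, cmd: str) -> None:
    """Send one AT command over UDP to the drone."""
    sock.sendto(cmd.encode("ascii"), DRONE_ADDR)
```

A takeoff would then be `send(sock, at_ref(seq, REF_TAKEOFF))` with `sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)`. The sequence number has to increase with every command, since the drone ignores commands with an older sequence number.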

Further work.

Well, this is now actually previous work: on the same drone, an EEG (mind control) system was implemented in LabVIEW using an Emotiv EPOC+ headset. Some usable results were obtained, controlling up to four movements of the drone, but there are still many things left to work out.

If you need any help or find any problems, don't hesitate to post here or mail me at a00811157@itesm.mx.