
Webcast: New Open Source Testbed Platform for Smart Grid and Microgrid Research: Go from IEEE Paper to Working Prototype in Days

04-18-2017 01:08 PM - edited 04-26-2017 08:22 PM


Join me tomorrow at 11 AM Central Time for a live demonstration and webcast about a new open source testbed platform for smart grid and microgrid research (http://us.ni.com/smart-grid-webinar).

New Open Source Testbed Platform for Smart Grid and Microgrid Research: Now Go from Paper to Prototype Deployment in Days

April 19th, 2017

11:00 AM – 12:00 PM

(GMT-06:00) Central Time (US & Canada)

3-Minute Demo: Grid-Forming, Grid-Stabilizing Sync Inverters

The testbed enables productive, risk-free, team-based development and testing of advanced intellectual property in the following priority research areas:

1. Grid-stabilizing bidirectional grid-tied inverters and DC converter control systems

2. Distributed Energy Resources

3. Demand Response

4. Energy Storage Systems

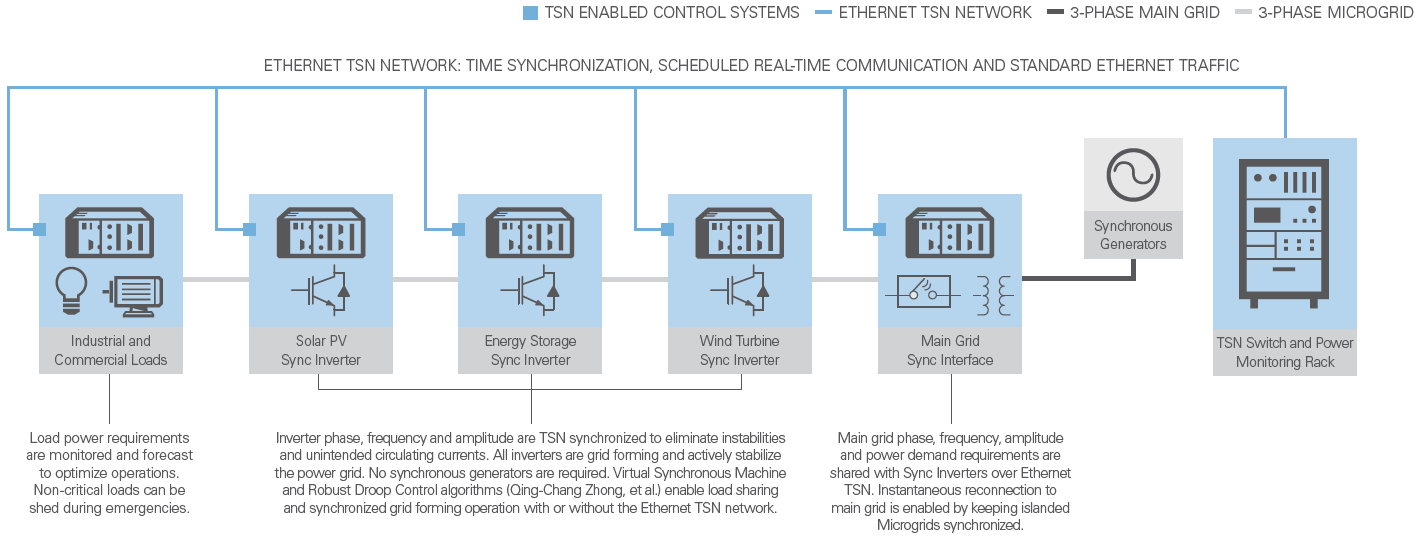

5. Communication infrastructure and emerging network standards (IEEE 802.1 Time Sensitive Networking)

6. Multiple communication protocols (IEC 61850, DNP3, IEEE C37.118, Modbus)

7. Distribution Grid Management

8. Cyber Security, Private Communication, and Cyber Attack Resilience

9. Wide Area Situational Awareness

10. Phasor Measurement Units and Intelligent Electronic Devices

11. Multi-Agent Distributed Control Schemes

12. Wireless communication and Software Defined Networks

13. Electric Transportation

14. GPS and Network-based Time Synchronization

15. Embedded Real-Time Digital Twins

16. Machine Learning (Deep Reinforcement Learning)

17. Real-Time Hardware-In-the-Loop (HIL) Simulation

18. Grid compliance and interoperability testing such as IEEE 1547 anti-islanding tests

19. Voltage, frequency, and control loop stability analysis

20. Islanding, Reconnection and Sectionalizing

21. Resiliency, Fault Recovery, Self-Healing

22. A/B comparison testing of new control schemes

23. Automated real-time test execution

24. Remote connection access

25. Data interoperability

Register Now: http://us.ni.com/smart-grid-webinar

04-19-2017 12:12 PM


Will video recording of the event be available online for viewing?

04-19-2017 05:43 PM


Yes, the recorded version of the webcast should be available within a week.

Meanwhile, please see the attached slide presentation from the webcast, which includes download links as well as enhancements such as a comparison between physical and real-time simulation results using the Opal-RT EHS64 Embedded Digital Twin solver and the grid-connected inverter.

04-20-2017 03:54 PM - edited 04-20-2017 03:59 PM


The webcast recording is now live. Follow the link below to view. Please respond to this post with any comments or questions.

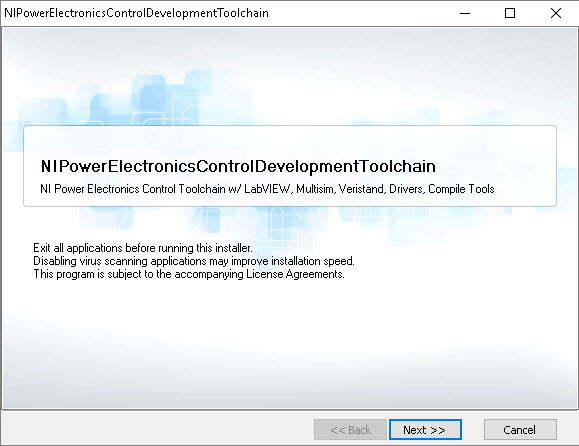

Also, there is a new single click installer for the entire NI power electronics development toolchain which includes 45 days of evaluation mode support:

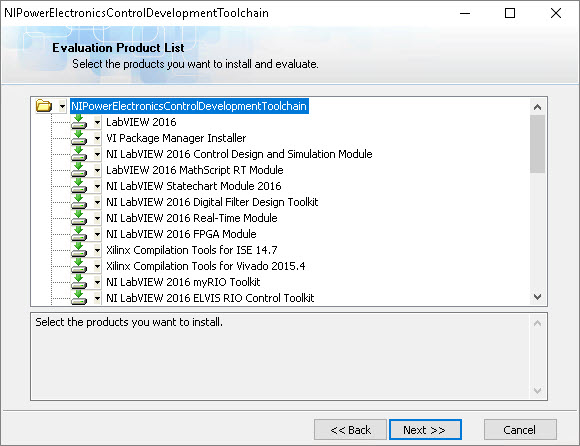

New Single Click Installer: NI Power Electronics Control Development Toolchain (18.2 GB, English)

Note: If you are running Windows 10, deselect "Xilinx Compile Tools for ISE 14.7" because it is not supported on Windows 10. These tools are needed only for the older sbRIO-9606 (PowerPC, Spartan-6)-based General Purpose Inverter Controller (GPIC).

04-20-2017 05:12 PM


Excellent webcast Brian. Thank you for doing this. Definitely "a lot of information in a very short time".

I'm very curious about the TSN synchronization technology you talked about, and I will be digging deeper into this topic. In my thesis, which I completed last year, I implemented a similar grid-synchronization technique that used GPS timestamps to synchronize 3-phase inverters. I used a cRIO-9076 with an NI 9467 as the time source.
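The idea of synchronizing inverters from a shared time base can be sketched in a few lines. The Python below is a minimal illustration, not the thesis or webcast implementation: it assumes every inverter reads the same absolute timestamp in integer nanoseconds (from GPS or a TSN-disciplined clock) referenced to a common epoch, and that the nominal grid frequency is an integer number of hertz. The function name `reference_angles` is hypothetical. Because each unit evaluates the same function of the same clock, their three-phase references line up without exchanging phase information.

```python
import math

TWO_PI = 2 * math.pi


def reference_angles(timestamp_ns: int, freq_hz: int = 60) -> tuple[float, float, float]:
    """Phase A, B, C reference angles (radians, in [0, 2*pi)) from shared absolute time.

    Assumptions (hypothetical helper): all inverters use the same epoch and an
    integer nominal frequency in Hz, so the math below stays exact in integers.
    """
    # Cycles elapsed = t_ns * f / 1e9; keep only the fractional part, scaled by 1e9.
    cycles_scaled = (timestamp_ns * freq_hz) % 1_000_000_000
    theta_a = TWO_PI * cycles_scaled / 1_000_000_000
    # Phases B and C lag and lead phase A by 120 degrees.
    theta_b = (theta_a - TWO_PI / 3) % TWO_PI
    theta_c = (theta_a + TWO_PI / 3) % TWO_PI
    return theta_a, theta_b, theta_c
```

With integer math, whole-second timestamps always land at zero phase for 50 Hz or 60 Hz, which is a convenient sanity check when comparing two units.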

05-01-2017 10:01 PM


Brian,

Can you please point me to the latest co-simulation tutorial available online?

05-05-2017 08:58 AM - edited 05-03-2018 04:57 PM


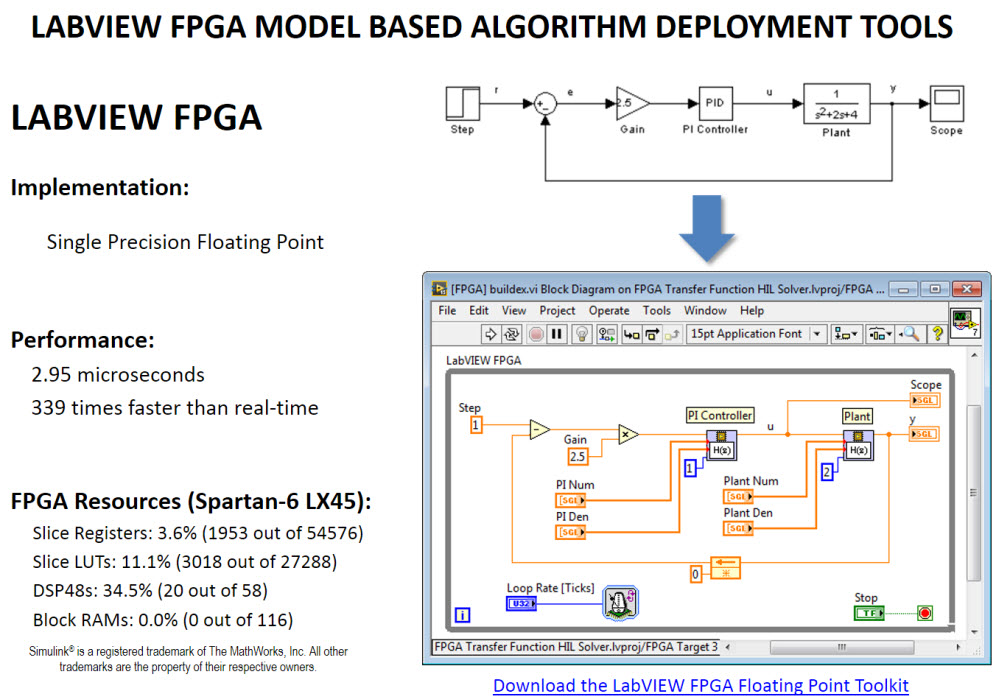

You bet. The latest co-simulation tutorial project is here. You must unzip it to a short path (like C:\LabVIEW 2016); otherwise, files will be broken due to the long paths created by Xilinx Coregen. You can also find recorded training workshops that go with this tutorial code here.

ftp://ftp.ni.com/evaluation/powerdev/training/FPGADNNLV2016.zip
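Because the long-path problem only shows up after extraction, it can help to check an archive before unzipping. The following Python sketch uses the standard `zipfile` module to list members whose extracted path would exceed the classic 260-character Windows `MAX_PATH` limit under a chosen root folder. The helper name `too_long_entries` is hypothetical, and the sketch assumes that classic limit applies to the tools involved.

```python
import zipfile
from pathlib import PureWindowsPath

MAX_PATH = 260  # classic Win32 path limit, including the terminating NUL


def too_long_entries(zip_source, extract_root, limit=MAX_PATH - 1):
    """Return (length, path) for members whose extracted path exceeds `limit` chars.

    `zip_source` may be a file path or a file-like object, as zipfile.ZipFile accepts.
    """
    root = PureWindowsPath(extract_root)
    offenders = []
    with zipfile.ZipFile(zip_source) as zf:
        for name in zf.namelist():
            # Zip member names use '/', which PureWindowsPath also treats as a separator.
            full = str(root / PureWindowsPath(name))
            if len(full) > limit:
                offenders.append((len(full), full))
    # Longest paths first, since those are the most likely to break.
    return sorted(offenders, reverse=True)
```

For example, `too_long_entries("FPGADNNLV2016.zip", r"C:\LabVIEW 2016")` would list any files that still exceed the limit under that root; an empty list suggests the root is short enough.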

Also, some news related to machine learning: this project now includes a new open source LabVIEW FPGA Deep Neural Network (DNN) solver for fully connected multilayer perceptrons (MLPs) with ReLU activation.
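When validating FPGA results, a plain software model of the same network is handy as a reference. This Python sketch computes a fully connected MLP forward pass with ReLU activations; it is not the LabVIEW FPGA solver's code. Assumptions: weights are stored as `weights[j][i]` (input i to neuron j), ReLU is applied to every hidden layer and, by default, not to the output layer, and Python's double-precision floats stand in for the FPGA's single-precision arithmetic, so expect small numeric differences on real data.

```python
def relu(v):
    """Element-wise ReLU: max(0, x)."""
    return [x if x > 0.0 else 0.0 for x in v]


def dense(x, weights, biases):
    """One fully connected layer: y_j = sum_i W[j][i] * x[i] + b[j]."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def mlp_forward(x, layers, relu_on_output=False):
    """Run x through layers given as a list of (weights, biases) pairs."""
    for k, (w, b) in enumerate(layers):
        x = dense(x, w, b)
        is_last = k == len(layers) - 1
        # Hidden layers always use ReLU; the output layer only if requested.
        if not is_last or relu_on_output:
            x = relu(x)
    return x
```

Feeding the same inputs and exported weights to both this model and the FPGA target gives a quick way to spot wiring or indexing mistakes.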

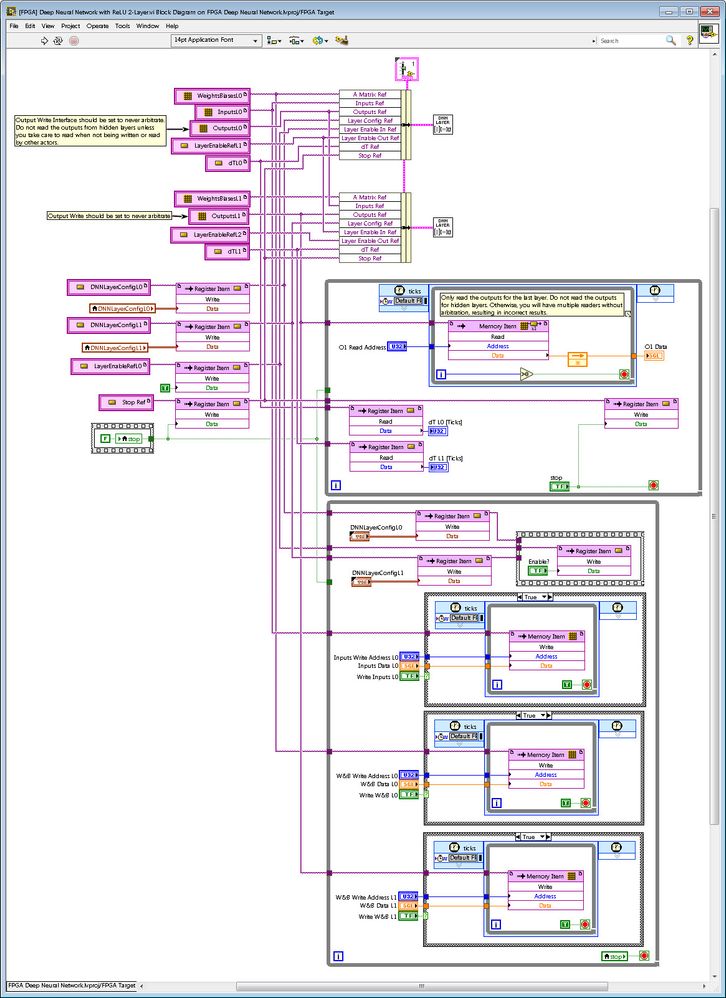

Here is a screenshot of the LabVIEW FPGA code for a two-layer DNN using the new solver. As you can see, the output of the top layer is the input to the layer below. Layer-enable handshaking is also built in, which provides the absolute minimum latency: the top layer triggers the layer below to read its first output on the FPGA clock tick immediately after that output is written.
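The layer-to-layer handshaking described above can be approximated in software with Python generators. In this sketch (an analogy, not a clock-accurate model of the FPGA code), layer 1 emits one activation at a time, and layer 2 folds each value into all of its accumulators as soon as it arrives, so layer 2 starts on layer 1's first output rather than waiting for the whole vector. The function names and the input-major accumulation order are assumptions made for illustration; the optional `trace` list records the interleaving.

```python
def layer1_stream(x, w1, b1, trace=None):
    """Yield layer-1 ReLU activations one neuron at a time."""
    for i, (row, b) in enumerate(zip(w1, b1)):
        if trace is not None:
            trace.append(("L1 out", i))
        yield max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)


def layer2_streaming(inputs, w2, b2, trace=None):
    """Consume each upstream value the moment it is produced.

    Every arriving h_i updates all layer-2 accumulators (acc_j += W2[j][i] * h_i),
    so work on layer 2 overlaps with the remaining layer-1 outputs.
    """
    acc = list(b2)  # start each accumulator at its bias
    for i, h in enumerate(inputs):
        if trace is not None:
            trace.append(("L2 in", i))
        for j in range(len(acc)):
            acc[j] += w2[j][i] * h
    return [max(0.0, a) for a in acc]
```

Passing the same `trace` list to both functions shows the alternating pattern `L1 out 0, L2 in 0, L1 out 1, L2 in 1, ...`, which is the software counterpart of the "read on the next tick" behavior.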

This is a resource-optimized implementation designed to use a relatively small portion of the FPGA resources, so the rest of the FPGA is free for other application code. On the Zynq-7020-based sbRIO-9607 GPIC, you can fit up to a 15-layer deep neural network. The goal for this FPGA DNN solver is to be as efficient as possible: use the minimum amount of FPGA resources while achieving the maximum theoretical performance for the resources used. The floating-point FPGA code is fully pipelined, which means that every math operation (multiply, add, and ReLU) executes on every FPGA clock tick, so no time is wasted! Because each layer executes in parallel, the loop rate does not drop as more layers are added.

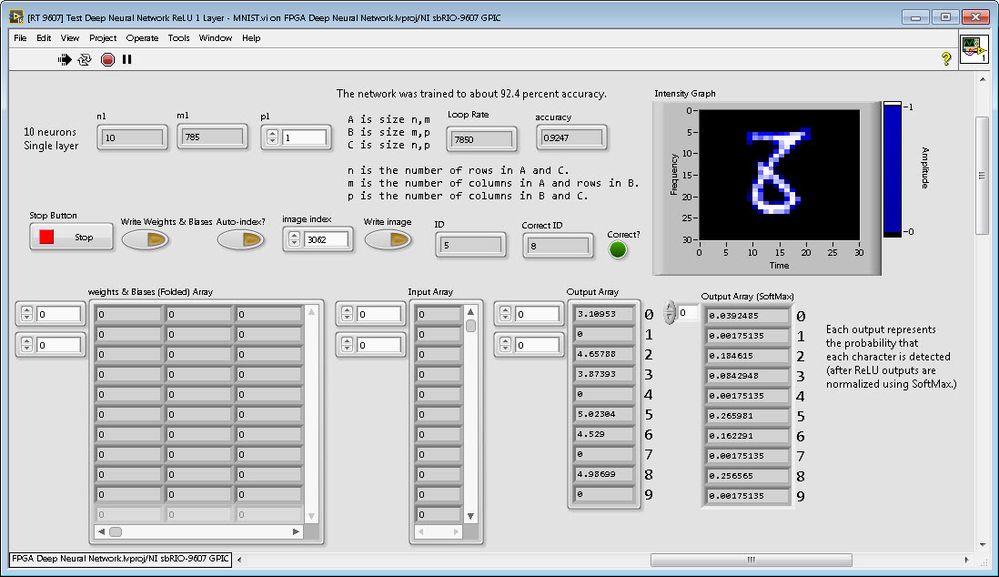

All machine learning tools must include an MNIST example, so here is a screenshot of the "required" MNIST example application running! The execution time is 7850 FPGA clock ticks per image (196 microseconds). If you want even faster performance, we now have a highly parallelized, performance-optimized DNN solver that takes just 3.5 microseconds per image (56 times faster).
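The MNIST timing figures can be checked with a short calculation, assuming the 40 MHz FPGA clock (25 ns per tick) that this thread uses for its loop-rate formula:

$$
\begin{aligned}
t_{\text{image}} &= 7850 \times 25\ \text{ns} = 196.25\ \mu\text{s} \approx 196\ \mu\text{s} \\
\text{speedup} &= \frac{196\ \mu\text{s}}{3.5\ \mu\text{s}} = 56 \\
\text{throughput} &\approx \frac{1}{196.25\ \mu\text{s}} \approx 5{,}096\ \text{images/s} \quad \text{vs.} \quad \frac{1}{3.5\ \mu\text{s}} \approx 285{,}714\ \text{images/s}
\end{aligned}
$$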

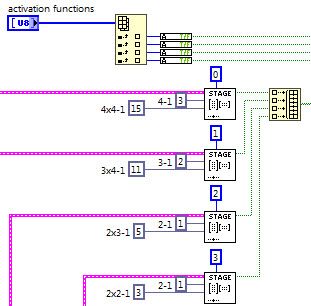

Here is a screenshot of the performance-optimized LabVIEW FPGA DNN solver running a 4-layer DNN with FPGA hardware acceleration. You can download the code here (you must unzip it to a very short path like C:\LabVIEW 2016, or files will be broken). This project also includes bidirectional active front end (AFE) inverter control code for the new myRIO Mycrogrid inverter boards.

A big advantage of the FPGA solvers is that they run in dedicated FPGA hardware. As a result, the latency from physical input to DNN to physical output is extremely low; you can execute multiple DNNs truly in parallel in hardware; you can stream data in and out (using DMA or the new Host Memory Buffer) to keep the DNN crunching on every clock tick; and the performance per watt is better than running on a GPU.

Here are some notes on performance for a couple of typical applications.

Power electronics control application: You can execute an N-layer DNN with 16 inputs, 100 neurons per layer, and 16 outputs at 25 kHz (40 microseconds). This is sufficient for high-bandwidth power electronics control. The loop rate does not depend on the number of layers. The number of layers is limited only by the number of DSP cores on the target (each layer consumes 9 DSP cores, and a Zynq-7020 has 220 DSP cores in total) and by the amount of block RAM. A Zynq-7020 has about 4.8 Mbits of block RAM, which is enough to hold about 150,000 weights and biases, or roughly 6 layers with 158 neurons per layer.
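The resource limits quoted above can be turned into a quick back-of-the-envelope estimator. This Python sketch uses the figures from this thread (220 DSP cores, 9 DSP cores per layer, about 4.8 Mbits of block RAM) and assumes each weight or bias is stored as a 32-bit single-precision value; the function names are hypothetical. It only checks the two limits named here and ignores FPGA resources used by the rest of the application.

```python
ZYNQ_7020_DSP = 220          # DSP cores on a Zynq-7020 (figure from the post)
DSP_PER_LAYER = 9            # DSP cores consumed per DNN layer (figure from the post)
ZYNQ_7020_BRAM_BITS = 4.8e6  # approximate block RAM in bits (figure from the post)
BITS_PER_PARAM = 32          # assumption: single-precision float weights and biases


def max_layers_by_dsp(total_dsp=ZYNQ_7020_DSP, per_layer=DSP_PER_LAYER):
    """Upper bound on layer count from DSP cores alone."""
    return total_dsp // per_layer


def param_capacity(bram_bits=ZYNQ_7020_BRAM_BITS, bits=BITS_PER_PARAM):
    """How many weights and biases fit in block RAM."""
    return int(bram_bits // bits)


def params_needed(layer_sizes):
    """Weights + biases for layer_sizes = [n_in, n_1, ..., n_out]."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))
```

For 6 layers of 158 neurons, `params_needed([158] * 7)` gives 150,732 parameters including biases (149,784 weights alone), close to the 150,000 capacity, hence "roughly". The DSP bound alone would allow 24 layers, so in practice the tighter of the two limits decides the maximum depth.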

DSA waveform analysis application (e.g., accelerometer vibration or electric power quality analysis): You can execute an N-layer DNN with 8 inputs, 100 neurons per layer, and 8 outputs at 50 kHz (20 microseconds). The performance is high enough to perform advanced real-time analytics on every data sample! Also keep in mind that the execution rate does not depend on the number of layers, only on the execution rate of the slowest DNN layer. The loop period for a layer, in 25-nanosecond FPGA clock ticks, equals the number of inputs × the number of neurons; in Hz, the loop rate is f_loop = 40e6 / (Num_inputs × Num_neurons).
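The loop-rate formula above can be expressed directly in code. This Python sketch assumes, as the post states, a 40 MHz FPGA clock and a per-layer period of Num_inputs × Num_neurons ticks; because layers run concurrently, the network rate is set by the slowest layer. The function names are hypothetical.

```python
FPGA_CLOCK_HZ = 40e6  # 25 ns per tick, as stated in the post


def layer_ticks(num_inputs, num_neurons):
    """Clock ticks per layer iteration: one multiply-accumulate per tick."""
    return num_inputs * num_neurons


def layer_loop_rate_hz(num_inputs, num_neurons, clock_hz=FPGA_CLOCK_HZ):
    """f_loop = clock / (Num_inputs * Num_neurons)."""
    return clock_hz / layer_ticks(num_inputs, num_neurons)


def network_loop_rate_hz(layer_sizes, clock_hz=FPGA_CLOCK_HZ):
    """Layers execute in parallel, so the slowest layer sets the loop rate.

    layer_sizes = [n_in, n_1, ..., n_out]
    """
    return min(
        layer_loop_rate_hz(n_in, n_out, clock_hz)
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )
```

`network_loop_rate_hz([16, 100, 16])` returns 25,000 Hz and `network_loop_rate_hz([8, 100, 8])` returns 50,000 Hz, matching the two examples above. If the same formula applies to hidden layers, a 100-input, 100-neuron hidden layer would take 10,000 ticks (4 kHz), and the network would then run at that layer's rate.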

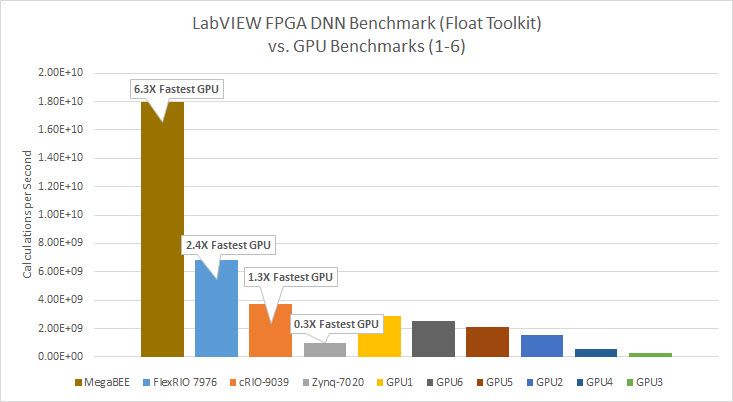

Because the DNN IP core is fully pipelined and takes advantage of the FPGA's parallel distributed memory and DSP computing cores, the performance is excellent. Here is a benchmark comparison of various FPGA targets (with all DSP cores in use) against some GPU benchmarks I found online.

Please respond with any questions, comments or suggestions!

05-05-2017 11:33 AM - last edited on 05-03-2018 05:00 PM by BMac


Thanks.

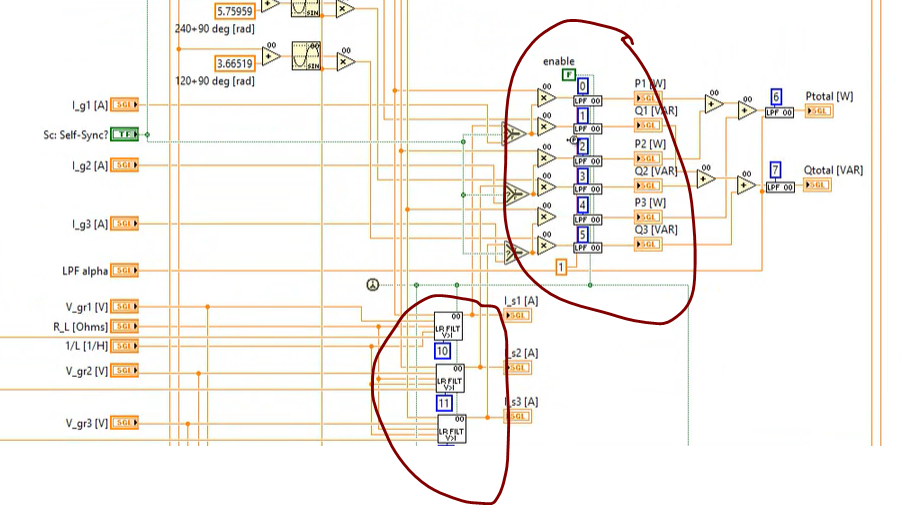

In the videos you recorded, I noticed a rather new way of using the multichannel VIs (e.g., LPF). In the screenshot, I see that you have used the same LPF block at separate locations, simply providing the corresponding channel numbers as constants. I am assuming these are "Multichannel LPF00 blocks" configured for "non-reentrant execution".

I have always used such multichannel subVIs inside a For Loop, passing indexed arrays of the corresponding signals. Is this method, in effect, identical to the For Loop method in terms of FPGA resource usage and timing? Do you have any tips or cautions for using this method in place of the For Loop method?

I wasn't able to find an example VI in the latest GPIC Reference Design project for LabVIEW 2016, but I see you have posted a link to the code here.

Do you have any documentation on the n-channel collector.vi that is used for graph plots in co-sim?
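One way to think about the channel-number pattern is a single filter instance whose per-channel state lives in one shared memory, with the channel input selecting the slot to read and update. The Python sketch below models that idea with a first-order IIR low-pass filter; it is an assumption about how such a multichannel VI behaves, not NI's implementation, and the class name and smoothing factor `alpha` are hypothetical. In this model, calling `step` from separate locations with constant channel numbers and calling it in a loop over channels produce the same results as long as each channel is updated once per sample; how LabVIEW FPGA schedules or arbitrates a shared non-reentrant subVI is not captured here.

```python
class MultichannelLPF:
    """First-order IIR low-pass with per-channel state in one shared array.

    Hypothetical model of a single non-reentrant filter instance: every call
    site shares the same arithmetic and state memory, and the channel number
    selects which state slot is read and written.
    """

    def __init__(self, num_channels, alpha):
        self.alpha = alpha                 # smoothing factor, 0 < alpha <= 1
        self.state = [0.0] * num_channels  # previous output y[n-1] per channel

    def step(self, channel, x):
        """y[n] = y[n-1] + alpha * (x[n] - y[n-1]) for the selected channel."""
        y_prev = self.state[channel]
        y = y_prev + self.alpha * (x - y_prev)
        self.state[channel] = y
        return y
```

Calling `f.step(0, va)` and `f.step(1, vb)` at two separate places is, in this model, equivalent to `for ch, x in enumerate([va, vb]): f.step(ch, x)`; the channels never disturb each other because each one owns its own state slot.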