From 04:00 PM CDT – 08:00 PM CDT (09:00 PM UTC – 01:00 AM UTC) Tuesday, April 16, ni.com will undergo system upgrades that may result in temporary service interruption.

We appreciate your patience as we improve our online experience.

07-09-2014 08:39 AM

Hi,

I'm running the Blockiness test in PQA on a basic video stream (just solid-color frames: one second of black frames, one second of white frames, one second of green frames, and so on). I ran one test with the AVI Single provider and another with the Digital AV provider (an 8133 test bench with 6545 and 2172 modules for HDMI). I get similar behavior in both tests, but the numerical values are totally different. What is the ideal Blockiness value for a perfect frame (no blockiness at all)? Is it 1?

Carolina

07-09-2014 10:34 AM

Carolina1: The quick answer to your question is that the perfect score is 0, meaning no difference across macroblock edges. You can see this by loading the attached AVI in PQA.

Blockiness:

Checkers:
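To make the "perfect score is 0" idea concrete, here is a minimal Python sketch of one common style of blockiness measure: the average luma step across block boundaries minus the average step inside blocks. This is an assumption for illustration, not PQA's Blockiness algorithm; the function name `blockiness`, the 8-pixel block size, and the frame-as-list-of-rows layout are all hypothetical. A solid-color frame has no steps anywhere, so it scores 0.

```python
def blockiness(frame, block=8):
    """Hypothetical blockiness score for a grayscale frame (list of rows).

    Mean absolute step across block edges minus mean step inside blocks,
    clipped at 0. A frame with no visible block edges scores 0.
    """
    h, w = len(frame), len(frame[0])
    edge, inner = [], []
    # Horizontal neighbours: a step at column c crosses a block edge when c % block == 0.
    for r in range(h):
        for c in range(1, w):
            d = abs(frame[r][c] - frame[r][c - 1])
            (edge if c % block == 0 else inner).append(d)
    # Vertical neighbours: same rule for rows.
    for r in range(1, h):
        for c in range(w):
            d = abs(frame[r][c] - frame[r - 1][c])
            (edge if r % block == 0 else inner).append(d)
    if not edge:
        return 0.0
    edge_mean = sum(edge) / len(edge)
    inner_mean = sum(inner) / len(inner) if inner else 0.0
    return max(0.0, edge_mean - inner_mean)


flat = [[128] * 16 for _ in range(16)]
print(blockiness(flat))  # 0.0
```

Subtracting the inside-block average is a design choice so that ordinary texture is not counted as blockiness; only steps that line up with the block grid raise the score.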

07-10-2014 04:34 AM

Hi,

I think the Checkers processor is a better fit for me because I have to test decoder devices (set-top boxes) instead of encoder devices. Thank you for your answer and the video. Do you have the same video in HD (1080p) format?

Carolina

07-10-2014 09:40 AM

About the AVI I created:

I built that video with the IMAQ AVI functionality: I created frames in Paint at the resolution I wanted, then assembled them into an AVI. I am attaching the code and source files I used to make a 1080p video, which you can expand and modify if you'd like. The code isn't perfect; it's just a quick concept.
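If LabVIEW isn't available, similar test content can be generated in other ways. The sketch below is a hypothetical Python stand-in for the Paint-plus-IMAQ-AVI approach, not what the attached code does: it builds solid-color frames as binary PPM images and a per-frame color schedule (one second per color). The function names, the PPM format, and the 30 fps rate are assumptions; assembling the frames into an AVI is left to whatever encoder you use (FFmpeg, for example).

```python
def solid_ppm(width, height, rgb):
    """Return one solid-color frame encoded as a binary PPM (P6) image."""
    r, g, b = rgb
    header = f"P6\n{width} {height}\n255\n".encode("ascii")
    return header + bytes([r, g, b]) * (width * height)


def color_schedule(colors, fps, seconds_each=1):
    """Color of every frame: seconds_each seconds of each color, in order."""
    frames = []
    for rgb in colors:
        frames.extend([rgb] * (fps * seconds_each))
    return frames


BLACK, WHITE, GREEN = (0, 0, 0), (255, 255, 255), (0, 255, 0)

schedule = color_schedule([BLACK, WHITE, GREEN], fps=30)
print(len(schedule))  # 90 frames, 3 seconds at 30 fps
# Each entry would then be written out as e.g. solid_ppm(1920, 1080, rgb).
```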

About Checkers:

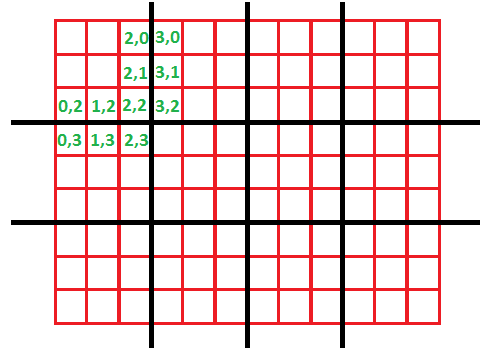

Using the Checkers processor will most likely require some post-processing work in LabVIEW. At a minimum, you will need to analyze the multi-point metrics in PQA rather than the single-point ones. Single-point averages over the entire frame, so if you have one bad block, it's hard to distinguish it from anything else that might be going on. Multi-point breaks the frame up into blocks of the 'Block size' you specify, and effectively returns that 2D array as a 1D array (all of row 0's data, followed by all of row 1's data, then row 2's, and so on), so its results can be challenging to read as well. Basically, you'll be looking for one or more multi-point blocks with a spiked value, indicating that the block ran into a hard edge.
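The row-major layout described above means a flat multi-point index maps back to a block position with one division. A minimal Python sketch, assuming square blocks and that partial blocks at the right and bottom edges are dropped (PQA may handle edge blocks differently; that part is an assumption, as are the helper names):

```python
def grid_shape(width, height, block):
    """Rows and columns of blocks in a frame (partial edge blocks dropped: an assumption)."""
    return height // block, width // block


def flat_to_block(index, width, height, block):
    """Map a flat multi-point index to (block_row, block_col, x, y),
    where (x, y) is the top-left pixel of that block."""
    rows, cols = grid_shape(width, height, block)
    if not 0 <= index < rows * cols:
        raise IndexError(f"index {index} outside {rows}x{cols} grid")
    block_row, block_col = divmod(index, cols)
    return block_row, block_col, block_col * block, block_row * block


print(grid_shape(1920, 1080, 8))          # (135, 240)
print(flat_to_block(241, 1920, 1080, 8))  # (1, 1, 8, 8)
```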

If you bring the Checkers data back into LabVIEW, you can re-compose it into a 2D array and use an intensity graph to better see what the returned data indicates. I have a demonstration of this that I can share, but would prefer not to post it to the public forum. If you'd like, contact your local NI Support team and open a service request, and I can share it internally through them. It will be provided as-is as a demonstration; I can't fully support it.
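Outside LabVIEW, the same re-composition is a reshape of the flat list into rows. The sketch below is hypothetical Python, not the demonstration mentioned above: it rebuilds the 2D grid and reports blocks whose value exceeds the frame median by more than a chosen margin. The margin is an assumption you would tune against known-good video.

```python
from statistics import median


def to_grid(values, cols):
    """Rebuild a row-major flat list into a list of rows."""
    if cols <= 0 or len(values) % cols:
        raise ValueError("length is not a multiple of the column count")
    return [list(values[i:i + cols]) for i in range(0, len(values), cols)]


def find_spikes(grid, margin):
    """Return (row, col, value) for blocks more than `margin` above the frame median."""
    med = median(v for row in grid for v in row)
    return [(r, c, v)
            for r, row in enumerate(grid)
            for c, v in enumerate(row)
            if v - med > margin]


grid = to_grid([1, 1, 1, 1,
                1, 9, 1, 1,
                1, 1, 1, 1], cols=4)
print(find_spikes(grid, margin=3))  # [(1, 1, 9)]
```

Comparing against the median rather than the mean keeps a single spiked block from dragging the baseline up with it.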

One other tip: to get the most granular Checkers results, I would recommend reducing the block size to 4 (the lowest it can go), although this is much more CPU intensive. This block size is separate from the one used for Blockiness.
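For a rough sense of the cost, here is the block-count arithmetic for a 1080p frame, assuming square blocks and whole blocks only (8 is used purely as a comparison point). Halving the block size quadruples the number of blocks evaluated per frame:

$$
\frac{1920}{4}\times\frac{1080}{4} = 480 \times 270 = 129\,600
\qquad\text{vs.}\qquad
\frac{1920}{8}\times\frac{1080}{8} = 240 \times 135 = 32\,400
$$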

About SSIM:

Using Checkers is ultimately challenging unless you can control your video source and use a source that doesn't contain hard, unnatural lines (like on-screen text or borders). If you can control your source, and you can control how it is replayed to your DUT, I would recommend the SSIM processor with a reference video. With this method you create a 'Golden' known-good reference, and then compare your future acquisitions to it. SSIM is an advanced vision algorithm that detects unnatural defects in pictures the way humans perceive them. Something like a macroblocking error will stand out very strongly because it is not natural in a scene.
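For reference, the standard SSIM index combines luminance, contrast, and structure comparisons between a reference and a test image. Below is a minimal single-window Python sketch of that formula with the commonly used constants K1 = 0.01 and K2 = 0.03. It is an assumption that PQA uses these constants, and the standard method computes SSIM over small sliding windows and averages the results, which this simplification skips. Identical frames score exactly 1.

```python
from statistics import fmean


def ssim_global(x, y, peak=255.0):
    """Single-window SSIM between two equal-length lists of pixel values."""
    if len(x) != len(y) or not x:
        raise ValueError("inputs must be non-empty and the same length")
    c1 = (0.01 * peak) ** 2  # stabilizes the luminance term
    c2 = (0.03 * peak) ** 2  # stabilizes the contrast/structure term
    mx, my = fmean(x), fmean(y)
    vx = fmean([(a - mx) * (a - mx) for a in x])
    vy = fmean([(b - my) * (b - my) for b in y])
    cov = fmean([(a - mx) * (b - my) for a, b in zip(x, y)])
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx * mx + my * my + c1) * (vx + vy + c2))


frame = [16, 32, 64, 128, 200, 235]
print(ssim_global(frame, frame))  # 1.0
```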

07-10-2014 10:05 AM

Hi,

I didn't realize Checkers required so much work, and I don't have LabVIEW. But I can control my video: I'm just using images like the ones you sent me, and I create AVI videos the same way you did. I use each video with the AVI Single provider, send it to my set-top box, and finally compare the two videos. I haven't worked with the SSIM or PSNR processors yet, but I will next week.

Carolina

07-10-2014 10:20 AM

If you can control your video source, I would highly recommend using the SSIM metric. It will take a lot of work off your end, because it's really strong at detecting defects. The nice thing about SSIM is that it returns a result from 1 (perfect match) to 0 (no match). You can use the PQA Metrics tab to set a high pass/fail threshold, like 0.95 or more (I'd do some testing to figure out the best limit to use), and it will take care of most of the work for you.
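One way to "do testing to figure out the best limit" is to run several known-good captures against the golden reference and set the limit just below the worst score seen. The Python sketch below assumes you have per-frame SSIM scores as a list of numbers; the margin and the helper names are hypothetical, and in PQA itself the limit is simply entered on the Metrics tab.

```python
def suggest_threshold(known_good_scores, margin=0.01):
    """Hypothetical heuristic: a limit slightly below the worst known-good SSIM score."""
    if not known_good_scores:
        raise ValueError("need at least one known-good score")
    return min(known_good_scores) - margin


def failing_frames(scores, threshold):
    """Indices of frames whose SSIM falls below the pass/fail limit."""
    return [i for i, s in enumerate(scores) if s < threshold]


limit = suggest_threshold([0.991, 0.987, 0.994])     # about 0.977
print(failing_frames([0.990, 0.912, 0.985], limit))  # [1]
```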

PSNR performs a similar task, but has a few drawbacks. It is much more processor intensive than SSIM, and it returns an unbounded result, from 0 to infinity. Overall, the algorithm also takes a different approach.
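To see why PSNR is unbounded, here is the textbook definition as a small Python sketch (an 8-bit peak value of 255 is assumed; this is not PQA's code). Identical frames have zero mean squared error, so the score goes to infinity, while the worst possible 8-bit mismatch gives 0 dB.

```python
import math


def psnr(ref, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(ref) != len(test) or not ref:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((a - b) ** 2 for a, b in zip(ref, test)) / len(ref)
    if mse == 0:
        return math.inf  # perfect match: no finite upper bound
    return 10 * math.log10(peak ** 2 / mse)


print(psnr([0, 0, 0], [0, 0, 0]))  # inf
print(psnr([0, 0], [255, 255]))    # 0.0
```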

The algorithm and work behind SSIM come from very advanced research into how humans perceive images and the defects in them. Humans are very good at naturally detecting very small distortions in an image, and SSIM was designed to emulate that process.

There's no harm in trying both in your design phase, however. If you see similar results, I would recommend sticking with SSIM as it will be simpler to work with.

07-11-2014 04:35 AM

I will take your advice and work with SSIM and PSNR to see what happens.

On the other hand, I want to use the MTF processor too. I found this page and made a video with the same image as theirs. But I don't understand what Resolution value I need to enter, or what the MTF value in the result info (image) means.

I'd like to know how PQA calculates MTF and resolution.

07-11-2014 12:30 PM

We used our own implementation of MTF in PQA, so we can't share all of its details, which are also very complex.

The PQA Help topic NI PQA Executive and the NI PQA Configuration Panel>>NI PQA Executive Components>>NI PQA Tabs>>Processors Tab>>Video Metric Processors>>MTF Contrast Processor is a good starting point for how the processor works.

The 'Resolution' input affects cycles per pixel. In some ways, 'Resolution' and 'Subsampling' relate to how the pixels are subdivided as we scan for edges and contrast.

The 'MTF' output gives us contrast at the edge that was detected.

The 'Resolution' output gives us resolution in cycles per pixel at the MTF that was output.
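As a worked example of the units (standard definitions, not PQA specifics): a pattern that repeats every p pixels has a spatial frequency of 1/p cycles per pixel, so the finest pattern a sampled image can represent, alternating light and dark pixels, sits at the Nyquist frequency of 0.5 cycles per pixel. Imatest also expresses resolution in line widths per picture height (LW/PH), where one cycle is two line widths and H is the picture height in pixels:

$$
f = \frac{1}{p}\ \text{cycles/pixel},\qquad
f_{\mathrm{Nyquist}} = \frac{1}{2} = 0.5\ \text{cycles/pixel},\qquad
\text{LW/PH} = 2\,f\,H
$$

$$
H = 1080,\ f = 0.5:\qquad \text{LW/PH} = 2 \times 0.5 \times 1080 = 1080
$$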

Vision processing and MTF methodology take a good bit of explanation, more than I can offer here. I think the next two links explain the concepts better than I could. They go further into the idea of cycles per pixel, and how it relates to the Fourier transform that is performed on the edge data to return an MTF value:

http://www.imatest.com/docs/sharpness/

http://www.normankoren.com/Tutorials/MTF.html
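Since PQA's MTF implementation isn't public, the following is only a textbook-style illustration of the chain those links describe: differentiate an edge profile (the edge spread function) to get the line spread function, take the magnitude of its discrete Fourier transform, normalize it to 1 at zero frequency, and read frequencies in cycles per pixel. It skips the slanted-edge oversampling and windowing that real tools use, and the names `mtf_from_edge` and `mtf50` are hypothetical.

```python
import cmath
import math


def mtf_from_edge(esf):
    """Return [(cycles_per_pixel, mtf), ...] from an edge profile sampled once per pixel."""
    lsf = [b - a for a, b in zip(esf, esf[1:])]  # line spread function
    n = len(lsf)
    if n < 2:
        raise ValueError("edge profile too short")
    mags = []
    for k in range(n // 2 + 1):  # frequencies 0 .. ~0.5 cycles/pixel
        s = sum(v * cmath.exp(-2j * math.pi * k * i / n) for i, v in enumerate(lsf))
        mags.append((k / n, abs(s)))
    dc = mags[0][1]
    if dc == 0:
        raise ValueError("no edge found")
    return [(f, m / dc) for f, m in mags]


def mtf50(curve):
    """Frequency where MTF first drops below 0.5 (linear interpolation), or None."""
    for (f0, m0), (f1, m1) in zip(curve, curve[1:]):
        if m0 >= 0.5 > m1:
            return f0 + (m0 - 0.5) * (f1 - f0) / (m0 - m1)
    return None


blurred = [0, 0, 0, 0.25, 0.75, 1, 1, 1]
print(round(mtf50(mtf_from_edge(blurred)), 3))  # 0.248
```

A perfectly sharp step keeps MTF at 1 at every frequency (so `mtf50` returns None), while blurring the edge pulls the curve down and moves MTF50 to a lower frequency.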

I'd also like to point out that SSIM will help you detect problems with sharpness and contrast in your incoming video stream compared to a reference stream. You may not need the MTF Contrast processor at all.

Think of SSIM as a kind of catch-all processor. It can detect the problems we've discussed above: compression artifacts from video encoding, macroblocking errors in decoding, issues that make a picture less sharp than it should be, a picture that has been shifted or distorted, and much more.

If your test just requires that you detect these problems, you may not need to specifically test for each one individually. SSIM is great at giving a general pass/fail against a bunch of different types of problems you would encounter, it's just not going to tell you which specific problem caused it to fail (macroblocking error, sharpness, etc).

07-17-2014 07:24 AM

Thanks so much for your help!!

I'm now working with the SSIM processor and getting good results. But I noticed that on black frames, or black-and-white frames, SSIM is lower than on the other, colorful frames. Is that normal?

Carolina

07-17-2014 08:54 AM

I created a simple AVI (attached), created a reference from it, and got a perfect score of 1 from the SSIM processor when I ran the video against that reference. Can you share the test frame or frames that cause the issue?

If it's normal video subject matter, just in black and white or grayscale, I would expect the SSIM algorithm to still work properly. If it is more like a black-and-white test pattern with unnatural shapes and hard edges, I could see the SSIM algorithm having trouble with it, since the research behind it focused on natural scenes. However, I just tried that test pattern too, and it also scored perfectly.

In general, I would still expect SSIM to score properly. You may want to verify that you aren't introducing issues with the codec used to encode the video, or something similar.
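One property of the standard SSIM formula that may be worth checking (an assumption about this setup, not a diagnosis): its luminance term compares mean brightness relative to a small stabilizing constant, so the same small brightness offset costs far more on a near-black frame than on a mid-gray one. If the encoder or the playback chain shifted black by a few levels, black frames would score lower even with nothing else wrong. A minimal Python sketch, using the common constant C1 = (0.01 × 255)², which PQA may or may not use:

```python
def ssim_luminance(mu_ref, mu_test, peak=255.0):
    """Luminance-comparison term of the standard SSIM formula."""
    c1 = (0.01 * peak) ** 2  # assumed stabilizing constant, about 6.5
    return (2 * mu_ref * mu_test + c1) / (mu_ref ** 2 + mu_test ** 2 + c1)


# The same +4 level offset applied to a black frame and to a mid-gray frame.
print(round(ssim_luminance(0, 4), 3))      # 0.289
print(round(ssim_luminance(128, 132), 4))  # 0.9995
```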