Time delay in long-term recording for USB DAQ device

06-19-2017 10:42 AM

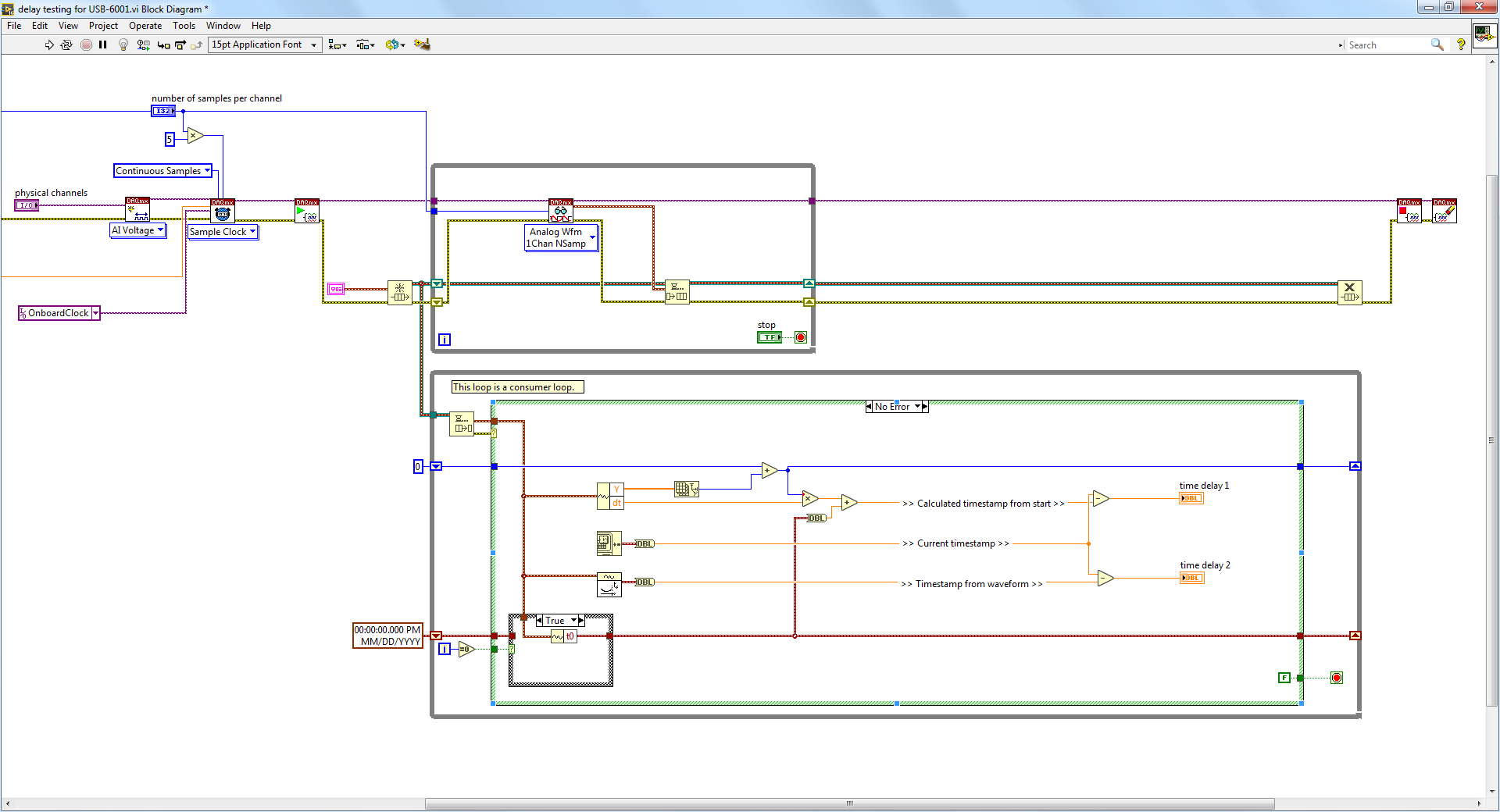

I am working on a project that records a signal at a relatively low sample rate of 250 Hz. My problem is that the USB device (USB-6001) introduces a delay of a couple of seconds every day. I am not sure whether this latency is normal for a USB device. Is there any way to improve it without switching to a PCI device?

http://www.ni.com/white-paper/3509/en/

By time delay I mean the difference, after many hours, between the real timestamp and the timestamp the sample is supposed to have. I wrote a VI to demonstrate it and have attached screenshots.

Any advice or suggestion will be greatly appreciated. Thanks.

06-19-2017 12:53 PM

What you're seeing is not due to *latency* and switching to PCI won't fix it. What you're seeing is a small amount of *inaccuracy* between two timekeeping systems, manifesting as a difference that changes at a fairly consistent rate.

Time reported from the DAQmx task is calculated by something like:

start_time + (num_samples / sample_rate).
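As a rough illustration, here is that formula as a minimal Python sketch. The function name `sample_timestamp` is hypothetical; DAQmx does this bookkeeping internally and does not expose a function like this. The point is that the timestamp depends only on the task's own sample clock, so any timebase error grows with the sample count.

```python
from datetime import datetime, timedelta

def sample_timestamp(start_time: datetime, num_samples: int, sample_rate: float) -> datetime:
    """Timestamp implied by a hardware-clocked task: start_time + n / fs.

    Hypothetical helper: the board's sample clock is the only time reference,
    so any error in its timebase accumulates as num_samples grows.
    """
    return start_time + timedelta(seconds=num_samples / sample_rate)

t0 = datetime(2017, 6, 19, 10, 42, 0)
# One day's worth of samples at 250 Hz
print(sample_timestamp(t0, 250 * 86400, 250.0))  # 2017-06-20 10:42:00
```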

I don't know the typical accuracy specs for a PC's real-time clock. I don't know specific specs for the USB-6001 but a lot of DAQ boards have a timebase spec'ed to 50 parts-per-million base accuracy plus an additional temperature-based coefficient. So let's look at the effect of 50 ppm. In 1 hour, you have 3600 sec which is 3.6 million msec. The accuracy spec would still be met if you accumulated 3.6*50 = 180 msec of error per hour. In your graph, you seem to be heading toward well below 100 msec per hour.
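The ppm arithmetic can be checked with a few lines of Python. The 50 ppm value is the typical DAQ-board figure quoted above, not a confirmed USB-6001 spec, and the helper name is made up for this sketch.

```python
def max_drift_seconds(ppm: float, elapsed_seconds: float) -> float:
    """Worst-case accumulated timing error for a timebase spec'ed at +/- ppm."""
    return elapsed_seconds * ppm / 1e6

hour = 3600.0
day = 24 * hour
print(max_drift_seconds(50, hour))  # 0.18  (180 ms per hour)
print(max_drift_seconds(50, day))   # 4.32  (about 4.3 s per day)
```

So a 50 ppm timebase is allowed to drift by more than 4 s per day, which puts "a couple of seconds every day" comfortably inside such a spec.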

You're very likely well within spec for your board timebase and you can't count on improvement via a desktop board. What you need to do is make a decision about how you'll handle timekeeping in a long-running program. You can believe either the PC's real-time clock or the DAQ board's sample clock, but you're going to need to pick one.

-Kevin P

06-21-2017 08:07 AM

Thanks Kevin.

Your explanation makes sense. I just didn't expect an NI product to accumulate so much time delay per hour. I thought mature products like these would be calibrated to be as accurate as possible. If so, NI should also provide some solution for long-term recording on their end.

06-21-2017 08:41 AM

Yeah, it is a little surprising at first when you track down the long-term effect of a small parts-per-million inaccuracy. On the other hand, the 50 ppm timebase error *is* in the same order of magnitude as the 1-LSB resolution of a lot of their 16-bit A/D converters (1 part in 65,536, or about 15 ppm). In other words, the timebase isn't *uniquely* carrying all the uncertainty.

And FWIW, the discrepancy doesn't necessarily mean that the board is at fault. The internal PC real-time clock has error/uncertainty of its own, and *could* be the bigger culprit here.

Quick little note: querying the PC time-of-day clock is fraught with its own potential issues. On modern OSes, there's often a time sync service that occasionally corrects the real-time clock. A long-running program that relies on querying time-of-day may find time jumping backwards at these moments.
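The same caution applies outside LabVIEW. In Python, for example, `time.time()` follows the OS wall clock, while `time.monotonic()` is documented as never going backward and not being affected by system clock updates. This is a Python analogy for illustration, not LabVIEW code.

```python
import time

# time.time() tracks the wall clock, which a time-sync service may step.
# time.monotonic() never goes backward, so it is safer for elapsed time.
start_wall = time.time()
start_mono = time.monotonic()

time.sleep(0.1)  # stand-in for work done in a long-running loop

elapsed_wall = time.time() - start_wall       # wrong (even negative) if the clock was stepped
elapsed_mono = time.monotonic() - start_mono  # unaffected by clock adjustments
print(f"wall: {elapsed_wall:.3f} s, monotonic: {elapsed_mono:.3f} s")
```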

-Kevin P

06-21-2017 08:52 AM

Kevin,

I really appreciate your help and prompt reply. That explains a lot. But is there an "official" solution for this? Or should I just correct the timing with the PC clock once in a while?

06-21-2017 09:51 AM

I'm not aware of an "official" solution that doesn't involve specialized hardware, and am not experienced with those specialized setups.

I'm not sure what you mean about "correcting the timing with PC time clock once in a while". I'd only recommend you think very carefully about how you do this "correction" and why.

Are you running a clocked sampling task continuously for days at a time? Exactly how are you impacted if "task time" differs from "PC time" by a few seconds per day? Are you correlating dynamic data from the task to infrequent events that are timestamped with PC time?

In a scenario like that, I would let the task be my master time reference. Then if I needed to mark an event, I'd query the task for time or sample #.
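A minimal sketch of "let the task be the master time reference", in Python. `TaskClock`, its methods, and the event label are hypothetical names for illustration. It assumes the application keeps a running count of samples returned by each read; events are then stamped with a sample number (and the task time derived from it) instead of PC time.

```python
from datetime import datetime, timedelta

class TaskClock:
    """Treat the acquisition task as the master time reference.

    Hypothetical helper: call on_read() with the number of samples each read
    returned, and mark_event() whenever something happens that must be
    correlated with the data.
    """

    def __init__(self, t0: datetime, sample_rate: float):
        self.t0 = t0                  # timestamp of the very first sample
        self.sample_rate = sample_rate
        self.samples_read = 0         # running total of samples read so far
        self.events = []              # (label, sample_number, task_time)

    def on_read(self, n_samples: int) -> None:
        self.samples_read += n_samples

    def task_time(self) -> datetime:
        # The formula described earlier in the thread: t0 + n / fs.
        return self.t0 + timedelta(seconds=self.samples_read / self.sample_rate)

    def mark_event(self, label: str) -> None:
        # The sample number stays aligned with the data even if the
        # board's timebase drifts relative to the PC clock.
        self.events.append((label, self.samples_read, self.task_time()))


clock = TaskClock(datetime(2017, 6, 21, 9, 0, 0), 250.0)
for _ in range(4):
    clock.on_read(250)            # four reads of 1 s worth of data each
clock.mark_event("valve opened")  # hypothetical event label
print(clock.events[0])
# ('valve opened', 1000, datetime.datetime(2017, 6, 21, 9, 0, 4))
```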

-Kevin P

06-21-2017 02:45 PM

Thanks Kevin.

My main issue is that when I use a graph indicator to display the acquired signal in real time with a calculated timestamp, which is t0 (the timestamp of the very first sample) + sample number * dt (the reciprocal of the sample rate), the absolute time shown on the graph lags by a few seconds after a few days.

If I run my code for a month, the accumulated delay will be about 1 minute. That's my concern: I always feel like I am losing data samples in a long recording. Also, the difference between the timestamp of my last sample and the current PC time keeps accumulating, just as I demonstrated in the attached VI.

Did I make myself clear?
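Converting the observed drift back into ppm puts it in the same units as the timebase spec. A minimal Python sketch (the helper name is hypothetical): about 1 minute per 30-day month, or about 2 s per day, works out to roughly 23 ppm of combined mismatch between the PC clock and the board's timebase, which is inside the 50 ppm figure quoted earlier as typical for DAQ boards.

```python
def drift_ppm(drift_seconds: float, elapsed_seconds: float) -> float:
    """Relative clock mismatch, in parts per million, implied by an observed drift."""
    return drift_seconds / elapsed_seconds * 1e6

# About 1 minute of drift over a 30-day month
print(round(drift_ppm(60, 30 * 86400), 1))  # 23.1
```

No samples are lost in this picture: the sample count is exact, and only the clock that paces the samples runs at a slightly different rate than the PC clock.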

06-21-2017 03:45 PM

Common solution: a better external timebase that triggers your DAQ. (I've never used a 6k unit; do they support an external trigger clock?)

A GPS disciplined rubidium clock ? 😉 ...

How good is your wristwatch? (and keep in mind the internal XO is 'human oven' stabilized :D)

Seismic recorders often have the same problem at similar sample rates. Some not only record XYZ velocity but also have an extra channel for GPS signals, plus software to resample the data later.
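The "record a reference signal, resample later" idea can be sketched in Python with NumPy. This is an illustration of the general technique (fit a sample-number-to-reference-time mapping, then interpolate onto a uniform grid), not how any particular seismic recorder implements it. It assumes you already know at which sample numbers the reference pulses (e.g. GPS PPS) arrived and their true times; `resample_to_reference` is a hypothetical name.

```python
import numpy as np

def resample_to_reference(data, pulse_indices, pulse_times, fs_nominal):
    """Resample data from a drifting sample clock onto a uniform reference-time grid.

    pulse_indices: sample numbers at which reference pulses were seen
    pulse_times:   reference times of those pulses, in seconds
    fs_nominal:    nominal sample rate in Hz
    """
    data = np.asarray(data, dtype=float)
    n = np.arange(data.size)
    # Fit true_time = slope * n + intercept; assumes a constant drift rate.
    slope, intercept = np.polyfit(pulse_indices, pulse_times, 1)
    true_t = slope * n + intercept
    # Uniform grid at the nominal rate spanning the recording, in reference time.
    grid = np.arange(true_t[0], true_t[-1], 1.0 / fs_nominal)
    # Linear interpolation of the recorded samples onto that grid.
    return grid, np.interp(grid, true_t, data)
```

A single straight-line fit only removes a constant rate error. If the drift wanders with temperature, interpolating piecewise between consecutive pulses would track it more closely.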

Since you wrote a VI to compare the USB device's XO with the system clock, you could try different temperatures at your device and maybe find a temperature at which it matches your system clock 🙂

And yes, PXI systems have solutions for such problems, but a low-cost USB 6k doesn't (and the spec answers most of these questions).

Henrik

LV since v3.1

“ground” is a convenient fantasy

'˙˙˙˙uıɐƃɐ lɐıp puɐ °06 ǝuoɥd ɹnoʎ uɹnʇ ǝsɐǝld 'ʎɹɐuıƃɐɯı sı pǝlɐıp ǝʌɐɥ noʎ ɹǝqɯnu ǝɥʇ'

06-21-2017 03:57 PM

Thanks henrik.

I got your point. If I used an Arduino as an external timebase, I would get better timing accuracy. Otherwise, this is just how it is for a USB device, and there's no workaround.

06-21-2017 05:36 PM

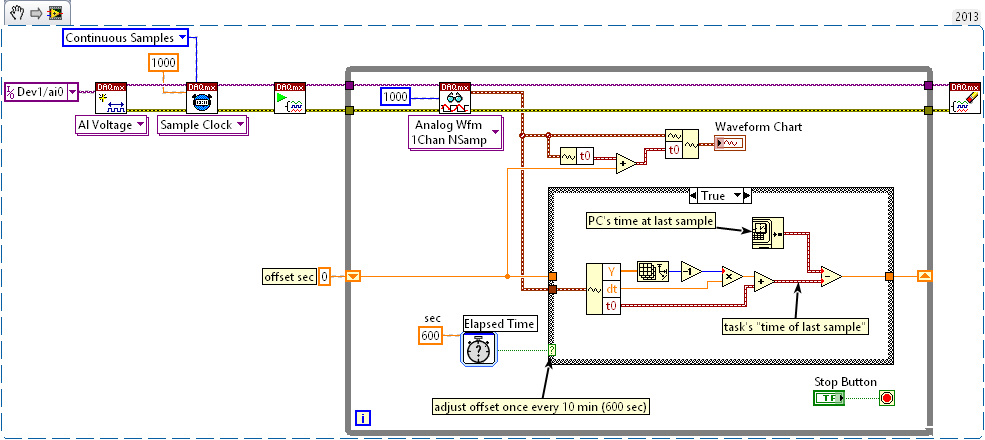

A little workaround would be to manipulate the t0 of the DAQmx waveform *ONLY* along the path that feeds the visible graph. If you're also writing data to file, this kind of simple step-function adjustment is not such a good idea.

The snippet above illustrates how you might adjust time every 10 minutes. Treat it as a starting point. For example, it'll be an improvement to use dataflow to force the query of PC time to occur immediately after returning from DAQmx Read. There could be other little things too, but this should be a head start in the right direction.

I'm gonna let it run overnight to see how it acts over a longer period of time on a desktop data acq board.

-Kevin P
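The display-only t0 adjustment described above can be sketched in Python as well. `DisplayClock` is a hypothetical helper, not a DAQmx API. It assumes the PC time is read immediately after each read returns, so that it roughly matches the block's last sample, and that the caller also supplies a monotonic timestamp (for example from `time.monotonic()`) to decide when the 10-minute resync is due.

```python
from datetime import datetime, timedelta

class DisplayClock:
    """Display-only correction of a waveform's t0 toward PC time.

    Hypothetical helper. Every period_s seconds it measures the offset
    between PC time and task time, then applies that constant offset to the
    t0 of each block sent to the graph. Data logged to file should keep the
    original, unadjusted task timestamps.
    """

    def __init__(self, sample_rate: float, period_s: float = 600.0):
        self.sample_rate = sample_rate
        self.period_s = period_s       # 600 s = every 10 minutes
        self.offset = timedelta(0)     # PC time minus task time
        self.last_sync = None          # monotonic seconds of the last resync

    def display_t0(self, task_t0: datetime, n_samples: int,
                   pc_now: datetime, mono_now: float) -> datetime:
        """Return the t0 to show on the graph for one block of samples.

        Assumes pc_now was read immediately after the read returned, so it
        roughly matches the timestamp of the block's last sample.
        """
        if self.last_sync is None or mono_now - self.last_sync >= self.period_s:
            last_sample = task_t0 + timedelta(seconds=(n_samples - 1) / self.sample_rate)
            self.offset = pc_now - last_sample
            self.last_sync = mono_now
        return task_t0 + self.offset
```

Because the offset only changes at each resync, graph timestamps step by the drift accumulated since the previous resync, which is why this belongs on the display path only and not on data written to file.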