Kinesthesia Toolkit with NI Vision Development Module Data Type

08-25-2014 12:57 AM

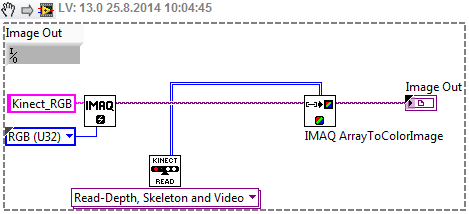

I am working on a project where I need to use a Kinect camera as the vision sensor. I have already managed to get depth and RGB data from the camera using the Kinesthesia toolkit. Now I am trying to use NI IMAQ Vision for object detection, but I am having trouble converting the data between the Kinesthesia toolkit VIs and the IMAQ VIs. Where should I start looking for information on data type conversion, so that I can feed the output of a Kinesthesia VI into an IMAQ VI? Is it even possible to use Kinesthesia with IMAQ for image processing? What else do I need to know to get the job done?

Thank you.

08-25-2014 03:11 AM

Hello,

I have never used Kinesthesia toolkit, but I suppose it would be as easy as this:

If I am not mistaken, Kinesthesia is primarily used to track body/joint movement. If you want to do general object/position detection, I suggest using the OpenNI/PCL framework. There you can map any 2D image coordinate to the corresponding 3D position.
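To illustrate what mapping a 2D image coordinate to a 3D position involves, here is a minimal Python sketch of pinhole back-projection. It is not the OpenNI or PCL API. The function name `pixel_to_point` is hypothetical, and the intrinsic values (`FX`, `FY`, `CX`, `CY`) are assumed placeholders for a 640x480 depth stream; a real setup should use the calibration of the actual sensor.

```python
from typing import Tuple

# Assumed intrinsics for a 640x480 depth stream (placeholders, not calibrated values).
FX, FY = 525.0, 525.0   # focal lengths in pixels
CX, CY = 319.5, 239.5   # principal point in pixels


def pixel_to_point(u: float, v: float, depth_mm: float) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with a depth reading in millimetres
    to an (x, y, z) point in metres in the camera frame."""
    if depth_mm <= 0:
        # Depth sensors commonly report 0 where no measurement is available.
        raise ValueError("no valid depth at this pixel")
    z = depth_mm / 1000.0
    x = (u - CX) * z / FX
    y = (v - CY) * z / FY
    return (x, y, z)
```

A pixel at the principal point lies on the optical axis, so only its z coordinate is non-zero.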

If you are interested, you can take a look at the link in my signature for more information.

Best regards,

K

https://decibel.ni.com/content/blogs/kl3m3n

"Kudos: Users may give one another Kudos on the forums for posts that they found particularly helpful or insightful."

08-25-2014 09:32 PM - edited 08-25-2014 09:34 PM

Hi K,

Thank you for the suggestion and the example. Your blog is very interesting and there is plenty to learn from it. I will try out OpenNI. I am working on an exoskeleton robot to help stroke patients reach objects on a table, so I need both body/joint tracking and object detection. I thought of using NI Vision to detect objects and Kinesthesia to track the joint movements. Am I heading in the right direction? I am also experimenting with OpenCV for the object detection part.

What do you think of OpenCV compared to NI Vision?

Regards,

Renga.

08-26-2014 06:37 AM - edited 08-26-2014 06:38 AM

Hello,

One problem I see with this is that if you want to guide the robot to an object in space, you need the object's 3D position. As far as I have looked, Kinesthesia can give you 3D joint positions, but not the 3D position of an arbitrary point in space. I suppose that you ideally want to match the 3D coordinates of the joint/hand to the 3D coordinates of the object that needs to be picked up?
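As a rough illustration of matching the hand position to the object position, the Python sketch below computes the offset vector and straight-line distance between two 3D points. The function name `reach_vector` is hypothetical, and the sketch assumes both points are already expressed in the same coordinate frame and the same units, which may not hold for joint data and depth data coming from different toolkits.

```python
import math
from typing import Sequence, Tuple


def reach_vector(hand: Sequence[float],
                 target: Sequence[float]) -> Tuple[Tuple[float, ...], float]:
    """Return the offset from the hand to the target and its length.

    Assumes both points share one coordinate frame and one unit."""
    offset = tuple(t - h for h, t in zip(hand, target))
    distance = math.sqrt(sum(c * c for c in offset))
    return offset, distance
```

For example, `reach_vector((0, 0, 0), (3, 4, 0))` gives an offset of `(3, 4, 0)` and a distance of `5.0`.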

I have used the Kinect to track a 2D object and find its corresponding 3D position. This is fairly easy to do with PCL - I used LabVIEW for image processing (and display) and PCL for 3D data processing. The PCL libraries were "integrated" into LabVIEW via a .dll.
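The 2D side of that workflow can be sketched in plain Python as follows. This is not the LabVIEW/PCL implementation described above, only an assumed stand-in: the hypothetical `object_pixel_and_depth` takes a binary object mask and a registered depth image (both as nested lists, depth in millimetres, 0 meaning no reading), finds the mask centroid, and takes the median of the valid depth readings around it so that single bad pixels do not dominate.

```python
from statistics import median
from typing import List, Optional, Tuple


def object_pixel_and_depth(mask: List[List[int]],
                           depth: List[List[int]],
                           window: int = 1) -> Optional[Tuple[int, int, float]]:
    """Return (u, v, depth_mm) for the object marked in `mask`, or None.

    `mask` and `depth` must have the same shape; depth 0 means "no reading"."""
    pixels = [(r, c) for r, row in enumerate(mask) for c, on in enumerate(row) if on]
    if not pixels:
        return None
    # Centroid of the object pixels, rounded to the nearest pixel.
    row = round(sum(p[0] for p in pixels) / len(pixels))
    col = round(sum(p[1] for p in pixels) / len(pixels))
    # Collect valid depth readings in a small window around the centroid.
    samples = [
        depth[r][c]
        for r in range(max(0, row - window), min(len(depth), row + window + 1))
        for c in range(max(0, col - window), min(len(depth[0]), col + window + 1))
        if depth[r][c] > 0
    ]
    if not samples:
        return None
    return col, row, median(samples)
```

The returned pixel and depth can then be converted to a 3D point using the camera intrinsics.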

I believe PCL itself has no skeleton/joint tracking functionality, but I've seen some talk about OpenNI to PCL integration... You can try searching for this online.

You could also take a look at the OpenCV implementation for grabbing images (and 3D data) from Kinect: http://docs.opencv.org/doc/user_guide/ug_highgui.html

Regarding OpenCV and LabVIEW, I have used both and both got the job done :). I do not have a preference, since both have their pros and cons. It's a matter of taste and your project goal - I think OpenCV has more "low-level" functions, whereas LabVIEW IMAQ is more end-goal oriented and is based mainly on "high-level" functions. Also, OpenCV offers some GPU-accelerated functionality.

Best regards,

K

https://decibel.ni.com/content/blogs/kl3m3n