ImageToArray, Flatten Image To String speed

03-22-2017 09:04 AM

Hi everyone,

I'm using an NI cDAQ-9136 to acquire images with a GigE camera. I also want to do some light image processing on that machine. Granted, it's only an Atom processor, but I saw that dedicated 'Vision' systems, e.g. the CVS-1459, feature the same CPU.

By light image processing I mean image moment calculation. I did not find a suitable block in the Vision Toolbox, so now I'm using this one: http://forums.ni.com/t5/forums/v3_1/forumtopicpage/board-id/170/thread-id/724141/page/3

This means that I have to convert the image to an array.
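For readers unfamiliar with image moments, here is a minimal sketch of the calculation on array data. It is plain Python, not the linked LabVIEW VI, and the function names `raw_moment` and `centroid` are made up for illustration. It uses the standard definition of the raw moment, M_pq = sum of x^p * y^q * I(x, y) over all pixels.

```python
def raw_moment(image, p, q):
    """Raw image moment M_pq = sum over x, y of x**p * y**q * I(y, x)."""
    return sum(
        (x ** p) * (y ** q) * value
        for y, row in enumerate(image)
        for x, value in enumerate(row)
    )


def centroid(image):
    """Intensity-weighted centroid (x_bar, y_bar) from the first-order moments."""
    m00 = raw_moment(image, 0, 0)
    if m00 == 0:
        raise ValueError("image has zero total intensity")
    return raw_moment(image, 1, 0) / m00, raw_moment(image, 0, 1) / m00


# Tiny 3x4 test image (rows are y, columns are x) with a bright 2x2 block.
image = [
    [0, 0, 0, 0],
    [0, 10, 10, 0],
    [0, 10, 10, 0],
]
print(raw_moment(image, 0, 0))  # total intensity
print(centroid(image))          # centre of the bright block
```

Nested loops like this are far too slow for a 2048x2048 frame; the sketch only shows what has to be computed per pixel, which is why access to the pixel data as an array matters.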

Due to the limited storage capacity on the cDAQ, I also want to send the acquired images to another machine via TCP/IP. So, I flatten the image to string and send it.

So far, so good. But: For a 2048x2048 16 bit image both operations take a whopping 30...36 ms on average. Actually sending the images takes another 37 ms. Add some overhead and the machine can't keep up with the 15 fps that the camera is sending.
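The timing problem can be checked with a few lines of arithmetic. This sketch assumes that the 30...36 ms figure covers both conversions together and that the steps run one after another in a single loop; the variable names are illustrative.

```python
WIDTH, HEIGHT = 2048, 2048
BYTES_PER_PIXEL = 2          # 16-bit pixels
CAMERA_FPS = 15

frame_bytes = WIDTH * HEIGHT * BYTES_PER_PIXEL
frame_budget_ms = 1000 / CAMERA_FPS

# Timings quoted in the post (milliseconds per frame).
convert_ms = (30, 36)        # ImageToArray plus Flatten Image To String (assumed combined)
send_ms = 37                 # TCP transmission

best_case_ms = convert_ms[0] + send_ms
worst_case_ms = convert_ms[1] + send_ms

print(f"frame size:   {frame_bytes / 2**20:.1f} MiB")
print(f"frame budget: {frame_budget_ms:.1f} ms")
print(f"serial cost:  {best_case_ms}..{worst_case_ms} ms")
print(f"keeps up:     {worst_case_ms <= frame_budget_ms}")
```

Even the best case slightly exceeds the per-frame budget at 15 fps, before any other overhead is added.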

I'm curious what you think about this and how you would approach the problem. I'm a bit surprised that there seems to be no implementation of image moment calculation (with one, I could probably avoid converting to an array).

I think I will switch to a more powerful machine for image processing. But maybe there is another solution?

03-22-2017 05:56 PM

Flattening is really nice in that it encapsulates all info about the image and other metadata into a string that can be unflattened anywhere and is self-describing. If all you want to do is move images around efficiently, it is overkill and has more overhead than a more raw approach.

Here are some suggestions:

- See if you can use the IMAQdx Ring API functions. These have some restrictions but will let you access the image buffers without any copying on the CPU. Using the Grab APIs entails one extra copy.

- Use Vision's Image to EDVR to gain access to image buffers as array data without copying them (this could be used for your processing, too).

- Send the image data as a raw array through TCP using the above EDVR.

- On the receiving end, create an image of the appropriate parameters/size and get an EDVR handle, then copy from the TCP read directly into it.

- Finally, since both of these are quad-core CPUs, you could probably consider pipelining or parallelizing some of these steps. There are some IMAQdx Ring examples that show multiple worker loops processing images from a single queue.
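The "raw array over TCP" idea from the list above can be sketched in a text language as follows. This is Python with plain sockets, not LabVIEW, and the three-field header layout (`width`, `height`, bytes per pixel) is a hypothetical choice for illustration. The receiver allocates the destination buffer once and reads straight into it, which mirrors copying from the TCP read directly into the image.

```python
import socket
import struct
import threading

HEADER = struct.Struct("!III")  # width, height, bytes per pixel (hypothetical layout)


def send_frame(sock, width, height, bytes_per_pixel, pixels):
    """Send a fixed-size header followed by the raw pixel bytes."""
    sock.sendall(HEADER.pack(width, height, bytes_per_pixel))
    sock.sendall(pixels)


def recv_exact(sock, view):
    """Fill the writable memoryview `view` completely from the socket."""
    received = 0
    while received < len(view):
        count = sock.recv_into(view[received:])
        if count == 0:
            raise ConnectionError("socket closed mid-frame")
        received += count


def recv_frame(sock):
    """Read one header, allocate the destination once, and read pixels into it."""
    header = bytearray(HEADER.size)
    recv_exact(sock, memoryview(header))
    width, height, bytes_per_pixel = HEADER.unpack(header)
    pixels = bytearray(width * height * bytes_per_pixel)
    recv_exact(sock, memoryview(pixels))
    return width, height, bytes_per_pixel, pixels


# Loopback demonstration with a small synthetic 16-bit frame.
width, height, bpp = 64, 48, 2
frame = bytes(range(256)) * (width * height * bpp // 256)

server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))          # port 0: let the OS pick a free port
server.listen(1)
port = server.getsockname()[1]


def sender():
    with socket.create_connection(("127.0.0.1", port)) as client:
        send_frame(client, width, height, bpp, frame)


thread = threading.Thread(target=sender)
thread.start()
conn, _ = server.accept()
with conn:
    result = recv_frame(conn)
thread.join()
server.close()

print(result[:3], result[3] == frame)
```

Compared with flattening, the only metadata on the wire is the small header, so sender and receiver have to agree on its layout in advance.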

Hope this helps,

Eric

03-22-2017 09:09 PM

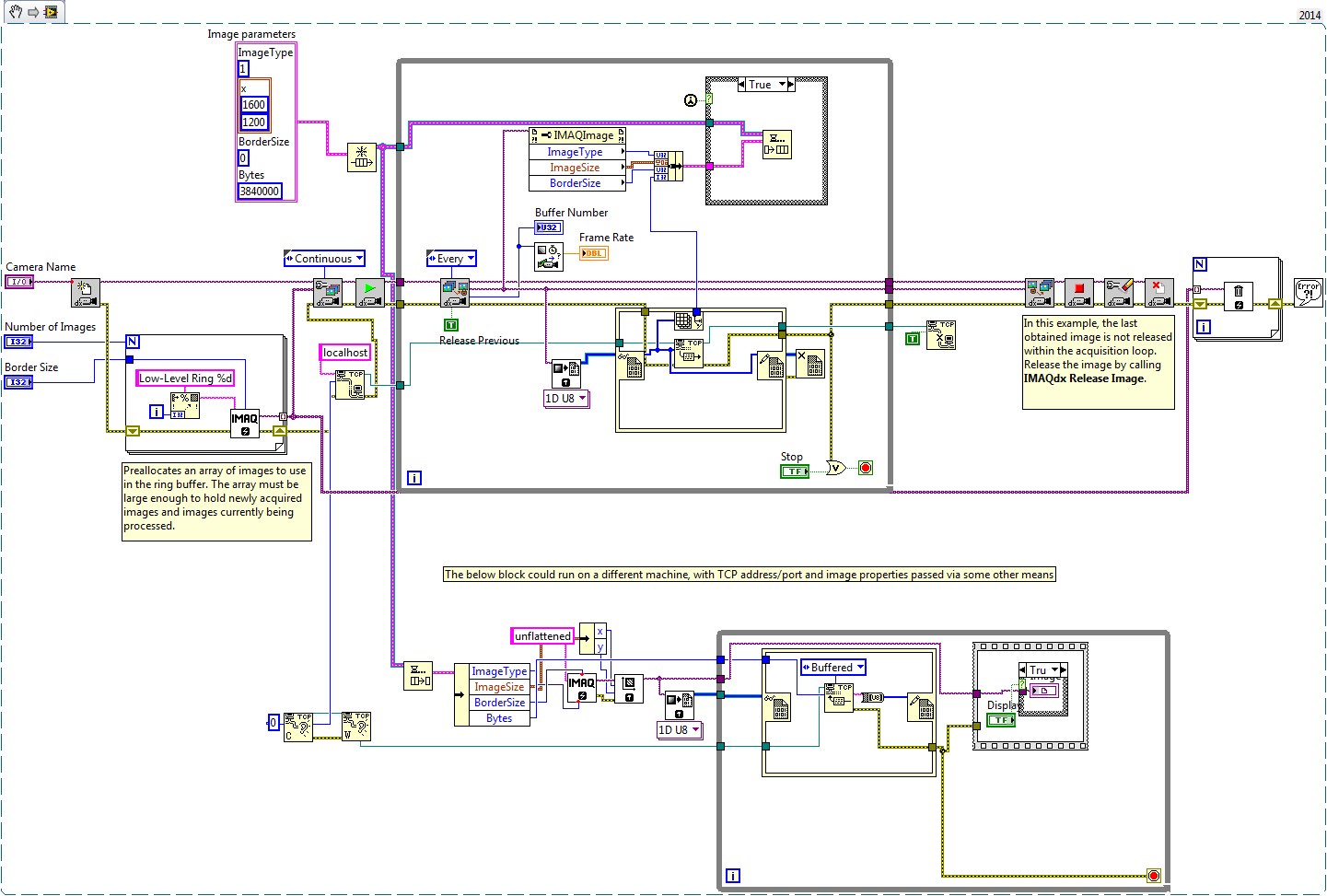

I decided to make a quick example in the hopes that it may be useful. This does the ring, EDVR, and TCP transmission in one loop, with another loop that retrieves the data via TCP and puts it back into an image. You could add an additional conversion to a 2D EDVR for processing, but I used a 1D EDVR so it could be passed directly to/from the TCP VIs.

On my quad-core i7 this takes a couple of percent of CPU at 1600x1200x16bit @ 30FPS (maxing out my gigabit connection). I assume it would be a bit more taxing over a real network link rather than a loopback TCP connection to the same PC, but probably not substantially. On the other hand, each device would only run one side instead of both ends as in this example.

Hope this helps,

Eric

03-23-2017 10:49 AM

Thank you very much!

I had never heard of EDVR before, but it seems very promising. Do you know whether wiring the array data out of the In Place Element Structure is faster than ImageToArray?

Not sure about parallel processing, yet, but I'll try to implement it in my code.

03-23-2017 11:02 AM

Passing the array data out of the in-place-element structure will almost certainly require LabVIEW to make an additional copy of the data. This seems like it would be just as expensive as ImageToArray, but maybe slightly faster if LabVIEW is smarter about re-using buffers instead of re-allocating them each time like ImageToArray would do. You'd certainly be better off just moving your code inside the in-place structure if possible.
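As a loose analogy only (this is Python, not LabVIEW, and says nothing about how LabVIEW manages its buffers), the difference between working on data in place and passing it out as a separate array looks like this:

```python
import array

# A hypothetical 4x4 16-bit image stored as one contiguous buffer.
pixels = array.array("H", range(16))

view = memoryview(pixels)      # in-place access: no pixel data is copied
copy = pixels.tolist()         # "passing the data out": a full copy

view[0] = 999                  # writes through to the original buffer
print(pixels[0])               # the original sees the change
print(copy[0])                 # the copy still holds the old value
```

The view costs nothing per pixel, while the copy scales with the image size, which is the cost that moving the processing inside the in-place structure avoids.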

03-28-2017 08:29 AM - edited 03-28-2017 08:56 AM

Eric, thanks again!

I implemented your solution in my code and basically it seems to work. But I think I'm not sending _every_ frame (Haven't really checked, yet).

The camera produces 2048x2048x16 bit images at 13.3 fps, so approx. 106.4 MBytes/s. However, what I'm sending via TCP is only about 75 MBytes/s. CPU load is 10...15 %.

Any idea where the remaining frames are? Or is there some compression happening that I don't know about?
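The numbers in this post can be cross-checked quickly. The sketch assumes the observed 75 MBytes/s uses the same binary units as the 106.4 MBytes/s figure, which the post does not state.

```python
frame_bytes = 2048 * 2048 * 2          # 16-bit pixels
camera_fps = 13.3

camera_rate = frame_bytes * camera_fps  # bytes per second
print(f"camera:  {camera_rate / 2**20:.1f} MiB/s ({camera_rate / 1e6:.1f} MB/s)")

# Assumption: the observed 75 MBytes/s is in the same binary units
# as the 106.4 MBytes/s figure quoted for the camera.
sent_rate = 75 * 2**20
sent_fps = sent_rate / frame_bytes
print(f"sent:    {sent_fps:.1f} fps")
print(f"missing: {camera_fps - sent_fps:.1f} fps")
```

Raw pixel data sent this way is not compressed, so a lower byte rate on the wire implies that fewer frames are being sent.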

Edit: From what I have read so far, TCP is very robust. It's not maxing out the connection, so I assume the image acquisition is the problem. But the buffer mode in Extract Image is set to 'Every', so does that mean not every image is transferred to memory even though the camera is clearly sending at full throttle? I'm confused.

03-28-2017 09:30 AM

Hi,

The code I posted has a sort of "flow control" that limits where images can be dropped. Extracting an image from the ring locks it and prevents it from being recycled for the acquisition until it is released (in my example it is released when the next buffer is extracted, but you can also release buffers explicitly, which is helpful if you queue them to other worker loops). Both the TCP writer and the TCP reader have flow control as well, so no data is lost there either, but if either side is slow, it pushes back on the other (and eventually on the acquisition). When the buffers fall behind on a GigE camera acquisition, images that arrive from the camera without a free buffer to go into are simply dropped. There are counters you can read ("Skipped buffers", I think) that will tell you this.
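A toy model may make the push-back behaviour easier to picture. This is a deliberately simplified Python simulation, not the IMAQdx driver logic: the camera delivers one frame per tick into a fixed ring, a single consumer locks one buffer at a time, and the function name `simulate` and its parameters are hypothetical.

```python
from collections import deque


def simulate(frames, ring_size, ticks_per_frame):
    """Simulate a camera delivering one frame per tick into a fixed ring.

    The consumer holds one buffer for `ticks_per_frame` ticks before releasing
    it. A frame that arrives while every buffer is occupied is skipped.
    Returns (processed, skipped).
    """
    pending = deque()        # filled buffers waiting for the consumer
    busy_until = 0           # tick at which the consumer releases its buffer
    holding = False          # whether the consumer currently locks a buffer
    processed = skipped = 0

    for tick in range(frames):
        # Consumer releases its buffer once its work is finished.
        if holding and tick >= busy_until:
            holding = False
            processed += 1
        # Camera delivers a frame; it needs a free buffer in the ring.
        in_use = len(pending) + (1 if holding else 0)
        if in_use < ring_size:
            pending.append(tick)
        else:
            skipped += 1
        # Idle consumer extracts (locks) the oldest filled buffer.
        if not holding and pending:
            pending.popleft()
            holding = True
            busy_until = tick + ticks_per_frame
    return processed, skipped


print(simulate(100, ring_size=4, ticks_per_frame=1))  # consumer keeps up
print(simulate(100, ring_size=4, ticks_per_frame=2))  # consumer too slow
```

In this model a larger ring only delays the first skipped frame; once the consumer is slower than the camera on average, frames are lost no matter how many buffers there are.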

While the buffer mode may be set to "Every", this only affects which particular buffer number is selected to be waited on. It doesn't affect the behavior if you get behind, and the buffer you request is no longer available. There are a few ways you can monitor this:

- Calculate the difference between each returned buffer number and the next one, looking for discontinuities.

- Use the "Processed frame rate" output of the Calculate Frame Rate VI included in the example, in addition to the acquired frame rate output. The VI uses the same buffer-number difference as the first suggestion to calculate the two values.

- Configure the acquisition attributes to return an error when extracting a buffer that is no longer available, instead of returning a different one (the default behavior).
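The first suggestion, looking for discontinuities in the buffer numbers, amounts to the following. This is a Python sketch with made-up buffer numbers; `count_missed` is a hypothetical helper, not part of any NI API.

```python
def count_missed(buffer_numbers):
    """Return (missed_total, gaps) for a sequence of extracted buffer numbers.

    `gaps` lists (previous, current) pairs where the step was larger than one.
    """
    missed = 0
    gaps = []
    for previous, current in zip(buffer_numbers, buffer_numbers[1:]):
        step = current - previous
        if step > 1:
            missed += step - 1
            gaps.append((previous, current))
    return missed, gaps


# Hypothetical buffer numbers as returned by successive extract calls.
numbers = [0, 1, 2, 4, 5, 8, 9]
missed, gaps = count_missed(numbers)
print(missed, gaps)
```

A check like this only sees gaps in the numbers it is given, so it cannot reveal frames that never received a buffer number in the first place.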

Also note that "skipped" buffers (ones that the GigE acquisition has to drop) are not counted in the sequential buffer numbers. Because of the nature of the ring acquisition code I posted, where a buffer is not released until the next loop iteration, it is likely that these are where the buffers are being dropped.

As to why you are hitting this, my suspicion is that something along the TCP path is slowing things down. I am pretty sure the x64 cRIO or CVS can push line rate TCP to another target of the same type without much issue, but I am not 100% sure about stock LabVIEW code. It could also be the receiver end---what sort of system is that?

03-29-2017 02:34 AM

Thanks again for your insight!

I haven't checked buffer numbers yet, but you were right about the receiving system. It's an identical cDAQ. I currently don't have any other system available, so I was trying to display each received image. Unsurprisingly, that is a considerable task for a machine that is not supposed to display anything. So I disconnected the image display, and I can now transmit around 11 fps. Not quite there yet, but it's a start.

"I am pretty sure the x64 cRIO or CVS can push line rate TCP to another target of the same type without much issue, but I am not 100% sure about stock LabVIEW code."

Can you elaborate on that? Do you mean that LabVIEW's TCP VIs could ultimately restrict the data rate?

03-29-2017 01:43 PM

"Can you elaborate on that? Do you mean that LabVIEW's TCP VIs could ultimately restrict the data rate?"

Possibly. There are quite a few factors that go into TCP performance:

- APIs used (OSes like Windows have more than one way to access TCP)

- TCP window sizes (LabVIEW doesn't really let you tweak this, though there may be KBs out there that say how to change it)

- Sizes of reads/writes to the API (in some cases LabVIEW may directly translate to a BSD socket read/write of the same size, but in others it does its own buffering)

I know for sure that with decent options passed, running "iperf" between multiple x64 cRIO/cDAQ devices can hit line rate gigabit. I haven't attempted the same with LabVIEW TCP VIs. I know LabVIEW uses very different implementations to talk to the TCP stack on Windows vs Linux (Linux uses standard BSD sockets, while the Windows code does not), so there may be some performance differences compared to running iperf.
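To illustrate the kind of knob that plain BSD sockets expose, here is a short Python sketch that requests larger kernel send and receive buffers, which bound how much data TCP can keep in flight. It is not LabVIEW, the 4 MiB value is an arbitrary example, and the operating system is free to round or cap whatever is requested.

```python
import socket

sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

# Ask for larger kernel buffers; the OS may round, double, or cap the value.
requested = 4 * 1024 * 1024
sock.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, requested)
sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, requested)

send_buffer = sock.getsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF)
recv_buffer = sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
print(f"send buffer: {send_buffer} bytes, receive buffer: {recv_buffer} bytes")
sock.close()
```

Tools such as iperf let you pass comparable options on the command line, which is one reason their throughput can differ from an application that runs with default settings.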