using a C++ DLL in LabVIEW

Solved! 05-26-2016 07:01 PM

I have a DLL that has been written in C++, and the typedef inside the C++ code is defined as:

CY_BUFFER

Definition

    typedef struct
    {
        int size;
        char* data;
    } CY_BUFFER;

There is a function that should return the version number as shown below:

C Function

CY_INT version(CY_BUFFER* buffer);

A sample of C code to write to call the DLL is listed here:

Example in C

    #include "cy_runtime.h"

    const int CUDA_DEVICE = 0;

    if(initialize(CUDA_DEVICE) != CY_SUCCESS)
        return -1;

    CY_BUFFER buffer;

    if(init_buffer(&buffer) != CY_SUCCESS)
        return -1;

    if(version(&buffer) != CY_SUCCESS)
        return -1;

    if(free_buffer(&buffer) != CY_SUCCESS)
        return -1;

I have built a LabVIEW wrapper using the Import DLL wizard, but cannot figure out what I need to do next. I have read "Dereferencing pointers from C/C++ DLLs in LabVIEW", but I cannot understand how to apply it. Is there anybody who can point me in the right direction? I cannot modify the C++ DLL, but I do know the data types in theory; I just cannot figure out what the wizard is trying to do in the GetValueByPointer VI code that it generates automatically. If it's C++, should I skip the wizard altogether and create the wrapper from scratch? Any help would be gratefully appreciated.

05-26-2016 08:23 PM

If you built the wrapper with the import wizard, why is anything else in your post important? The wrapper already gives you the data types.

If you didn't do so successfully, where did things run into problems and what exactly are the problems?

05-27-2016 09:59 AM

Good question, I appreciate the request for clarification.

The wrappers that are automatically created run but do not correctly pull the data types out. I think this is a known issue for C++ generated DLLs (from what I've read), but I could be wrong on this.

Anyway, the challenge is that the newly wrapped DLLs run without errors, but nothing that comes back resembles the data I am expecting.

I have attached the code that is generated, which seems strange in itself, as it appears to contain a race condition where the data could be overwritten before it is translated into what it is supposed to be. The code that attempts the memory pointer lookup (I presume) does not return anything resembling the expected result, so I assume the wizard does not understand the data type from the .h file and attempts something that isn't correct. When looking on the forums I found a post describing how you should dereference pointers for C++ DLLs (https://decibel.ni.com/content/docs/DOC-9091), but I cannot figure out what I am supposed to do. It seems that I have all the information, but there is a disconnect (for me at least) between it and what needs to be done, even for a relatively simple example. Maybe there is no way around it and you have to wrap the C++ DLL in a C DLL and then call that from LabVIEW, but that just seems wrong! Again, any help would be appreciated. Thanks,

05-27-2016 05:54 PM

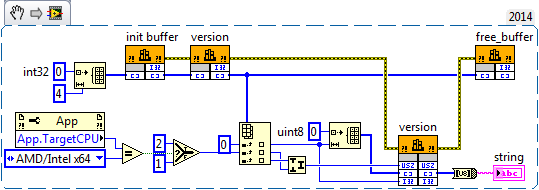

Based on the C sample code you provided, the problem isn't C++. Rather, it's that you're not duplicating the C sample code. There's an "init_buffer" function that you should call to initialize the buffer before you try to call the "version" function (and, make sure you call the "free_buffer" function after you're done with the buffer).

You can delete the pointer allocation and deallocation from the function the wizard created, because the init_buffer and free_buffer functions take care of that for you. There is no potential for a race condition: dataflow guarantees that you will always read the pointer value from data before it gets set to 0.

Do you know that the string returned by "version" is null-terminated? If it is not, you should inspect the size element and read only that number of bytes (you'll need to use MoveBlock for this, since GetValueByPointer doesn't have an option to limit the string size). Are you sure the version information is returned as a human-readable string?

05-30-2016 05:07 AM - edited 05-30-2016 05:13 AM

In addition to what Nathan said, you most likely have an alignment problem. C compilers tend to align the elements in a struct to their natural size. That means the char pointer will be aligned to 4 bytes when you use 32-bit LabVIEW and to 8 bytes when you use 64-bit LabVIEW. Since the DLL also provides both the init and the free function, this makes things a little easier. You can simply create an array of four 32-bit integers (16 bytes, enough for either layout), pass it as a pointer to array to the init_buffer(), version(), and free_buffer() functions, and the only extra step before freeing is a call to MoveBlock() in which you adjust the offset into that array according to the bitness of LabVIEW.

This does assume a few things:

1) That your struct is indeed using 8-byte alignment, which puts the pointer at byte offset 4 in the structure for 32-bit LabVIEW and at byte offset 8 for 64-bit LabVIEW. Are you by any chance using 64-bit LabVIEW now, given that you have these 4 extra elements in the cluster between size and the pointer?

2) That the size element in the struct actually indicates how much data is in the char buffer.

And error handling for the DLL functions has been completely omitted. To match the C code you have shown you should always check the function return value too before continuing.

06-03-2016 10:17 AM

Thank you so much for your help. This was proving very challenging for me to overcome; now I understand, and your code worked directly after repointing the DLL. Much appreciated, now moving on to the rest of the functions. A question for the NI team: why don't you incorporate the 32-bit versus 64-bit handling into the Import DLL wizard?

Thanks again.

06-03-2016 10:44 AM - edited 06-03-2016 10:56 AM

The Import Library Wizard is already complex as hell to implement while making sure it does the right thing for what it is able to determine. There is no way that it could automatically detect things like the alignment in use. These things can be set in the header with #pragmas, but pragmas are notoriously non-portable across C compilers. Usually they are not even set in the header but rather through a compiler option, and then nobody documents them anywhere (and it's not safe to assume that an undocumented setting is the C compiler's default, also because different C compilers each have their own idea of what the best defaults are).

So the only way that could be supported would be through even more configuration settings. Now, most Import Shared Library Wizard users struggle already with the few settings that you can provide ("additional include directories" and "preprocessor defines") and adding even more possible selections would not make this any better.

The fact is that the C syntax in header files is not suitable for describing the interface of a function library completely, but there is also no other commonly accepted standard for describing a shared library interface. Because of that, there is simply no possible way to make an Import Library Wizard that could create a fully finished LabVIEW library for anything but the most trivial functional interface. So if you want to interface to shared libraries you really need to understand the issues involved, read the prose documentation of the DLL, understand how the function would need to be called in a C program, and then figure out how to do that in LabVIEW. There is simply no other way, even if NI invested an infinite amount of resources in the Import Shared Library Wizard (which they of course can't). The C header file simply doesn't give enough information to do all of this automatically (and the DLL gives absolutely nothing besides the names of the callable functions).

And since you seem to have run this on a 64-bit LabVIEW system, the Import Library Wizard actually seems to have accounted for the common default alignment of 8 bytes, since it added those 4 filler elements. That it didn't create bitness-neutral code which would work equally on 32-bit and 64-bit systems is very understandable. It would add a lot of complexity to the Wizard's code generation for pretty dubious benefits. It's not even clear that a 32-bit library is available. And even if there is one, it is not clear that it uses the same interface definition. There could be a 32-bit library with very different function parameters or even function names.

As it is, the Wizard is a tool that eases the integration, but it simply can't create a VI library that is guaranteed to be correct for functions that use any kind of pointers or structs. The limitations of what can be expressed in C syntax simply don't allow that. Therefore use the Wizard and then review the created code scrupulously, and I mean really scrupulously. Assuming that the Wizard created fully functional code just because it doesn't crash when you run it is a sure way into huge trouble if you ever intend to use that library in anything but hobby projects.

06-16-2016 02:48 PM

I think I need to add the following question to the same forum, as it is related I believe.

I have another function that I would like to call that returns an image.

I have the definition of the image in C below:

06-17-2016 03:03 AM - edited 06-17-2016 03:19 AM

Well there is a good reason why there are not many alternatives to the LabVIEW IMAQ Vision toolkit. And that reason is, that image data is notoriously complicated and difficult to understand when you talk about the memory layout.

As you can see from your struct, you have a height and a width, which indicate the number of pixels in each direction. Then you have the number of channels, which is usually the number of color planes: 1 for grey scale, 3 for RGB and 4 for RGBA (the A in there is for Alpha, a channel that can indicate how translucent a pixel is). Besides RGB you also have YUV, CMYK, RGBs, CIELab and several others. To make matters even more interesting, the order of the data for RGB can also be arranged as, for instance, GBR, BGR, GRB, or any other permutation of these 3, with a possible Alpha channel in front, somewhere in the middle or at the end. And it doesn't end there, because the different channels can be interleaved, such as RGBRGBRGB, or they can be separated in memory as RRRGGGBBB. Last but not least, you often have implicit or explicit padding so that each pixel or each pixel line starts on an even address or on a multiple of 4, which makes it more efficient for optimized machine code to process the data.

And then you have also the channel_depth which would indicate how many bits (or maybe bytes) each channel element for a pixel contains.

As you can see there are MANY variations and also often implicit assumptions that you have to extract from the according library documentation before you can even start to consider writing a single line of code.

Once you know that for your external library and for the IMAQ Vision data, you can think about converting one to the other. For that you want to dereference the "data" pointer in your structure, which contains the data as a continuous stream of bytes in the format that you have to determine from all the above and the library documentation, and copy it over into the data pointer that you can retrieve from the IMAQ Vision image through the "IMAQ GetImagePixelPtr" VI. But wait!!!!

Before you even try to retrieve that pointer make sure you have first created the picture with the right image format through "IMAQ Create" and set its width and height to the necessary dimensions through "IMAQ SetImageSize". The allocated memory behind that pointer will not automagically resize as you reference its contents to write your data into it. You have to make sure it is allocated correctly before trying to get that pointer, by setting the image type and image size first!!

If this all sounds complicated, that is because it is complicated, and if you want to work on that level you really should understand low-level programming in a language like C pretty well.

@ADLADL wrote:

I understand the last code that was posted, but couldn't figure out how you knew to interpret the memory buffer in this manner from the code that I posted. I am familiar with the first byte typically describing the length of the bytes to read, but couldn't see where that was spelled out. I guess if someone can help me with the image, then maybe everything else becomes easy. Is this one of the most complex data types?

There is nothing magic about understanding how that works. It's simply a combination of knowing how a C compiler translates a struct like

    typedef struct
    {
        int size;
        char* data;
    } CY_BUFFER;

into a memory area and how that correlates to LabVIEW, which in essence is written in C(++) too and arranges the data internally in the same way, although with certain LabVIEW specific constraints, which can also differ between LabVIEW platforms (Windows, Mac, Linux, RT targets and 32 bit and 64 bit variants thereof).

Unfortunately there is not really a good book that describes all these things in an easy to understand way. Most of what I know I have gathered from various tidbits here and there in the course of 25 years of LabVIEW and C programming and lots of trial and error, and here and there from looking at disassembled code to understand how a certain C construct translates into machine code.

I have developed code many times for customers to interface such libraries to LabVIEW, but that usually also meant that they wouldn't like me to post the work they paid us for on a forum as an example of how to do this. If you want to set a precedent here, we can talk about that.

01-10-2018 09:36 PM

Thanks for your posts, rolfk, I have been reading quite a few over the past few days trying to figure out issues I'm having with a C++ DLL in LabVIEW. Unfortunately, I do not know C++ and am not sure how to move forward with my project.

I am trying to automate data acquisition with a Hitachi Vortex-EX X-ray detector but am getting inconsistent results. The software that comes with the detector consistently functions correctly but cannot be automated, hence the use of LV.

I am running 32-bit LV2017 on a 64 bit Windows 10 environment, both are fully updated.

The library (VTXDLL-NT.dll) has many dependencies, all of which I keep in the same folder as my VIs. The manual states that the library was "developed on the Win32 platform with Microsoft VC++ 6.0 under Windows 2000/XP and was designed to accept calls from C++, C or VB programs."

I have used both the "Import Shared Library" wizard as well as the "Call Function Library Node" to try to connect. After a call fails, I power cycle the instruments and copy over "clean" versions of all the DLLs. Sometimes this causes the functions to work (about 30% of the time).

Based on other posts, I am wondering if the library requires a C wrapper before being wrapped in a LabVIEW wrapper, but I could be mistaken.

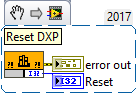

As an example, I have what appears to be a simple call to the function vtxDXPReset(). From the manual:

int vtxDXPReset( );

Arguments : None.

Remarks : Function performs DXP reset. It can be called whenever an abnormal DXP condition occurs. For example, in the cases of sudden appearance of abnormal noise peaks, sudden peak broadening or shift, the Status or Rate indicator on the electronic box becomes red constantly or the yellow I/O indicator stays on without flashing during the acquisition, etc. It returns 0 if successful, or an error code if function fails (see Appendix).

My LabVIEW code is as follows:

and the Call Function Library Node is configured as:

As I've said, this request works every time through the accompanying Pi-Spect software but only sometimes in LV. When it fails, one of two things happens: 1) I get error 1097 through the LV error handler, or 2) the function returns error code 1001, "Error loading XIA Systems." XIA refers to the manufacturer of the electronics downstream of the pre-amplifier, and there are several associated DLLs that have "xia" in the name.

I have tried both calling conventions and specifying the full path in the VI diagram without consistent success. I have also tried all the different data types including "void".

Please let me know if I am leaving out any important pieces of information. Thank you for your time and assistance.