
pipeline daqmx read task in a while loop

01-14-2016 05:39 PM


Hi guys,

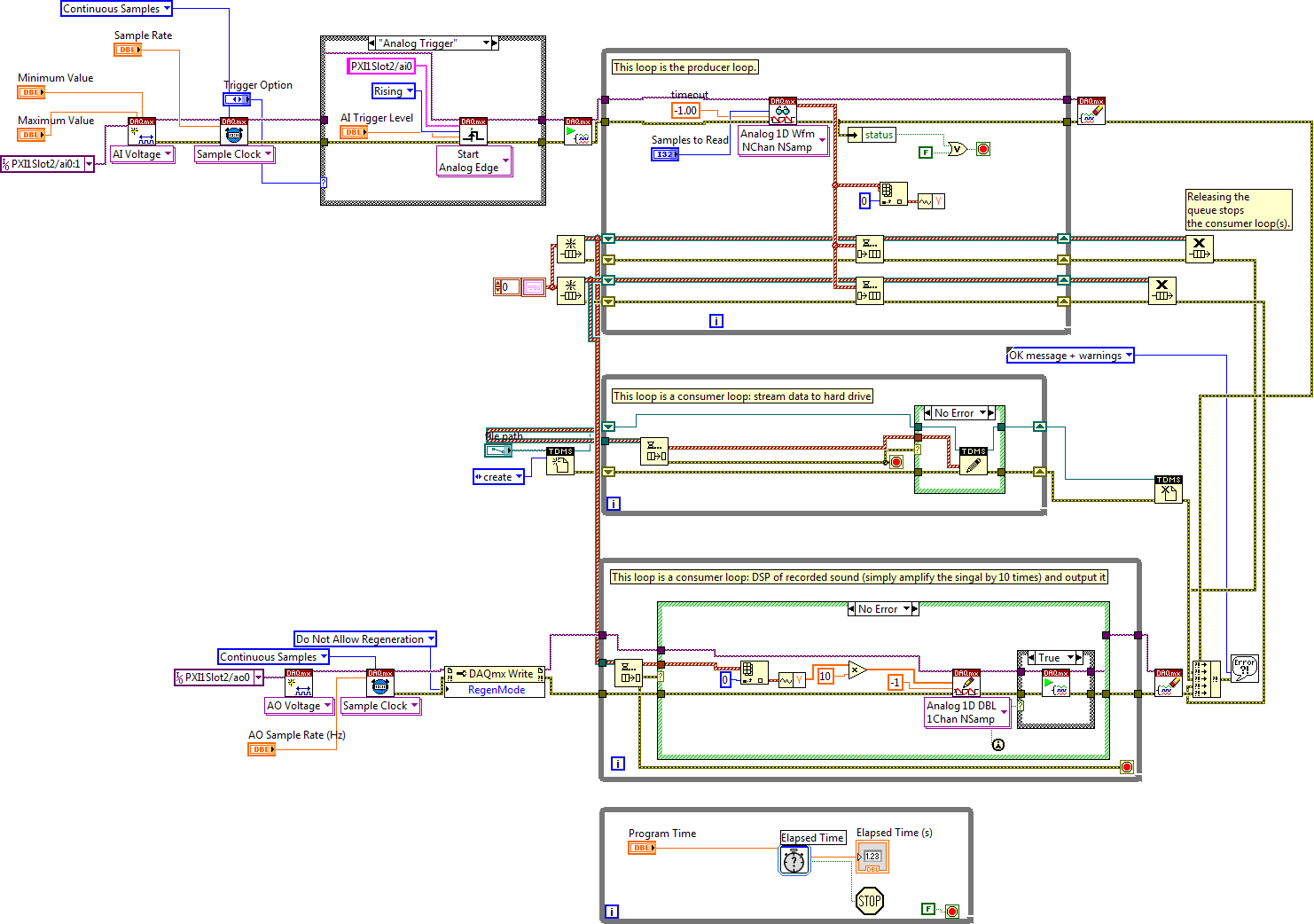

My task is to output recorded sounds after some signal processing, i.e. a real-time task. This is a routine task that many people work on, and it is not difficult to write a LabVIEW program for it. The really challenging part is achieving the minimum possible delay between the AI and AO tasks. The NI engineer suggested that I use a Producer/Consumer design. After searching online, I found a really nice example called "DAQmx Generate Acquired Data with Queues" (https://decibel.ni.com/content/docs/DOC-7202).
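For readers unfamiliar with the pattern, here is a rough text-language analogue of a Producer/Consumer design, written as a Python sketch because a LabVIEW block diagram can't be shown as text. One thread stands in for the acquisition (producer) loop and pushes blocks into a queue; a second thread stands in for the processing/output (consumer) loop. The block contents, the gain-of-2 "DSP", and the function names are all hypothetical placeholders, not DAQmx calls.

```python
import queue
import threading

SENTINEL = None  # marks the end of the stream so the consumer can stop


def producer(q, n_blocks, block_size):
    """Stand-in for the AI loop: each iteration 'reads' one block and enqueues it."""
    for i in range(n_blocks):
        block = [float(i)] * block_size  # hypothetical placeholder for a DAQmx read
        q.put(block)
    q.put(SENTINEL)


def consumer(q, out):
    """Stand-in for the DSP + AO loop: dequeues blocks as soon as they arrive."""
    while True:
        block = q.get()
        if block is SENTINEL:
            break
        processed = [2.0 * x for x in block]  # placeholder DSP: gain of 2
        out.append(processed)  # placeholder for a DAQmx write


def run(n_blocks=5, block_size=4):
    q = queue.Queue()
    out = []
    t_prod = threading.Thread(target=producer, args=(q, n_blocks, block_size))
    t_cons = threading.Thread(target=consumer, args=(q, out))
    t_cons.start()
    t_prod.start()
    t_prod.join()
    t_cons.join()
    return out
```

The queue decouples the two loops, so the acquisition loop never waits for processing or output to finish before starting its next read.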

Using queues, I can reach a minimum AI-AO delay of about 5 ms, which is 4-5 times faster than sequential programming without queues. I attribute this to my machine having a 4-core processor with PXIe bus connections. However, my project requires an even smaller AI-AO delay, about 2 ms.

After reading more about multicore programming, I found another example illustrating the idea of combining queues and pipelining (http://www.ni.com/white-paper/5899/en/). In Figure 10, the second while loop uses pipelining to make the signal processing more efficient. This might help if the DSP part were computationally heavy, which is not my current situation. Since a 5 ms delay is already fairly short, my best guess is that it is caused by the data-producer while loop, in particular by the DAQmx Read function. So I am wondering whether the pipelining idea can be applied to the DAQmx read task. Simply combining multiple DAQmx Read calls obviously causes errors.
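As a rough illustration of what pipelining with a shift register does, here is a sequential Python sketch (the stage functions are hypothetical placeholders). In each iteration, stage 2 works on the block that stage 1 produced in the *previous* iteration, held in a variable that plays the role of the shift register. In LabVIEW the two stages inside one iteration have no data dependency, so they can run on different cores; this Python version only shows the data flow, not the parallelism. Note what the output records: each block leaves the pipeline one iteration after it was acquired. In general, pipelining raises throughput but adds roughly one stage of latency, which may matter when the goal is a shorter AI-AO delay.

```python
def acquire(i):
    """Hypothetical stage 1 (e.g. an acquisition step): returns block i."""
    return [float(i)] * 3


def process(block):
    """Hypothetical stage 2 (e.g. DSP): adds 1.0 to every sample."""
    return [x + 1.0 for x in block]


def pipelined_loop(n_iterations):
    """Two-stage pipeline; 'prev' acts like a shift register between iterations.

    Returns (iteration_index, processed_block) pairs so the one-iteration lag
    between acquiring a block and finishing it is visible.
    """
    outputs = []
    prev = None  # shift register: holds stage-1 output from the previous iteration
    for i in range(n_iterations + 1):  # one extra iteration flushes the pipeline
        current = acquire(i) if i < n_iterations else None
        if prev is not None:
            outputs.append((i, process(prev)))
        prev = current
    return outputs
```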

I have posted a snapshot of my program so experts can better understand my problem. I also hope you have ideas on how to reduce the AI-AO delay further. I would very much appreciate it.

01-16-2016 07:07 PM


You posted in the Real Time Idea Exchange, but you aren't proposing any new ideas for a Real Time system. You need to post in the LabVIEW forum.

01-18-2016 11:45 AM


... and when you post a picture (but not a LabVIEW Snippet) of a large VI, you'll get scolded by the Resident "Attach Code" Nag (that would be me).

Please attach the VI (or, if multiple VIs, zip them together in a compressed folder and attach the Zip file), itself. Large VIs are difficult/impossible to see when reduced to fit the Forum. Also, we can't see the not-shown cases of Case statements, can't look for wiring mistakes (by jiggling the wires), can't edit the code, and can't try executing the code.

With actual code, we can poke, prod, test, and maybe be helpful quickly (we need to be quick, given what we are being paid to do this -- if you actually hired us, we could then afford to take our time and work slowly).

Having Beaten my Dead Horse, let me ask some questions about what I could see, and about your task. You want to synchronize an AI and an AO task. First question -- does the AO task involve data from the AI task, or are the data streams independent? What is the sampling rate for each Task? What is the buffer size for each task?

I assume you realize that if you are, say, taking 100 points at a time at 1 kHz, you will get data 10 times/second. If you want to "play back" the input data through an AO device, this "injects" an obligatory 100 msec delay between input and output (unless, of course, you use the special "Future-predicting Circuit" that NI has been secretly developing for this purpose).
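The arithmetic above can be written out as a small Python sketch (the function name is hypothetical): a block of N samples at rate f takes N/f seconds to acquire, so you get f/N reads per second, and that block time is a floor on the input-to-output delay for block-wise playback.

```python
def block_timing(samples_per_read, rate_hz):
    """Return (reads per second, time to fill one block in ms)."""
    reads_per_second = rate_hz / samples_per_read
    block_delay_ms = samples_per_read * 1000.0 / rate_hz
    return reads_per_second, block_delay_ms


# Bob's example: 100 samples per read at 1 kHz
print(block_timing(100, 1000))  # (10.0, 100.0)
```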

Look forward to being able to examine/test your VI, and having a better understanding of your question.

Bob Schor

01-18-2016 06:13 PM


Dear Bob,

First, thanks for your reply. My main goal is to reduce the AI-AO delay. At the moment, with the default settings, tested with a PXIe-6358 DAQmx board and a PXIe-8135 controller (4 cores, running Windows), the delay is 5 ms. More test results are noted on the Front Panel.

Yes, the AO task relies on the AI input, so this is truly playback. I have not specified the buffer size yet. Is it necessary?

I understand that the delay is determined by the ratio of the number of samples read per iteration to the sample rate. Here I am sampling at 1 MHz and reading 1000 samples each time, so the theoretical delay is 1 ms.
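Putting the numbers from this thread side by side makes the gap explicit. A minimal Python sketch (the function name is hypothetical; the 5 ms is the measured value reported above, not something the code derives): with 1000 samples per read at 1 MHz, the block time accounts for 1 ms, leaving about 4 ms of the measured delay to other parts of the chain, such as driver or buffer latency, loop scheduling, or the output side. Which of these dominates can't be told from the arithmetic alone.

```python
def delay_breakdown_ms(measured_ms, samples_per_read, rate_hz):
    """Split a measured AI-AO delay into block-acquisition time and the remainder."""
    theoretical_ms = samples_per_read * 1000.0 / rate_hz
    remainder_ms = measured_ms - theoretical_ms
    return theoretical_ms, remainder_ms


print(delay_breakdown_ms(5.0, 1000, 1_000_000))  # (1.0, 4.0)
```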

As for the attachment, I don't know why I can't attach it. I tried many times but always got this error message:

Correct the highlighted errors and try again.

- The attachment's test2.vi content type (application/octetstream) does not match its file extension and has been removed.

Just send me an email (luojh135@gmail), so I can email the attachment to you.

Best,

Jinhong

01-18-2016 08:22 PM


Is your VI part of a LabVIEW Project? If so, the VI and any sub-VIs are probably all in a Windows Folder. Compress the Folder, which creates a ZIP file, and attach the Zip file. I don't understand the message, except that your mailer may think a .vi file is some special format that the LabVIEW file doesn't match, and throws the error.

Bob Schor

01-19-2016 07:41 AM


No, it is not part of a project. I have no clue why it fails; I've spent more than 2 hours searching for an answer, with no success.

So I uploaded the code to Google Drive. You can access it via this link:

https://drive.google.com/file/d/0B0rv5WyOEK8iVDlVTFpINTBXVVE/view?usp=sharing