
memory manager optimization

10-07-2015 04:51 PM

I have just been looking at memory usage, not speed. That being said, a quick test shows it uses 3 MB, relative to the recent snippets.

mcduff

10-07-2015 05:10 PM

@mcduff wrote:

> I have just been looking at memory usage, not speed. That being said, a quick test shows it uses 3 MB, relative to the recent snippets.

My general point was there's no need for a DVR here; in fact, there's rarely a need for a DVR at all, particularly if it's just for carrying around an array. There's a reason DVRs weren't introduced until LabVIEW OOP: they were introduced mostly to make by-reference objects possible, and it's just a side effect that you can put any LabVIEW data type in them. That doesn't mean you should, though. From http://www.ni.com/white-paper/3574/en/: "Reference types should be used extremely rarely in your VIs."

The simplest LabVIEW code - the one using the minimum necessary number of nodes - is often the most efficient. When trying to improve performance, both memory and speed, you're more likely to succeed through subtraction rather than addition. Altenbach demonstrates this regularly in the optimized solutions he suggests on this forum, for example in this thread: http://forums.ni.com/t5/BreakPoint/Code-Miniatures/td-p/3134761

10-07-2015 05:29 PM

My use case may differ, and I am by no means a programmer or an expert, but I like DVRs for the following reason:

I usually have multiple loops in my application: one that handles instruments, one that handles data, one that handles the UI, etc. When I download large data sets from an instrument, I put that data in a DVR so that I am only sending a reference to the other loops, not a copy of the data. Within a loop, you are typically correct ...
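In text-based terms, a DVR behaves roughly like a shared handle to one buffer plus a lock that serializes access, with LabVIEW's In Place Element structure playing the role of the lock. The Python sketch below is only an analogy, not how LabVIEW implements DVRs; `SharedBuffer`, `modify` and `acquisition_loop` are hypothetical names. A worker thread stands in for the instrument loop, and only the handle crosses between "loops", never a copy of the samples.

```python
import threading
from array import array
from contextlib import contextmanager


class SharedBuffer:
    """Hypothetical DVR-like handle: one buffer plus a lock (an analogy only)."""

    def __init__(self, n):
        self._data = array('d', [0.0]) * n  # allocated once, never re-created
        self._lock = threading.Lock()

    @contextmanager
    def modify(self):
        # Rough analogue of an In Place Element structure on a DVR:
        # hold the lock and hand out the buffer itself, not a copy of it.
        with self._lock:
            yield self._data


seen_ids = []  # identity of the buffer each "loop" actually touched


def acquisition_loop(ref, blocks):
    for b in range(blocks):
        with ref.modify() as data:
            seen_ids.append(id(data))
            for i in range(len(data)):
                data[i] = float(b)  # stand-in for an instrument download


ref = SharedBuffer(1000)
worker = threading.Thread(target=acquisition_loop, args=(ref, 3))
worker.start()
worker.join()

with ref.modify() as data:  # the "UI loop" reads the latest block in place
    seen_ids.append(id(data))
    latest_total = sum(data)
```

Every access sees the same buffer object. Note that nothing stops a caller from holding on to `data` after the `with` block, which is one reason by-reference designs need discipline.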

> The simplest LabVIEW code - the one using the minimum necessary number of nodes - is often the most efficient.

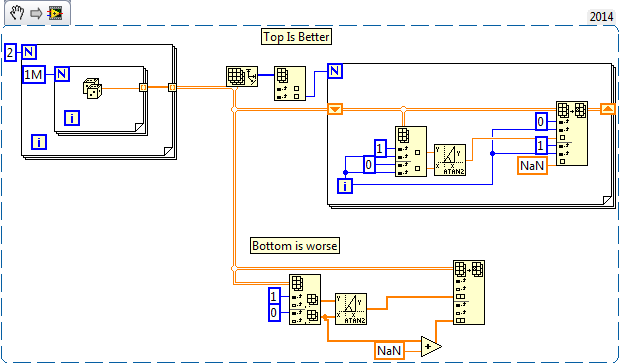

Look at the snippet: the top case is more complicated but uses less memory than the bottom case. (Run them separately; I was too lazy to make two snippets.)

Cheers,

mcduff

10-07-2015 05:59 PM

For sharing data between loops, yes, a DVR might make sense, but it's definitely not the only way to share data between loops without making a copy. Queues and functional global variables can accomplish the same goal.
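As an illustration of the queue alternative, here is a Python analogy (not LabVIEW code): `queue.Queue` hands the consumer the very array object the producer enqueued, so the samples are never duplicated. The block size, the `None` sentinel and the `producer`/`consumer` names are assumptions made for the sketch.

```python
import queue
import threading
from array import array

N = 100_000
handoff = queue.Queue(maxsize=4)  # bounded hand-off between the two loops
sent, received = [], []


def producer(blocks):
    for b in range(blocks):
        block = array('d', [float(b)]) * N  # one acquisition (illustrative)
        sent.append(block)  # kept only so identity can be checked afterwards
        handoff.put(block)  # enqueues a reference; the N samples are not copied
    handoff.put(None)  # sentinel: acquisition finished


def consumer():
    while True:
        block = handoff.get()
        if block is None:
            break
        received.append(block)


threads = [threading.Thread(target=producer, args=(3,)),
           threading.Thread(target=consumer)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

In a real design the producer would stop using `block` once it is enqueued, so that exactly one loop owns the data at a time.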

Maybe I over-simplified, but there was a reason I put the "often" qualifier there; I didn't mean to suggest that it's always the case. You do still need to evaluate the logic. Adding NaN may seem like a simple way to turn an entire array into NaN, but initializing an array of the same size to NaN would be better. More importantly, to evaluate the simplicity and effectiveness of this code, we'd need to know what the desired result is. Quite possibly neither of these versions is the best option (if I wanted a 2x1m array where one row is all NaN, I wouldn't do it either of these ways).
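The NaN point in array terms: "add NaN" has to read every existing element and do arithmetic on it only to discard the value, while "initialize to NaN" just writes. In LabVIEW the second form would be Initialize Array wired with a NaN constant and the length; the Python sketch below (function names are mine, not from the thread) shows the same contrast with the standard `array` module.

```python
import math
from array import array


def nan_by_addition(data):
    # Reads every element and does an addition whose result is always NaN.
    return array('d', (x + math.nan for x in data))


def nan_by_initialization(n):
    # Writes only: a single NaN repeated n times; the source data is never read.
    return array('d', [math.nan]) * n


samples = array('d', [1.0, -2.5, math.inf])
a = nan_by_addition(samples)
b = nan_by_initialization(len(samples))
```

Both give an all-NaN array of the same length (even `inf + NaN` is NaN); only the first one touches the input.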

10-07-2015 06:14 PM

> we'd need to know what the desired result is. Quite possibly neither of these versions is the best option (if I wanted a 2x1m array where one row is all NaN, I wouldn't do it either of these ways).

Quite true. In this case, the user can look at I & Q data, I^2+Q^2 data, phase, unwrapped phase, or the magnitude of the FFT of the unwrapped phase.

I am reusing the same buffer over and over: it has two data rows, I and Q, coming directly from a DAQmx device. Sometimes the user wants to plot both I and Q; other times the user wants to see the phase. In that case, one row is set to NaN so that it is not plotted. The original data is not saved, so there is no reason to keep it. There is probably a better way, though. (This way I do not need to reshape my buffer, nor do I need to switch to the UI thread to update a plot reference.)
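The reuse scheme described above (one 2 x N buffer, with the unused row overwritten by NaN so the graph skips it) can be sketched in Python as follows. The row layout, `N`, and the function names are assumptions; `load_iq` is a hypothetical stand-in for a DAQmx read, and phase unwrapping and the FFT view are left out. Only the idea of overwriting rows in place comes from the post.

```python
import math
from array import array

N = 4  # samples per read; a real acquisition would be far larger

# Allocated once: row 0 holds I, row 1 holds Q.
buf = [array('d', [0.0]) * N, array('d', [0.0]) * N]


def load_iq(buf, i_samples, q_samples):
    # Hypothetical stand-in for a DAQmx read into the existing rows.
    for k in range(N):
        buf[0][k] = i_samples[k]
        buf[1][k] = q_samples[k]


def to_phase(buf):
    # Phase replaces I in row 0; row 1 becomes NaN so it is not plotted.
    for k in range(N):
        buf[0][k] = math.atan2(buf[1][k], buf[0][k])
        buf[1][k] = math.nan


def to_power(buf):
    # I^2 + Q^2 replaces I in row 0; row 1 becomes NaN.
    for k in range(N):
        i, q = buf[0][k], buf[1][k]
        buf[0][k] = i * i + q * q
        buf[1][k] = math.nan
```

Each view overwrites the rows it no longer needs, so switching views never allocates or reshapes the buffer.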

Cheers,

mcduff

10-08-2015 06:51 AM

You are right, I do tend to attribute magical powers to the IPE (In Place Element structure).

Now, since I (hopefully) have your attention, and that of mcduff, on the topic of this thread: is it possible to infer, indirectly, the amount of memory resizing and/or the number of calls to the memory manager at run time, using e.g. the "Performance and Memory" profile tool?

At the core of all of this, is a legit desire to reduce calls to the memory manager. In a perfect world, my code would, after initialization and warm-up, never have any changes to its memory usage, everything would be pre-allocated and no further memory allocation or deallocations would ever be made.
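One common way to approximate "allocate everything at start-up, nothing afterwards" is a fixed pool of buffers created during initialization and recycled at run time. This is a generic Python sketch, not an NI API; `BufferPool`, the pool sizes, and the choice to fail loudly when the pool runs dry are all assumptions.

```python
from array import array
from collections import deque


class BufferPool:
    """Hypothetical fixed pool: all buffers are created during initialization."""

    def __init__(self, count, size):
        # The only place this pool asks for buffer memory.
        self._free = deque(array('d', [0.0]) * size for _ in range(count))

    def acquire(self):
        if not self._free:
            # Growing here would be a run-time allocation; fail loudly instead.
            raise RuntimeError("buffer pool exhausted")
        return self._free.popleft()

    def release(self, buf):
        self._free.append(buf)  # hand the same memory back for reuse


pool = BufferPool(count=2, size=1024)
seen = set()
for k in range(1000):
    buf = pool.acquire()
    seen.add(id(buf))
    buf[0] = float(k)  # "process" one block in place
    pool.release(buf)
```

A thousand iterations cycle through the same two buffers. The Python interpreter still allocates small objects (such as ints) behind the scenes, so this shows the pattern, not a zero-allocation guarantee.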

Ignoring that this is practically impossible, what I frequently want to measure is "how often, or how many times in a typical run, will xyz (a subVI) cause calls to the memory manager?" None of the tools I know of (the RT trace toolkit, Profile Performance and Memory) seem to offer this directly. When profiling memory, the tool shows me min, average and max blocks, which is probably the closest thing: if min, avg and max differ, I know that the code is dynamic to some degree, but that does not give a full picture. It would be AWESOME if this or another tool had a column for "number of allocs/deallocs" (or some variation of that phrase).

Still, if min, avg and max blocks are close or identical, I can at least infer that the code is relatively static size-wise, unless for some reason it keeps moving the same amount of blocks around in memory.
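Outside LabVIEW, the same per-call question (how much does one call allocate, and is it the same every time?) can be asked of Python code with the standard `tracemalloc` module. The sketch below records the peak traced bytes during each call and then summarizes them as min/avg/max over repeated calls, mirroring the min/avg/max-blocks reasoning above. It measures bytes, not a count of allocator calls. `tracemalloc.reset_peak()` assumes Python 3.9 or later, and the two measured functions are made-up examples.

```python
import tracemalloc
from array import array

N = 100_000


def fresh_buffer():
    # Allocates a new N-element buffer on every call.
    return array('d', [0.0]) * N


REUSED = array('d', [0.0]) * N  # allocated once, before tracing starts


def reuse_buffer():
    # Overwrites the existing buffer in place.
    for i in range(N):
        REUSED[i] = 1.0


def per_call_peaks(fn, calls):
    """Peak traced bytes during each call of fn, relative to just before it."""
    peaks = []
    tracemalloc.start()
    try:
        for _ in range(calls):
            base, _ = tracemalloc.get_traced_memory()
            tracemalloc.reset_peak()
            fn()
            _, peak = tracemalloc.get_traced_memory()
            peaks.append(peak - base)
    finally:
        tracemalloc.stop()
    return peaks


def summarize(peaks):
    return min(peaks), sum(peaks) / len(peaks), max(peaks)
```

For example, `summarize(per_call_peaks(fresh_buffer, 10))` should report at least 8 * N bytes per call, while the in-place version stays far below that.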

Input or thoughts would be sooo appreciated!

-------------

CLD LabVIEW 7.1 to 2016

10-08-2015 10:49 AM

Have you tried the new Profile Buffer Allocations tool? You can find exactly where in the VI the allocation is taking place.

The "Performance and Memory" profile tool does not always seem accurate, which is why I also look at Task Manager, but it is not a bad metric.

Good Luck.

mcduff

10-08-2015 11:53 AM

Why is your concern the number of allocations and deallocations? Allocating memory is fast (and is constant-time, within reason, regardless of the amount of memory); copying memory is slow (proportional to the amount of data to be copied). If your goal is performance, you should be looking at ways to reduce the number of times that you copy data from one location to another, regardless of when that memory is allocated. Of course, not surprisingly, if you're successful at this, you'll also reduce the number of allocations, because you'll be reusing more memory. You could have code that uses a constant amount of memory but moves the data so many times that it doesn't perform well.
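An illustration of that last sentence: both buffers below hold a fixed "last n samples" window and never change size, so both show the same constant memory footprint. However, the shifting version copies n - 1 elements for every new sample, while the circular version copies none. The classes and the `copies` counter are hypothetical and written in Python for brevity. The counter makes the data movement visible even though no new memory is allocated.

```python
from array import array


class ShiftBuffer:
    """Fixed-size history that shifts every element on each insert."""

    def __init__(self, n):
        self.data = array('d', [0.0]) * n
        self.copies = 0

    def push(self, x):
        d = self.data
        for i in range(len(d) - 1):  # move everything one slot left
            d[i] = d[i + 1]
            self.copies += 1
        d[-1] = x

    def ordered(self):
        return list(self.data)


class RingBuffer:
    """Same window, but only an index moves; old data stays put."""

    def __init__(self, n):
        self.data = array('d', [0.0]) * n
        self.head = 0  # slot that holds the oldest sample
        self.copies = 0

    def push(self, x):
        self.data[self.head] = x  # overwrite the oldest sample
        self.head = (self.head + 1) % len(self.data)

    def ordered(self):
        # Oldest first; copying happens only when someone reads the window.
        return list(self.data[self.head:]) + list(self.data[:self.head])
```

After the same sequence of pushes both return identical windows, but only the ring buffer avoids moving the data on every sample.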

I like the Show Buffer Allocations tool, but it requires some understanding and acceptance of its limitations. You probably can't eliminate all buffer allocations within a loop - and for scalars, small clusters, and subarrays I wouldn't bother - but you can work to reduce them, particularly any that are not the same size on every iteration. Generally, if there's a buffer allocation inside a loop but it stays the same size each cycle, then it's only a one-time buffer allocation (but a memory copy each time). There may also be situations where LabVIEW shows a buffer allocation that will never actually occur, if you know (but the compiler doesn't) that there is a limit to a set of inputs. For example, say you have a case structure with a default case that will never be reached. LabVIEW will show buffers allocated for the default case outputs, but that case will never run.

10-08-2015 12:31 PM

Part of what you are saying is at odds with what the internal group within NI that deals with RT systems told me: anytime you hit the memory manager on (VxWorks? older?) cRIO targets, you incur a fairly substantial penalty.

To make it worse, after drivers and the application VIs are loaded, I often find myself with around 30 MiB of free memory. Besides reducing the (supposed) performance hit, avoiding calls to the memory manager mainly does one thing: it makes it faster and easier to test the overall application for memory leaks.

I still remember when I was greener still, and we started getting calls that our systems would freeze after about 3 months of working perfectly. It took me another 2 months to develop my skills at this type of troubleshooting, isolate the code module and start doing accelerated testing. That particular problem was a 4-byte leak in the NI 'Get Volume info.vi' function, in a particular corner case (so not on every call). That occasional 4-byte leak would fragment the memory until the largest contiguous block was too small for the cRIO to operate. Back then, memory usage would swing around A LOT due to some ... less than ideal ... handling of data, which was part of the problem: it would take a very, very long time (a couple of weeks) before you could spot a trend with enough accuracy to say 'yup, it is still leaking' or 'nope, the leak is gone'.

Since then, I have found and documented several memory leaks in various APIs. Most of them are tiny but build up over months, especially since our target is already memory constrained. I spend a fair bit of time just running (accelerated and normal) test cases to verify and validate, and as mentioned, the fewer fluctuations there are in my normal code, the faster it is to spot deviations. 🙂
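One way to make "is it still leaking?" concrete is to fit a straight line to evenly spaced used-memory samples and ask whether the slope clears its own noise. The standard error of a least-squares slope grows with the scatter around the trend, so quieter code lets the same leak rate show up from fewer samples. This check and its 3-standard-error threshold are my own illustration, not something from the thread or an NI tool. It assumes roughly independent scatter around a linear trend.

```python
import math


def leak_slope(samples):
    """Least-squares slope of evenly spaced samples and its standard error."""
    n = len(samples)
    if n < 3:
        raise ValueError("need at least 3 samples")
    t_mean = (n - 1) / 2
    y_mean = sum(samples) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(samples))
    slope = sxy / sxx
    intercept = y_mean - slope * t_mean
    ssr = sum((y - (intercept + slope * t)) ** 2 for t, y in enumerate(samples))
    return slope, math.sqrt(ssr / (n - 2) / sxx)


def looks_like_leak(used_memory_samples, k=3.0):
    # Flag a leak only when the upward trend is k standard errors above zero.
    slope, se = leak_slope(used_memory_samples)
    return slope > k * se
```

With the same underlying leak rate and the same number of samples, a series with small swings is flagged while one with large swings is not. This matches the experience above that wildly fluctuating memory usage took weeks to diagnose.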

10-08-2015 12:32 PM

You know, it appears we've had this discussion before:

https://forums.ni.com/t5/LabVIEW/buffer-allocation-and-minimizing-memory-allocation/td-p/2314474

http://forums.ni.com/t5/LabVIEW/avoiding-data-memory-duplication-in-subVI-calls/td-p/2315880