
Taking samples every exact second

02-21-2016 03:31 AM


Hello, guys!

I'm super new to LabVIEW, and I've run into a problem that I can't resolve.

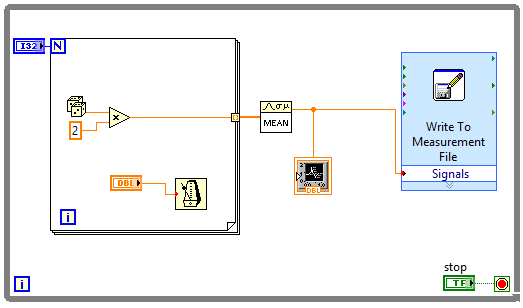

Can you take a look at the example:

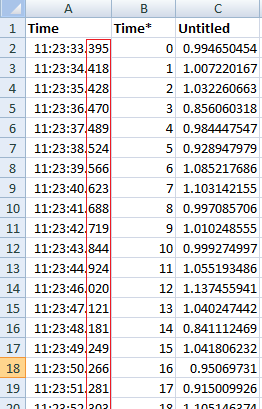

I want to find the mean value of 40 points and show it on the chart, then take the mean value of the next 40 points, and so on. I've set the time for taking 1 point to 25 ms, so 40 points should take 1 second, right? These are the results:

I really need my data to be taken every exact second. My question is: can I avoid this fluctuation in the milliseconds somehow, or is it not possible? I don't even know if I'm using the right VIs. 😄

I'm using LabVIEW 8.5.

Thanks!
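A minimal Python sketch of the block averaging described in the question, with random numbers standing in for the acquired points as in the original VI. The names `block_means`, `SAMPLES_PER_BLOCK` and `SAMPLE_PERIOD_MS` are illustrative, not LabVIEW functions. Note that 40 × 25 ms = 1000 ms only holds nominally: each software wait can overrun, so a block of 40 software-timed points can take longer than one second.

```python
import random
from statistics import fmean

SAMPLES_PER_BLOCK = 40   # points averaged into one chart value
SAMPLE_PERIOD_MS = 25    # intended time between points

# Nominal block duration: 40 * 25 ms = 1000 ms (assumes every wait is exact)
NOMINAL_BLOCK_MS = SAMPLES_PER_BLOCK * SAMPLE_PERIOD_MS


def block_means(samples, block_size=SAMPLES_PER_BLOCK):
    """Mean of each consecutive, non-overlapping block; an incomplete tail is dropped."""
    full = len(samples) - len(samples) % block_size
    return [fmean(samples[i:i + block_size]) for i in range(0, full, block_size)]


if __name__ == "__main__":
    readings = [random.random() for _ in range(120)]  # stand-in for 3 s of data
    print(NOMINAL_BLOCK_MS, block_means(readings))
```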

02-21-2016 04:26 AM - edited 02-21-2016 04:30 AM


What you see is the effect of a non-real-time OS. You cannot get better accuracy using Windows. What is your final goal here? Do you want to do data acquisition? There are DAQ products from NI that have onboard clocks, so you can get more accurate timing.

edit: if you want to do DAQ with hardware, I would also advise using a producer/consumer design: you only acquire data in the producer loop, and you calculate the mean values and save the data to file in the consumer loop. This way, the OS file operations and the mean calculation will not occasionally disturb the data acquisition.
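A minimal sketch of the producer/consumer idea, written in Python with a thread-safe `queue.Queue` playing the role of a LabVIEW queue. The producer only acquires (here `random.random()` stands in for a hypothetical DAQ read) and the consumer does the averaging; in a real program the consumer is also where the file write would go. Python threads are only an analogy for LabVIEW's parallel loops, not a timing-accurate replacement.

```python
import queue
import random
import threading
from statistics import fmean

BLOCK = 40   # samples per mean value
STOP = None  # sentinel telling the consumer to finish


def producer(q, n_samples):
    """Acquire only: push raw samples and nothing else (random numbers stand in for a DAQ read)."""
    for _ in range(n_samples):
        q.put(random.random())
    q.put(STOP)


def consumer(q, results):
    """Average blocks off the acquisition path (a real program would also save here)."""
    block = []
    while True:
        item = q.get()
        if item is STOP:
            break
        block.append(item)
        if len(block) == BLOCK:
            results.append(fmean(block))
            block.clear()


def run(n_samples=200):
    q = queue.Queue()
    results = []
    c = threading.Thread(target=consumer, args=(q, results))
    p = threading.Thread(target=producer, args=(q, n_samples))
    c.start()
    p.start()
    p.join()
    c.join()
    return results
```

Because the queue decouples the two loops, a slow save in the consumer only makes the queue grow temporarily; it does not delay the next read in the producer.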

02-21-2016 07:11 AM - edited 02-21-2016 07:13 AM


"I really need my data to be taken every exact second."

Another thing that is important to emphasize: no technology can give you sampling at exactly every second. Different timing accuracies are reachable, depending on the actual configuration and technology used. On Windows you will usually get an accuracy of a few milliseconds, depending on the code. The question is what kind of measurement you want to do, and what timing accuracy it requires. Milliseconds? Microseconds? Nanoseconds? Picoseconds...?
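If you want to put a number on the software-timing accuracy of your own PC, a quick Python sketch like the one below measures how far each requested sleep overshoots. It says nothing about LabVIEW's own timers; it only illustrates that a non-real-time OS returns from a wait at some variable time at or after the request. `measure_sleep_overshoot` is a hypothetical helper name.

```python
import time
from statistics import fmean


def measure_sleep_overshoot(period_s=0.025, iterations=40):
    """Request `period_s` of sleep `iterations` times and return each overshoot in ms."""
    overshoot_ms = []
    for _ in range(iterations):
        t0 = time.perf_counter()
        time.sleep(period_s)
        overshoot_ms.append((time.perf_counter() - t0 - period_s) * 1000.0)
    return overshoot_ms


if __name__ == "__main__":
    o = measure_sleep_overshoot()
    print(f"overshoot: min {min(o):.3f} ms, mean {fmean(o):.3f} ms, max {max(o):.3f} ms")
```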

Some related readings:

http://digital.ni.com/public.nsf/allkb/8F35B8099427B48686257A8B003A72D8

02-21-2016 01:06 PM - edited 02-21-2016 01:08 PM


Your times in the file are meaningless, because they don't even correspond to the time the data was taken (it seems they are created during the saving operation, because your code does not generate any timestamps).

How precise do the times need to be? Are you worried about correct times, or about the cosmetics of showing varying fractional seconds?

You are currently using random numbers. I assume that you will later use real DAQ hardware, at which point you can do everything hardware-timed.
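A sketch of the fix implied here: take the timestamp at the moment each value is read and carry it with the value, so the later save step only formats what was recorded. This is Python with a hypothetical `read` callable standing in for the instrument read; with hardware timing, the stamp would ideally come from the device rather than the PC clock.

```python
import time
from dataclasses import dataclass


@dataclass
class Sample:
    t: float      # wall-clock time (s since epoch) captured right at the read
    value: float


def acquire(read, n):
    """Read n values, stamping each one immediately after it is read."""
    samples = []
    for _ in range(n):
        value = read()
        samples.append(Sample(time.time(), value))
    return samples


def to_csv_lines(samples):
    """Format stored samples for saving; no new timestamps are created here."""
    return [f"{s.t:.3f},{s.value}" for s in samples]
```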

@stoyk0v wrote: I really need my data to be taken every exact second. My question is: can I avoid this fluctuation in the milliseconds somehow, or is it not possible? I don't even know if I'm using the right VIs. 😄

Please define the term "exactly" (seconds, milliseconds, nanoseconds, picoseconds, ....?). That is a very soft term without context. How quickly is the signal actually changing? What kind of analog filters are in place? What is the response time of the entire hardware?

Your loop time cannot be very precise if file IO is part of it. Saving should be done asynchronously.

02-22-2016 09:27 AM


The others are right, but I wanted to mention that if you have a 1 second wait, that doesn't mean your loop will take one second to execute; it means it will take at least one second. You have a write to disk that takes some time after your wait, so your loop period will be longer than that.

In addition, since you are on Windows, your timing is imprecise anyway. Have you ever had Windows just lock up for a few seconds, or had your keyboard or mouse stop responding? What do you think your program will do if Windows locks up like this? My point is that the hardware should be taking the samples and timestamping them for you. Then you get the readings and log them. Whether you log once every hour or once every second doesn't matter, because your log will be given the data with the time for each sample. You can see this demonstrated in several shipped examples: go to Help >> Find Examples and search for Voltage - Continuous Input.
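To make the "at least" point concrete, here is a small simulated-clock sketch in Python (integer milliseconds, no real waiting, and no OS jitter). In the first loop the work and a relative wait run one after the other, so every period is work + wait and the start times drift. The second loop mimics the idea behind LabVIEW's Wait Until Next ms Multiple, which wakes when the millisecond timer reaches a multiple of the given value, so the start times stay on the 1000 ms grid as long as the work fits inside the period. Both function names are hypothetical.

```python
def starts_with_relative_wait(wait_ms, work_ms, iterations):
    """Loop body = work, then a plain wait: each period is work_ms + wait_ms."""
    t = 0
    starts = []
    for _ in range(iterations):
        starts.append(t)
        t += work_ms   # e.g. the file write
        t += wait_ms   # a wait is a minimum; it never shortens to compensate
    return starts


def starts_with_next_multiple(period_ms, work_ms, iterations):
    """Loop body = work, then sleep until the timer hits the next multiple of period_ms."""
    t = 0
    starts = []
    for _ in range(iterations):
        starts.append(t)
        t += work_ms
        t = (t // period_ms + 1) * period_ms   # next multiple strictly after t
    return starts


if __name__ == "__main__":
    print(starts_with_relative_wait(1000, 30, 4))   # drifts by 30 ms per iteration
    print(starts_with_next_multiple(1000, 30, 4))   # stays on the 1000 ms grid
```

If the work ever takes longer than one period, the second loop skips to the following multiple, so an overrun costs a whole period instead of shifting every later sample.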

Unofficial Forum Rules and Guidelines

Get going with G! - LabVIEW Wiki.

16 Part Blog on Automotive CAN bus. - Hooovahh - LabVIEW Overlord

02-24-2016 01:36 PM


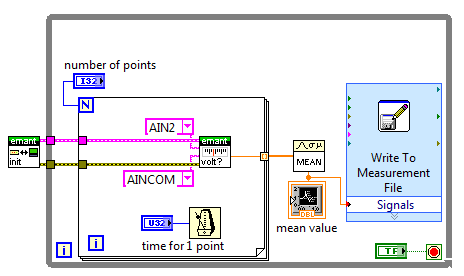

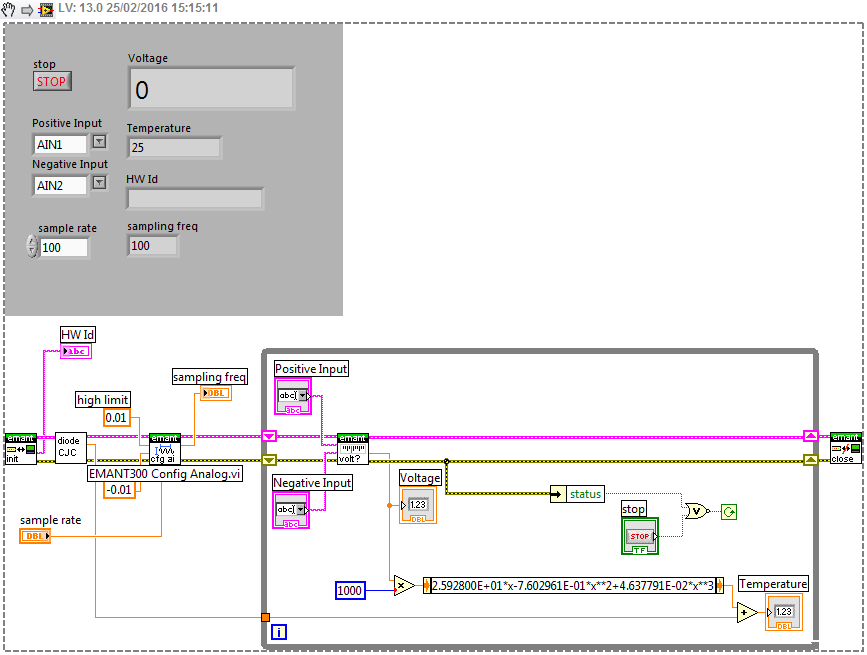

I need to write a simple program that will measure and display the temperature of a circuit breaker. The measurement will be done with a K- or J-type thermocouple and an EMANT300 device. The plan is to gather 40 or 50 points every second, find their mean value and display it, then gather another 40 or 50 points the next second and display their mean value, and so on. I'm trying to do all that with this code (it's just the part of the code that I have a problem with; later I will need to convert the voltage into temperature):

So I needed to understand whether I can avoid the varying intervals that I showed in my first post. I guess I can't without special hardware.

If you have suggestions for a better code, please share them. Thanks !

02-24-2016 02:37 PM


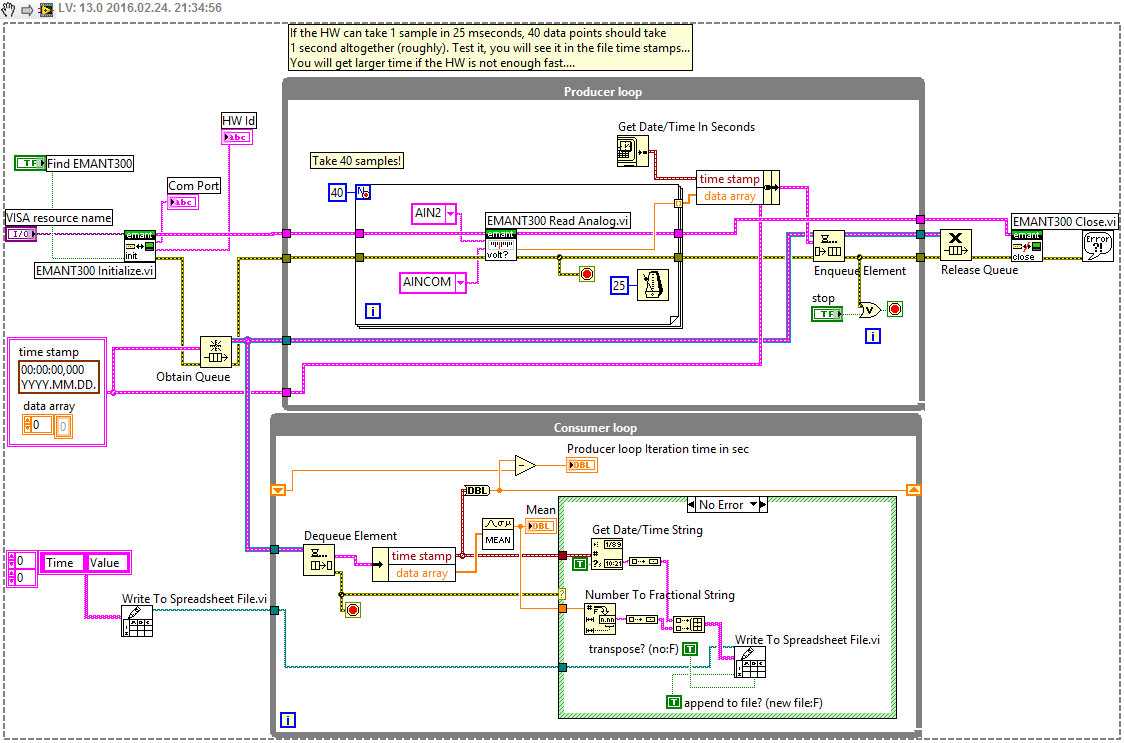

Actually, I am not sure whether you can collect 40 or 50 data points per second. You can check this by requesting samples and measuring the total time of a full iteration. Use a producer/consumer design for this application. If the hardware cannot take a sample every 20 or 25 ms, then you have to live with a lower sampling rate, like 10 samples per second... Anyway, you have the HW, so you have to test this.
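The check suggested here can be sketched as follows: time a batch of back-to-back single reads and see whether the achieved rate clears 40 or 50 samples per second. This is a Python sketch; `read_sample` is a hypothetical stand-in for whatever single-point read the driver provides, and the simulated read in the example just sleeps.

```python
import time


def achieved_rate(read_sample, n=50):
    """Call read_sample n times back to back and return the achieved samples per second."""
    t0 = time.perf_counter()
    for _ in range(n):
        read_sample()
    return n / (time.perf_counter() - t0)


def highest_feasible(rate_sps, candidates=(50, 40, 20, 10)):
    """Pick the highest candidate rate the measured rate can sustain, or None."""
    for c in candidates:
        if c <= rate_sps:
            return c
    return None


def simulated_read():
    time.sleep(0.03)  # hypothetical 30 ms per read


if __name__ == "__main__":
    r = achieved_rate(simulated_read, n=20)
    print(f"{r:.1f} samples/s -> use {highest_feasible(r)} samples/s")
```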

Here is a snippet; download it and put it into your block diagram (BD):

02-25-2016 07:27 AM


Well, you're going to need some kind of I/O hardware to interact with the real world, so make sure it's something with a hardware sample clock to time your reads. Even a simple Arduino reading the thermocouples would be pretty damn accurate. And if there's a few ms of jitter on your samples, is the thermocouple capable of responding to changes quickly enough for that to even be noticeable in your acquired data? I doubt it.
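A back-of-the-envelope way to check this: to first order, sampling a signal slightly early or late changes the reading by roughly (rate of change) × (timing error). The numbers below are hypothetical, picked only to show the arithmetic; plug in your own breaker's heating rate and your measured jitter.

```python
def jitter_error(slope_per_s, timing_error_s):
    """First-order reading error from sampling timing_error_s off schedule
    on a signal changing at slope_per_s (signal units per second)."""
    return abs(slope_per_s) * timing_error_s


if __name__ == "__main__":
    # Hypothetical: temperature rising 0.5 degC/s, samples 5 ms off schedule
    print(f"{jitter_error(0.5, 0.005):.4f} degC")  # 0.0025 degC
```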

02-25-2016 08:24 AM - edited 02-25-2016 08:48 AM


Yep, if the temperature changes slowly, there is no real need to sample fast. You could just take one sample every second; that would probably be just fine. By the way, I have checked the manual of your device: http://emant.com/251004.page

These are the available rates, so the device should easily be able to take 40 or 50 samples per second (unless you want the 22-bit ADC...):

- Single channel 22 bit @ 10 samples/sec

- Single channel 16 bit waveform @ 2500 samples/sec (max)
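The arithmetic behind that conclusion, as a tiny Python sketch using the two rates quoted above from the EMANT300 manual (the dictionary keys are just labels): collecting n samples at r samples/s takes n / r seconds, so the 22-bit mode would need 4 s for 40 points, while the 16-bit waveform mode at its maximum rate needs only 20 ms for 50.

```python
# Rates as quoted above from the EMANT300 manual (samples per second)
RATES_SPS = {
    "22-bit single channel": 10,
    "16-bit waveform (max)": 2500,
}


def seconds_to_collect(n_samples, rate_sps):
    """Time needed to collect n_samples at a fixed rate."""
    return n_samples / rate_sps


if __name__ == "__main__":
    for mode, rate in RATES_SPS.items():
        for n in (40, 50):
            t = seconds_to_collect(n, rate)
            fits = "fits" if t <= 1.0 else "does not fit"
            print(f"{mode}: {n} samples take {t:.3f} s ({fits} in 1 s)")
```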

Actually, the DAQ device looks pretty nice, with lots of options (for only 100 USD...). Can you just connect a thermocouple directly to it? So it has a built-in amplifier, I guess?

The VI I posted earlier is not correct. I downloaded the LV driver package for the device, and I would like to bring your attention to this example VI from the package. I also modified my example snippet (note that the HW should do the timing according to the rate settings, so I deleted the software timing (Wait Until Next ms Multiple function) from the FOR loop!). I cannot test these VIs without the device, but you can.

EDIT: Just a note: it would be more elegant (and, at even higher DAQ rates, the only option) not to use the FOR loop with the single-value VI, but to use the VI called "EMANT300 Driver.llb\EMANT300 Read Analog 16 bit Waveform.vi". There you can specify the number of samples. More importantly, you would also get timestamps in the waveform created by the hardware, so you would have more accurate timing info for your measurement. Just keep this in mind if you need to measure a higher-rate analog signal with this HW in the future...
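A LabVIEW waveform bundles a start time t0, a sample interval dt and the array of values Y, so per-sample times follow from t0 + i·dt rather than from when the PC happened to process the data. The Python dataclass below only mirrors that idea for illustration; it is not the EMANT driver's output format, and the values in the example are made up.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Waveform:
    t0: datetime    # time of the first sample
    dt: float       # seconds between samples (from the hardware clock)
    y: List[float]  # sample values

    def timestamps(self):
        """Per-sample times: t0 + i * dt."""
        return [self.t0 + timedelta(seconds=i * self.dt) for i in range(len(self.y))]

    def mean(self):
        return sum(self.y) / len(self.y)


if __name__ == "__main__":
    # Made-up example: 40 points at 25 ms spacing
    w = Waveform(datetime(2016, 2, 25, 12, 0, 0), 0.025, [1.0] * 40)
    ts = w.timestamps()
    print(ts[0], ts[-1], w.mean())
```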

EMANT example (EMANT300 Example Thermocouple.VI):

My modified VI:

02-25-2016 12:59 PM


Just to be different...

A timed loop CAN give some surprising determinism under Windows if you manage the system and talk nice to the PC.

I have achieved 1000 Hz under LV7 with NO "finished late" errors using a hardware timing source.

So 100 Hz should be doable if you use a producer/consumer architecture and you don't do anything stupid while the code is running.

Ben
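The "finished late" idea can be sketched with a simulated clock in Python: iteration k has a deadline at (k + 1) × period, and it counts as late if its work ends after that deadline. The work durations are made-up inputs, a late iteration here simply lets the next one start right away, and LabVIEW's Timed Loop has its own configurable handling of late iterations that this does not model. `count_late` is a hypothetical name.

```python
def count_late(period_ms, work_ms):
    """Simulate a fixed-period loop and count iterations that overrun their period."""
    late = 0
    t = 0  # simulated clock, ms
    for k, w in enumerate(work_ms):
        start = max(t, k * period_ms)   # wait for the scheduled start, unless already behind
        end = start + w
        if end > (k + 1) * period_ms:   # missed this iteration's deadline
            late += 1
        t = end
    return late


if __name__ == "__main__":
    # 100 Hz loop (10 ms period), one iteration hit by a hypothetical 12 ms stall
    print(count_late(10, [2, 3, 12, 1, 2]))  # 1
```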