Parallel program not using more than 1 CPU

05-17-2013 03:31 PM

Hello,

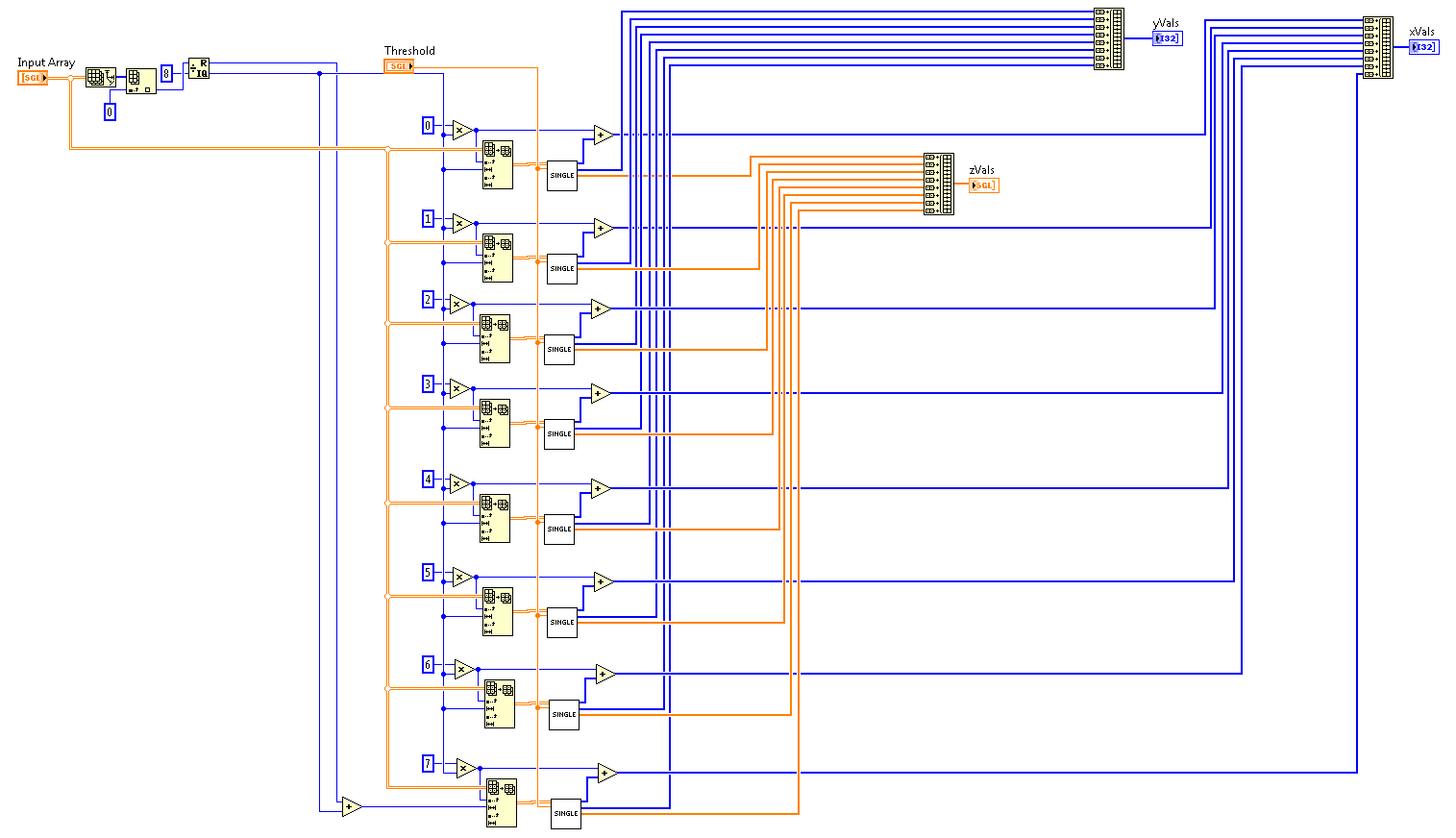

I have a program that is supposed to look through all of the rows of a very large 2D array (with dimensions M by N). For each row, I would like to get either the "shallowest" point that is greater than a threshold (i.e. the one closest to element 0) or the deepest point that is greater than a threshold (i.e. the one closest to element N-1). Once I find my value, I add the row index, the column index and the value to a 1D array (I've tried both using the "Build Array" function and preallocating the array and removing the elements that didn't get filled).
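As a point of reference, here is a minimal plain-Python sketch of the serial algorithm described above (LabVIEW is graphical, so this is only an analogy). The function name `find_hits`, the three parallel output lists, and the sample data are hypothetical; the strict `>` comparison follows the wording of the post.

```python
def find_hits(data, threshold, deepest=False):
    """For each row, find the shallowest (or deepest) element > threshold.

    Returns three parallel lists (row indices, column indices, values);
    rows with no element above the threshold contribute nothing.
    """
    rows, cols, vals = [], [], []
    for i, row in enumerate(data):
        # Search from element 0 upward, or from element N-1 downward.
        order = range(len(row) - 1, -1, -1) if deepest else range(len(row))
        for j in order:
            if row[j] > threshold:
                rows.append(i)
                cols.append(j)
                vals.append(row[j])
                break  # only the first match in search order is kept
    return rows, cols, vals


data = [[0.1, 0.7, 0.9],
        [0.2, 0.3, 0.1],
        [0.8, 0.1, 0.6]]
print(find_hits(data, 0.5))                # ([0, 2], [1, 0], [0.7, 0.8])
print(find_hits(data, 0.5, deepest=True))  # ([0, 2], [2, 2], [0.9, 0.6])
```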

This operation is not automatically parallelized by parallel FOR loops because of the case structures within the loops (the shift registers might be an issue too), but I should be able to divide up the input array and parallelize it as is done here (note: I also recreated this example myself as a sanity check that I could get >12.5% CPU usage on an 8-core machine). I have created a subVI for the main "look for values and build array" portion and made it reentrant. However, after doing all of this, I am still not seeing more than 12.5% CPU utilization. I have attached some example VIs to help explain.
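The divide-and-conquer idea (split the rows into blocks and hand each block to its own call of a reentrant subVI) can be sketched in Python as follows. This is an analogy under stated assumptions, not LabVIEW code: the hypothetical `scan_block` stands in for the reentrant subVI and `scan_split` for the top-level VI that calls it several times. The example uses `ThreadPoolExecutor` only to show the structure; in CPython the global interpreter lock keeps pure-Python threads from running on several cores at once, so real CPU parallelism would need a `ProcessPoolExecutor` (with module-level functions and an `if __name__ == "__main__":` guard on platforms that spawn worker processes).

```python
from concurrent.futures import ThreadPoolExecutor


def scan_block(block, start, threshold):
    """Serial scan of one contiguous block of rows (stand-in for one reentrant subVI call).

    Row indices are offset by `start` so results from all blocks can be merged.
    """
    hits = []
    for k, row in enumerate(block):
        for j, v in enumerate(row):
            if v > threshold:
                hits.append((start + k, j, v))
                break
    return hits


def scan_split(data, threshold, n_blocks, executor):
    """Split the rows into n_blocks contiguous blocks and scan them concurrently."""
    size = max(1, -(-len(data) // n_blocks))  # ceiling division, at least 1 row
    futures = [executor.submit(scan_block, data[s:s + size], s, threshold)
               for s in range(0, len(data), size)]
    merged = []
    for f in futures:  # collect in submission order so rows stay sorted
        merged.extend(f.result())
    return merged


data = [[0.1, 0.7], [0.2, 0.3], [0.8, 0.1], [0.4, 0.9], [0.6, 0.6]]
with ThreadPoolExecutor(max_workers=8) as pool:
    print(scan_split(data, 0.5, 8, pool))
    # [(0, 1, 0.7), (2, 0, 0.8), (3, 1, 0.9), (4, 0, 0.6)]
```

Each block works on its own slice and only returns its hits; nothing is shared between blocks until the final merge, which is the property the parallel split relies on.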

Could someone please guide me as to what I am doing wrong? Thanks for the help!

05-17-2013 05:04 PM - edited 05-17-2013 05:07 PM

Your inner subVI is very inefficient, constantly resizing arrays in shift registers. This probably interferes with the parallel operations. I think you could replace the innermost FOR loop with "Threshold 1D Array".
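LabVIEW's Threshold 1D Array function returns a fractional (interpolated) index instead of you scanning element by element in your own loop. The sketch below mimics that idea in Python. The function name and its edge-case behavior (0.0 when the first element already reaches the threshold, `None` when nothing does) are illustrative assumptions, not NI's documented semantics, and it uses `>=` at the crossing, unlike the strict `>` in the original question; check the LabVIEW help before relying on details.

```python
def threshold_fractional_index(row, threshold):
    """Fractional index where `row` first reaches `threshold`, by linear interpolation.

    Edge cases are illustrative choices: returns 0.0 if the first element
    already reaches the threshold, and None if no element does.
    """
    if not row:
        return None
    if row[0] >= threshold:
        return 0.0
    for j in range(1, len(row)):
        if row[j] >= threshold:
            # Here row[j-1] < threshold <= row[j], so the denominator is > 0.
            lo, hi = row[j - 1], row[j]
            return (j - 1) + (threshold - lo) / (hi - lo)
    return None


print(threshold_fractional_index([0.0, 1.0, 2.0, 3.0], 1.5))  # 1.5
```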

Since you only add one scalar in the innermost loop, you should get rid of the shift register, output the last values, and autoindex on the outer loop boundary to form the 1D array. Now you can probably use a parallel FOR loop for the outer loop, simplifying your code.
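The restructuring suggested above (one scalar per row, no shift register, results collected by auto-indexing) looks roughly like this in Python, as a sketch under the assumption that -1 is an acceptable "no match" marker; all names are hypothetical. Because no state flows from one row to the next, the same per-row function can be handed to an executor's `map`, which is the rough analogue of switching the outer loop to a parallel FOR loop.

```python
from concurrent.futures import ThreadPoolExecutor

NO_MATCH = -1  # hypothetical sentinel: row has no element above the threshold


def first_above(row, threshold):
    """Exactly one scalar per row: index of the first element > threshold, or NO_MATCH."""
    return next((j for j, v in enumerate(row) if v > threshold), NO_MATCH)


def first_above_per_row(data, threshold, executor=None):
    """One output per row, collected in row order (like auto-indexing on the loop border).

    No state is carried between rows, so the rows can be handed to an executor.
    """
    if executor is None:
        return [first_above(row, threshold) for row in data]
    return list(executor.map(first_above, data, [threshold] * len(data)))


data = [[0.1, 0.7, 0.9], [0.2, 0.3, 0.1], [0.8, 0.1, 0.6]]
idx = first_above_per_row(data, 0.5)
print(idx)  # [1, -1, 0]
# Drop rows without a match once, after the loop, instead of resizing inside it.
print([(i, j) for i, j in enumerate(idx) if j != NO_MATCH])  # [(0, 1), (2, 0)]
```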

05-17-2013 05:21 PM - edited 05-17-2013 05:27 PM

@altenbach wrote:

Since you only add one scalar in the innermost loop, you should get rid of the shift register, output the last values, and autoindex on the outer loop boundary to form the 1D array. Now you can probably use a parallel FOR loop for the outer loop, simplifying your code.

Here's a quick example that probably does all you need to find the first value above the threshold.

For the other problem, you can first reverse the array and multiply both the array and the threshold by -1. Modify as needed.
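Only the reversal half of that trick is sketched here, for a plain "first element greater than threshold" search: searching the reversed row finds the deepest match, and the index is mapped back with N-1-j. The negation step mentioned in the post is not modeled. The helper names are hypothetical, and `row` is assumed to be a Python list so that `row[::-1]` works.

```python
def first_above(row, threshold):
    """Index of the first element > threshold, or -1 if there is none."""
    return next((j for j, v in enumerate(row) if v > threshold), -1)


def last_above(row, threshold):
    """Deepest element > threshold, found by searching the reversed row."""
    j_rev = first_above(row[::-1], threshold)
    # Element j_rev of the reversed row is element N-1-j_rev of the original.
    return -1 if j_rev == -1 else len(row) - 1 - j_rev


row = [0.1, 0.7, 0.9, 0.2]
print(first_above(row, 0.5), last_above(row, 0.5))  # 1 2
```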

Note that this is the only subVI you need. Call it directly from the top-level VI.

Of course, as a first step you might want to make the simulated data a bit more realistic. With plain random numbers, you get a match within the first few points. I would, for example, use a running sum of random values and divide each row by its highest value.
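One way to follow that suggestion, sketched in Python: each row is a running sum of positive random steps divided by the row's largest value, so every row rises from near 0 to exactly 1.0 and a threshold such as 0.5 is typically crossed well inside the row rather than within the first few points. The step distribution (uniform on (0, 1]) and the function name are assumptions.

```python
import random
from itertools import accumulate


def simulated_rows(m, n, seed=None):
    """m rows of n points: running sum of positive random steps, scaled so each row ends at 1.0."""
    if n < 1:
        raise ValueError("n must be at least 1")
    rng = random.Random(seed)
    rows = []
    for _ in range(m):
        # 1.0 - random() lies in (0, 1], so every step is positive.
        running = list(accumulate(1.0 - rng.random() for _ in range(n)))
        peak = running[-1]  # the sum never decreases, so the last value is the highest
        rows.append([v / peak for v in running])
    return rows


rows = simulated_rows(3, 10, seed=42)
print([round(v, 2) for v in rows[0]])
```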

05-21-2013 11:36 AM

Altenbach,

Thank you for your reply. I did realize that my code was inefficient, but that structure is similar to what I expect to have to implement for other kinds of algorithms as well. Also, I fully expect that my real data will have plenty of columns which will not have ANY values above my threshold, in which case they should be skipped entirely. In one of the versions I tested to see if I could get the multithreading to work, I initialized the xVal, yVal and zVal arrays and then deleted the unused elements afterwards, but this did nothing for the multithreading.

So I appreciate the advice on how to improve my vi, but I still don't have an answer to why it wasn't using 8 cores in the first place. As I mentioned above, I plan on having many different algorithms that perform similar tasks and most of them will not be able to use the parallel for-loop. Do you have any suggestions as to why that may be?

Thanks again for your time,

05-21-2013 02:33 PM - edited 05-21-2013 02:52 PM

@ColeVV wrote:

... but I still don't have an answer to why it wasn't using 8 cores in the first place. As I mentioned above, I plan on having many different algorithms that perform similar tasks and most of them will not be able to use the parallel for-loop. Do you have any suggestions as to why that may be?

I guess the slow part in your code is the constant array reallocations and this operation cannot be parallelized. As you can see from my code, the real computations are orders of magnitude faster, so even if they run in parallel, it won't make a difference.

My above code still does not take full advantage of all CPUs, because the single iteration computations are so fast that things like parallel loop overhead and data reassembly start to become visible. Instead of looking at CPU usage, you should run the same code with and without parallelization and look at the parallelization gain factor.
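The gain-factor measurement suggested above can be sketched as a small timing helper in Python (names hypothetical). It takes the best of several runs of each version of the same workload and divides the serial time by the parallel time; a value near 1 means the parallel version buys nothing, and on P cores the ideal value approaches P.

```python
import time


def best_time(fn, repeats=5):
    """Shortest wall-clock time of fn() over several runs, in seconds.

    Taking the minimum filters out noise from other processes.
    """
    best = float("inf")
    for _ in range(repeats):
        t0 = time.perf_counter()
        fn()
        best = min(best, time.perf_counter() - t0)
    return best


def gain_factor(serial_fn, parallel_fn, repeats=5):
    """Serial time divided by parallel time for the same workload.

    1.0 means no benefit; on P cores the ideal value approaches P.
    """
    t_serial = best_time(serial_fn, repeats)
    t_parallel = best_time(parallel_fn, repeats)
    return t_serial / t_parallel if t_parallel > 0 else float("inf")
```

For example, pass one callable that processes the full array with a single worker and another that processes it with eight; comparing the two times says more than the CPU-usage graph does.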

If done right, LabVIEW can scale over as many CPU cores as you throw at it. Have a look at my multicomponent EPR fitting program, where a 16-core dual Xeon is much faster than a 4-core i7, even though it runs at a slightly lower clock frequency. Have a look at my algorithm description here. Still, the same program runs fine on a netbook (just much slower).

05-21-2013 02:42 PM - edited 05-21-2013 02:45 PM

What is the make and model of your 8-core CPU? In my experience, newer AMDs don't perform that well, but I haven't done any benchmarks on the newest version. Whatever it is, I would like to include the results in my table.

(Feel free to download and run my program. Just open it, go to the "about" tab and click benchmark. Send me the results to be included.)

Of course I am always interested in benchmark results from anyone, especially for exotic CPUs :D. (My e-mail is my forum name at gmail.com.)

05-21-2013 03:42 PM

The CPU is an Intel i7-3820 at 3.6 GHz.

I think you may be getting a little hung up on the memory allocation. I know it was bad, but if you look at this vi, it only allocates memory before and after the FOR loop. Using this vi gives the same results as before (i.e. 1 core instead of 8), and when I time the allocation vs. processing vs. deallocation, I find that the processing takes ~55 times longer than the allocation and the deallocation is negligible.

Again, I know that this vi may be inefficient compared with the threshold array vi approach, but I would still like to know why the parallelization doesn't work as advertised here.

05-21-2013 04:06 PM

What would make that code able to run on multiple cores on your system?

There is nothing in your code that can run in parallel. LabVIEW has to run each iteration of the FOR loop one after the other.

To quote from the page you link to:

"The architecture of this VI prevents LabVIEW from taking advantage of any parallelism. There is a mandatory order for every operation in the loop. This order is enforced by dataflow, and there is no other execution order possible because every operation must wait for its inputs."

Because you have a wire (the lowest blue wire) that is counted up AND used by the case structure, each iteration has to run one after the other, not in parallel, since the number from the previous iteration is used in the next iteration.

A quote:

"Parallelism requires that no single loop iteration depends on any other loop iteration."
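The dependency described above can be shown with two small Python functions (hypothetical names; a sketch, not a translation of the posted VI). In `dependent_fill`, the `count` variable plays the role of a counter carried from iteration to iteration like a shift register: where iteration i writes depends on how many earlier iterations matched, so the iterations cannot be reordered or split. `independent_fill` writes slot i using only input i and compacts the result once after the loop, so its iterations can run in any order (it deliberately runs them backwards) and still give the same answer.

```python
def dependent_fill(flags):
    """Loop-carried dependency: the output slot used by iteration i depends on
    how many earlier iterations matched, so iterations must run in order."""
    out = [None] * len(flags)
    count = 0  # carried from one iteration to the next, like a shift register
    for i, hit in enumerate(flags):
        if hit:
            out[count] = i  # slot chosen by the previous iterations' result
            count += 1
    return out[:count]


def independent_fill(flags):
    """No loop-carried state: iteration i reads only flags[i] and writes only slot i,
    so any order (or parallel execution) gives the same result."""
    out = [None] * len(flags)
    for i in reversed(range(len(flags))):  # deliberately run out of order
        out[i] = i if flags[i] else None
    return [v for v in out if v is not None]  # compact once, after the loop


flags = [False, True, True, False, True]
print(dependent_fill(flags), independent_fill(flags))  # [1, 2, 4] [1, 2, 4]
```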

05-21-2013 04:12 PM

@dkfire wrote:

What should make that code being able to run on multiple cores on your system?

I think the point was to call multiple instances of this reentrant VI as demonstrated in the original post.

05-21-2013 04:13 PM

dkfire,

I bet you did not look at the original post and notice that there was more than one vi. The "Single" vi, which I posted just previously, is called 8 times by the "Parallel" vi. THAT is what I expect to use 8 cores. See below: