07-08-2013 02:33 PM

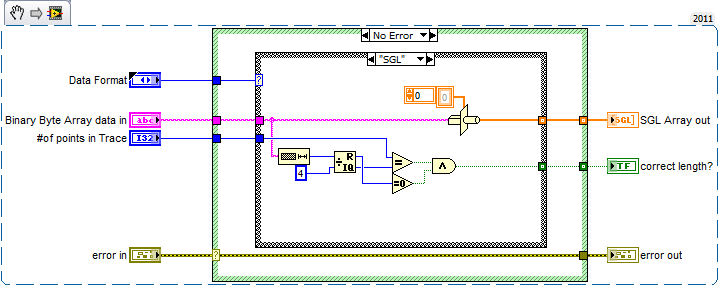

As the title suggests, I'm looking for input on a more efficient way (if any) to convert a given string to an array of SGLs.

This post is just to get feedback on better ways to do things, from a personal educational point of view. I don't actually need to improve the performance. (I just need to keep improving my coding skills.)

"More efficient" should here be taken to mean fewer CPU cycles and/or a smaller memory footprint, in that order. (That is, a smaller load on the CPU is preferred, so if a smaller memory footprint comes at the expense of additional CPU cycles, the hit on memory footprint is preferred.)

The input string comes from a TCP read and contains only raw byte data in the expected format (SGL in this case), without any header/footer metadata.

Currently, my implementation is very straightforward, and since the expected array size is known, I'm relying on the for loop being smart about allocation when auto-indexing.

For the conversion, I'm doing a straight type-cast of 4 characters at a time.

The code below the for loop is just intended to give a "true" boolean output if the string length matches the expected string length as determined by the "# of points in trace" input. No further error handling is required or necessary.
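Since the VI itself is only shown as an image, here is a rough text-language analogy of the approach described above, sketched in Python. The function name `string_to_sgl_loop` and its parameters are made up for illustration, and big-endian byte order is assumed; this is not a transcription of the block diagram.

```python
import struct

def string_to_sgl_loop(data: bytes, num_points: int):
    """Decode 32-bit floats four bytes at a time (hypothetical helper).

    Assumes big-endian single-precision values with no header or footer.
    """
    values = []
    for i in range(num_points):
        chunk = data[4 * i : 4 * i + 4]      # analogous to "String Subset"
        if len(chunk) < 4:
            break                            # ran out of data early
        values.append(struct.unpack(">f", chunk)[0])  # cast 4 bytes to one SGL
    # Boolean output: does the string length match the expected length?
    length_ok = len(data) == 4 * num_points
    return values, length_ok

payload = struct.pack(">3f", 1.0, -2.5, 0.25)
values, length_ok = string_to_sgl_loop(payload, 3)
print(values, length_ok)
```

The per-iteration slice and single-value unpack are the parts that correspond to the suspected performance hits.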

I would suspect the "string subset" combined with the iterations (for loop) are the two worst performance hits in this VI, but I can't think of a good way to do the conversion as an array operation, and if I could, then I would need to at least do a "string to U8 array" conversion first.

Thanks to any and all who bothered to read and look. Your time and input are, as always, appreciated!

-Q

07-08-2013 02:37 PM

07-08-2013 02:39 PM

Just typecast it to an array of SGL instead. Nearly no code needed.
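For readers without LabVIEW at hand, the idea of casting the whole string in one operation can be sketched in Python as a single `struct.unpack` call. The helper name `string_to_sgl_array` is hypothetical, big-endian data is assumed, and the choice to ignore trailing bytes that do not fill a whole element is an assumption of this sketch rather than a statement about how the Type Cast function behaves.

```python
import struct

def string_to_sgl_array(data: bytes):
    """Reinterpret a byte string as big-endian 32-bit floats in one call."""
    count = len(data) // 4                   # number of whole 4-byte elements
    # Assumption: any leftover bytes (fewer than 4) are simply ignored.
    return list(struct.unpack(f">{count}f", data[: 4 * count]))

payload = struct.pack(">4f", 0.5, 1.0, 2.0, -8.0)
result = string_to_sgl_array(payload)
print(result)
```

The loop, the substring extraction, and the per-element cast all collapse into one bulk conversion.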

07-08-2013 02:40 PM - edited 07-08-2013 02:43 PM

Gerd,

Well, I learned something new, just like I had hoped. I was unaware of just how smart the type-cast function can be. I did not realize it was capable of interpreting a string as an array of a given data type.

Can't get much simpler than this I bet!

Thanks for taking the time to answer what turned out to be a trivial question!

-Q

07-08-2013 03:26 PM

Just to push the discussion along (and hopefully learn something myself), would a better alternative be to use the "Unflatten From String" primitive for this? I always worry that the Typecast node's behavior may vary depending upon the endianness of the platform it's running on. With the UFS, you have documented control of endianness, error terminals, and possibly useful leftover string data. (But I don't know about/haven't evaluated performance between the two methods.)
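The three extras mentioned here (explicit endianness, error reporting, and leftover string data) can be illustrated with a small Python sketch. The function `unflatten_sgl` and its parameters are invented for illustration and only loosely mimic the primitive; they are not its actual interface.

```python
import struct

def unflatten_sgl(data: bytes, count: int, byte_order: str = "big"):
    """Decode `count` 32-bit floats and return them with the unused bytes."""
    prefix = ">" if byte_order == "big" else "<"   # explicit endianness choice
    needed = 4 * count
    if len(data) < needed:
        # Stand-in for an error output: report the problem instead of guessing.
        raise ValueError(f"need {needed} bytes, got {len(data)}")
    values = list(struct.unpack(f"{prefix}{count}f", data[:needed]))
    rest = data[needed:]                           # leftover string data
    return values, rest

payload = struct.pack("<2f", 1.5, 2.0) + b"tail"
values, rest = unflatten_sgl(payload, 2, byte_order="little")
print(values, rest)
```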

Dave

07-08-2013 03:32 PM - edited 07-08-2013 03:32 PM

The Unflatten From String primitive has much richer features (error in/out, endianness, prepend size option, etc.) and as such carries more baggage. It is important to know that LabVIEW's flattened data is always big-endian (independent of platform), so the output of typecast is always predictable. I have not benchmarked them recently, but they are probably similar in performance.

You only need to worry about endianness if you communicate with external, non-LabVIEW programs that expect little-endian. In this case, UFS/FTS makes things simpler, of course.
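To make the byte-order concern concrete, here is a small Python illustration of the same four bytes decoded both ways. The byte values are the standard IEEE 754 single-precision encoding of 1.0; the variable names are just for this example.

```python
import struct

# IEEE 754 single-precision 1.0, most significant byte first (big-endian).
raw = bytes([0x3F, 0x80, 0x00, 0x00])

as_big = struct.unpack(">f", raw)[0]     # read as big-endian
as_little = struct.unpack("<f", raw)[0]  # same bytes read as little-endian

print(as_big)                 # the intended value
print(as_little == as_big)    # the misread value does not match

# A little-endian peer would send the bytes in the opposite order.
little_endian_bytes = struct.pack("<f", 1.0)
print(little_endian_bytes == raw[::-1])
```

If the sender and the reader disagree on byte order, the data still decodes without error but yields a meaningless number, which is why an explicit endianness setting helps when talking to non-LabVIEW programs.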

07-08-2013 04:06 PM

Thanks, Chris, I couldn't remember the Typecast behavior.

So the only "problem", if you could even call it that, is that its behavior may be consistent with OR contrary to the OS's. In the present discussion, data over TCP should always be big-endian, so typecast works fine here.

If I were writing a cross-platform application that, say, processed data from a file system, I'd probably prefer the UFS.

Dave

07-08-2013 04:22 PM

Also remember that typecast is much more versatile, because it does not require one side to be a string.

You can typecast a U32 array to an SGL array of the same size, a DBL array to a complex DBL array with half the length, a U16 array to a complicated cluster, or a U8 array with 8 elements to a plain scalar DBL, for example. Mix & match!
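A few of these reinterpretations can be mimicked in Python by packing values to bytes and unpacking them as another type. The helper `type_cast` is hypothetical, big-endian layout is assumed throughout, and the real-then-imaginary ordering used for the complex example is also an assumption of this sketch.

```python
import struct

def type_cast(values, src_fmt: str, dst_fmt: str):
    """Reinterpret the big-endian bytes of `values` as another type."""
    raw = struct.pack(">" + src_fmt, *values)
    return struct.unpack(">" + dst_fmt, raw)

# U32 array -> SGL array of the same size (same bits, new interpretation).
sgl = type_cast([0x3F800000, 0x40000000], "2I", "2f")

# U8 array with 8 elements -> one scalar DBL.
dbl = type_cast([0x3F, 0xF0, 0, 0, 0, 0, 0, 0], "8B", "d")

# DBL array -> complex array with half the length
# (assumes each pair is stored as real part, then imaginary part).
flat = [1.0, 2.0, 3.0, 4.0]
cplx = [complex(re, im) for re, im in zip(flat[0::2], flat[1::2])]

print(sgl, dbl, cplx)
```

Nothing in the bytes records what type they started as, which is the source of both the flexibility and the danger.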

Of course this also makes it somewhat dangerous unless you fully understand the underlying data. 😉

One annoying limitation is the fact that it does not accept multidimensional arrays as inputs. (It is understandable that multidimensional arrays as type or output cannot be allowed because of ambiguity, but inputs should be allowed!) ... I see an idea coming, stay tuned. :D

07-08-2013 05:08 PM

@altenbach wrote:

One annoying limitation is the fact that it does not accept multidimensional arrays as inputs. (It is understandable that multidimensional arrays as type or output cannot be allowed because of ambiguity, but inputs should be allowed!) ... I see an idea coming, stay tuned. :D

The idea has been posted here: The Type Cast function should accept higher dimensional arrays as inputs