Is it faster to index an initialized matrix or to use an auto-indexed tunnel, both within a for loop -- what is going on?

03-09-2016 01:29 PM

Disclaimer: MATLAB user here.

Note: This is a LabVIEW question, not a MathScript question. I just use MathScript to demonstrate the point.

This is background to the particular question.

In MATLAB, if you want decent compute efficiency, you want preallocated arrays and built-in functions.

That means this is slow:

```matlab
tic
for i = 1:10000
    x(i) = rand;
end
t1 = toc
```

(t1 = 0.266, so 37.6 elements/millisecond)

and this is faster:

```matlab
% Run this before the next block, but not pasted together with it,
% because that slows the timing down a lot.
clear x
```

```matlab
tic
x = zeros(10000,1); % without ",1", zeros(10000) is a 10000-by-10000 matrix, which overflows MathScript's memory
for i = 1:10000
    x(i) = rand;
end
t2 = toc
```

(t2 = 0.205, so 48.8 elements/millisecond)
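The memory problem noted in the `zeros(10000)` comment follows from MATLAB semantics: with a single argument, `zeros(n)` returns an n-by-n matrix. Assuming 8-byte doubles (the MATLAB default), the rough sizes are:

$$
10000 \times 10000 \times 8\ \text{bytes} = 8 \times 10^{8}\ \text{bytes} \approx 800\ \text{MB},
\qquad
10000 \times 1 \times 8\ \text{bytes} = 8 \times 10^{4}\ \text{bytes} = 80\ \text{kB}.
$$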

In MathScript this is a 23% improvement in runtime.

```matlab
ratio = 100*(t2-t1)/t1
```

(ratio = -23.3)
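One way to see why growing `x` one element at a time costs more than preallocating: if every out-of-range write forces a reallocation to exactly the new size (a worst-case assumption; MATLAB's and MathScript's actual growth strategies are not described here), each new element copies all the earlier ones. The hypothetical `GrowOnWriteArray` below is a Python sketch that counts those copies.

```python
class GrowOnWriteArray:
    """Hypothetical model: writing past the end reallocates to exactly i + 1 slots."""

    def __init__(self, preallocate: int = 0):
        self.data = [0.0] * preallocate  # like x = zeros(n,1) when preallocate = n
        self.copies = 0                  # element copies caused by reallocation

    def set(self, i: int, value: float) -> None:
        if i >= len(self.data):
            new = [0.0] * (i + 1)
            for k, old in enumerate(self.data):  # move existing elements to the new buffer
                new[k] = old
                self.copies += 1
            self.data = new
        self.data[i] = value


def fill(n: int, preallocate: bool) -> GrowOnWriteArray:
    arr = GrowOnWriteArray(n if preallocate else 0)
    for i in range(n):  # 0-based version of "for i = 1:n"
        arr.set(i, float(i))
    return arr
```

Under this model, `fill(n, preallocate=False)` performs n(n-1)/2 element copies while the preallocated version performs none. The model ignores interpreter overhead, so it says nothing about the size of the 23% gap measured above.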

So when I use LabVIEW for the same thing I get:

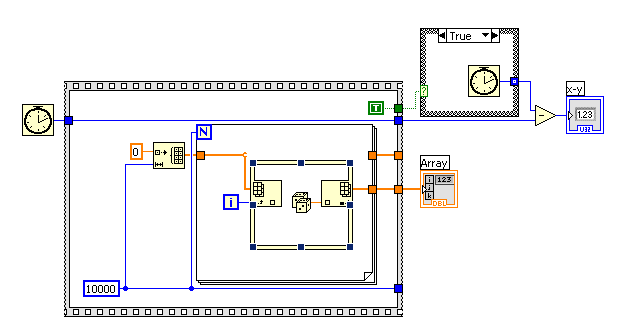

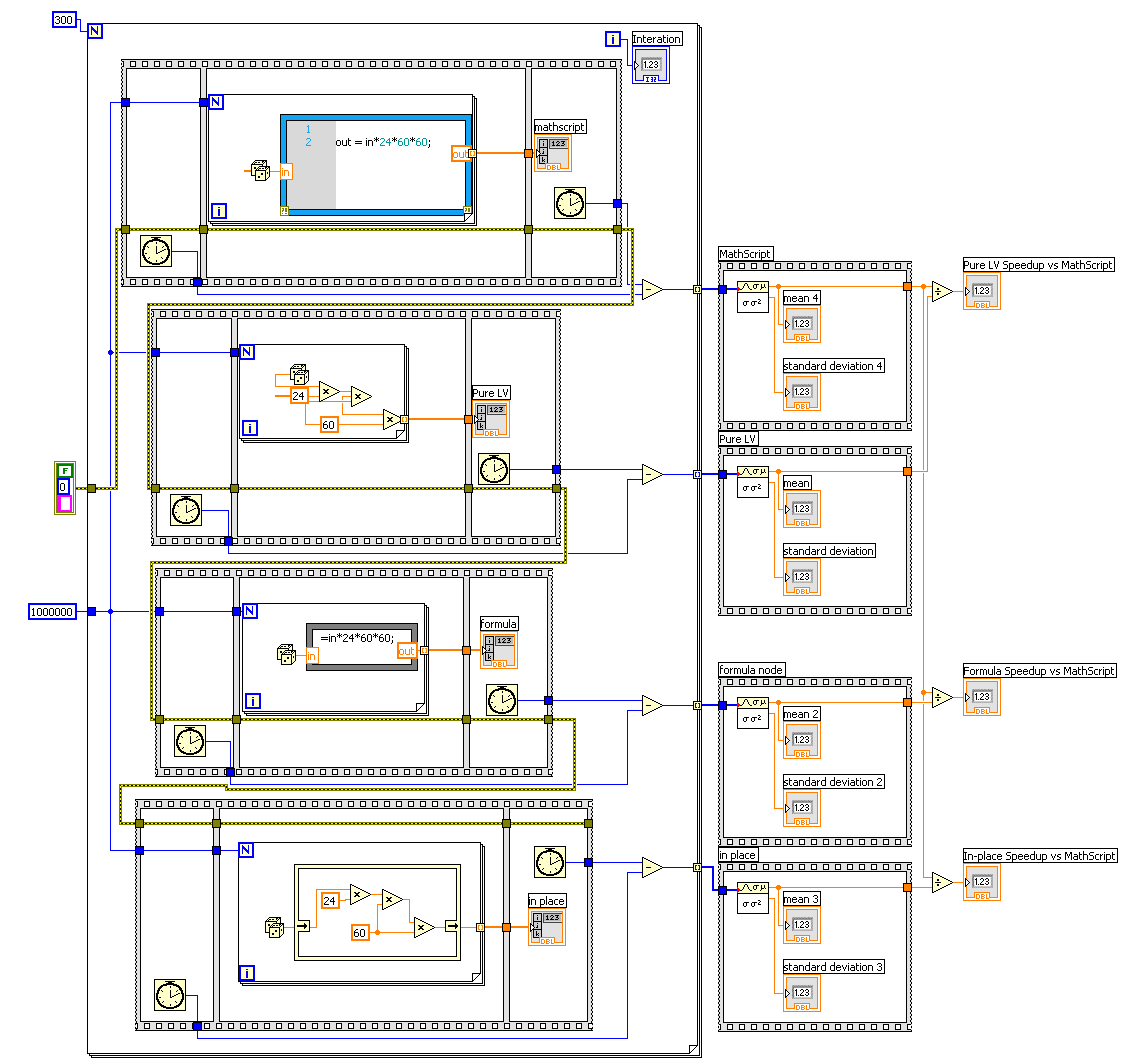

which results in a compute time of 269 ms for about 10,000,000 elements in a vector. (Note this is literally ~1000x faster than MathScript.) It created ~37k elements per millisecond.

If I swap that for indexing into an initialized array, the timing gets strange.

When I run this for 10k elements, or about 1000 times less, then it completes in about 30ms, or about 333 elements per millisecond.

When I run it for 100k elements, or about 10x more than last run and 100x less than the previous vi, then it completes in about 5000 ms, or about 20 elements per millisecond.

At best the build and replace is running hundreds of times slower, and it is possibly running ~1800x slower.
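The two initialized-array timings also show that the cost is not linear in the number of elements. Going from 10k to 100k elements (10x) raised the time from about 30 ms to about 5000 ms, so fitting $t \propto n^{k}$ to those two points gives:

$$
k = \frac{\log(5000/30)}{\log(100000/10000)} = \log_{10}(166.7) \approx 2.2
$$

An exponent near 2 points to work that grows with the square of the array size (for example, touching the whole array on every iteration) rather than a per-element cost that is merely larger. Two points are a thin basis for a fit, so treat this as a hint rather than a measurement.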

Here is my problem:

One of these things is 1800x slower than the other. Why is that reasonable? Why is 111x reasonable? These are simple changes.

I absolutely adore that LabVIEW is literally 1000x faster than MATLAB at some tasks. I need to understand why, in some cases, that 1000x multiplier drops to a 2.4x DECREASE. My intuition is not only wrong, it is stunningly wrong, and it costs me the 1000x speed multiplier that I am so very happy with.

My thought:

If I did this in pseudo-assembler, the first loop would be as follows.

I point to the stack, call the random number generator to get a value on the stack, increment my counter, test if it is 10 million, update my pointer location and iterate.

The second loop would be as follows:

I point to the stack, write a zero, increment my counter, test if it is 10 million, update my pointer location and iterate.

then

I go back to the start of the stack location, call the random number generator to get a value on the stack, increment my counter, test if it is 10 million, update my pointer location and iterate.

The ratio of the runtime for the loops would be the difference in compute-write-increment time for a (pseudo)random number THEN for a zero versus write-increment of zero. I would expect it to be somewhere between 2x and 10x difference.

There is the OS. Maybe the algorithm that computes a random number has a lot of steps, perhaps thousands. There is also the difference between on-die cache, L2, RAM, and disk. Given enough elements, I would expect things to hit bottlenecks and get slow.
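The pseudo-assembler expectation above can be written as a simple write-count model. This is a Python sketch with made-up function names; it ignores caches, the OS, and the cost of the random number generator. Both versions touch each element a fixed number of times, so total work is linear in n and the ratio between them is a constant (2 writes per element versus 1).

```python
import random


def single_pass(n: int) -> tuple[list[float], int]:
    """First loop: generate a value and store it at the next position."""
    buf: list[float] = []
    writes = 0
    for _ in range(n):
        buf.append(random.random())
        writes += 1
    return buf, writes


def zero_then_fill(n: int) -> tuple[list[float], int]:
    """Second version: write n zeros, then go back and overwrite each slot."""
    buf: list[float] = []
    writes = 0
    for _ in range(n):
        buf.append(0.0)
        writes += 1
    for i in range(n):
        buf[i] = random.random()
        writes += 1
    return buf, writes
```

Under this model, `zero_then_fill(n)` does exactly twice the writes of `single_pass(n)` for any n, so a 111x to 1800x gap has to come from work the model leaves out.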

03-09-2016 02:21 PM - edited 03-09-2016 02:24 PM

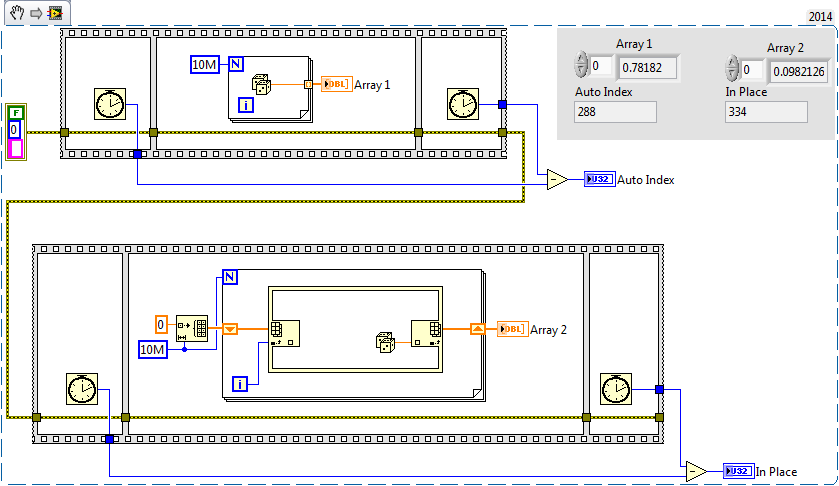

You need shift registers when using the in place element structure. I don't know why, but it's much faster (and a more accurate comparison) when you use the shift register.

03-09-2016 02:24 PM

First of all, your second set of code is wrong. You are only setting the last value of the array in the end. You should be using a shift register to keep your array in and use Replace Array Subset.

Once you use Replace Array Subset, I would expect the times to be nearly identical. The beauty of the FOR loop is that it knows how many times it should run. Therefore when you use the autoindexing tunnel, LabVIEW preallocates the array.
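A text-language analogy of the two correct patterns, as a Python sketch (`autoindexed_for` and `shift_register_replace` are hypothetical names, not LabVIEW functions). Because a For Loop's count N is known before it starts, both can allocate the output exactly once; the second mirrors Initialize Array feeding a shift register, with Replace Array Subset writing into that same buffer on each iteration.

```python
from typing import Callable


def autoindexed_for(n: int, body: Callable[[int], float]) -> list[float]:
    """Models an autoindexing output tunnel: N is known, so allocate once up front."""
    out = [0.0] * n
    for i in range(n):
        out[i] = body(i)
    return out


def shift_register_replace(n: int, body: Callable[[int], float]) -> list[float]:
    """Models Initialize Array -> shift register -> Replace Array Subset."""
    sr = [0.0] * n  # Initialize Array, wired to the left shift register
    for i in range(n):
        sr[i] = body(i)  # replace element i of the buffer carried by the shift register
    return sr  # value on the right shift register after the last iteration
```

For example, `autoindexed_for(6, lambda i: float(i * i))` and `shift_register_replace(6, lambda i: float(i * i))` both return `[0.0, 1.0, 4.0, 9.0, 16.0, 25.0]`, each after a single allocation.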

There are only two ways to tell somebody thanks: Kudos and Marked Solutions

Unofficial Forum Rules and Guidelines

"Not that we are sufficient in ourselves to claim anything as coming from us, but our sufficiency is from God" - 2 Corinthians 3:5

03-09-2016 04:40 PM

@Gregory wrote: You need shift registers when using the in place element structure. I don't know why, but it's much faster (and a more accurate comparison) when you use the shift register.

Here's why: without the shift register, LabVIEW needs to make a copy of the entire array at the in-place element structure, because the original, unmodified array needs to be available at the loop tunnel on the next loop iteration. By using a shift register, you're telling LabVIEW to reuse the modified array, rather than make copies of the original one.
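The copy described above can be modeled in Python. This sketch assumes, as the explanation says, that a plain input tunnel forces one full copy of the array per iteration, and that the output is a last-value tunnel; the function names are hypothetical. With n iterations over an n-element array that is n² element copies, so 10x more elements means about 100x more copying, roughly in line with the 30 ms to 5000 ms jump in the original post. The plain-tunnel version also keeps only the last iteration's change, which is the bug pointed out earlier in the thread.

```python
def plain_tunnel(n: int) -> tuple[list[float], int]:
    """Array enters through a plain tunnel: every iteration copies the original."""
    original = [0.0] * n
    copies = 0
    result = original
    for i in range(n):
        working = list(original)  # original must stay intact for the next iteration
        copies += n
        working[i] = float(i)
        result = working  # only the last iteration's array leaves the loop
    return result, copies


def shift_register(n: int) -> tuple[list[float], int]:
    """Array carried in a shift register: the same buffer is modified in place."""
    sr = [0.0] * n
    for i in range(n):
        sr[i] = float(i)
    return sr, 0
```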

There is no need for an in-place element structure here. If you are replacing an element of an array, rather than modifying an existing value, use Replace Array Subset instead. Same thing with a cluster - if you are replacing a cluster element with an entirely new value, use bundle, not an in place element.

03-09-2016 05:16 PM

@nathand wrote: Here's why: without the shift register, LabVIEW needs to make a copy of the entire array at the in-place element structure, because the original, unmodified array needs to be available at the loop tunnel on the next loop iteration. By using a shift register, you're telling LabVIEW to reuse the modified array, rather than make copies of the original one.

Excellent, that makes it much clearer what is going on!

03-10-2016 03:52 AM

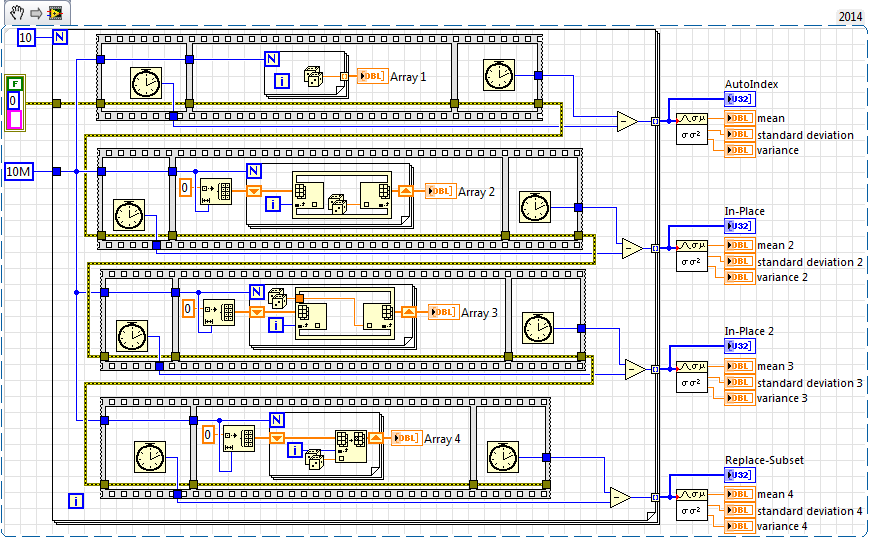

As you can see, I added two more comparisons.

What confuses me is the speed-up when the random number generator is taken out of the In Place Element structure.

From my "understanding", that shouldn't be the case.

regards jwscs

If Tetris has taught me anything, it's errors pile up and accomplishments disappear.

03-10-2016 04:05 AM

After removing the indicators for the created arrays and running 50 iterations, it seems it doesn't matter whether the random number generator is inside or outside the In Place Element structure.

And Replace Array Subset is still faster 😉

Cheers!

03-10-2016 04:29 AM - edited 03-10-2016 04:34 AM

The question here, I think, is "is it better/faster to preallocate arrays, and which is the fastest way to do it?" The answer is yes. As others have shown, there are quite a few ways to do it, with measurable differences between them, but all of them (when implemented correctly) preallocate the array: the compiler is clever enough to know that it's going to need 10M elements when using autoindexing.

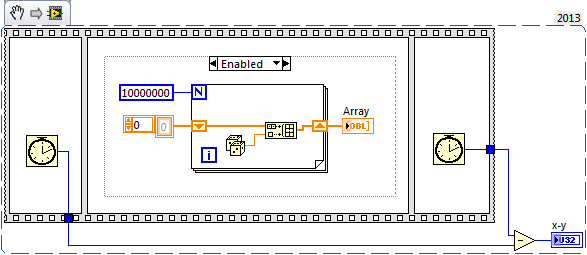

Let's not forget the other side, where the array is built dynamically. This is the one that is slow.

Here, the compiler can't preallocate the array so it has to allocate memory as the VI runs which is what slows it down.

The Build Array version takes ~1300 ms on my machine. The disabled case contains the random number wired straight to an autoindexing tunnel, which takes ~250 ms.

Using a conditional autoindexing tunnel is faster than build array - takes ~350ms. I think it allocates an array of maximum size and then trims it to the number of elements that were 'true'.
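The allocate-then-trim guess above can be sketched in Python. `conditional_collect` is a hypothetical name, and this models the poster's guess, not NI's documented implementation of the conditional tunnel.

```python
from typing import Callable, Sequence


def conditional_collect(values: Sequence[float], keep: Callable[[float], bool]) -> list[float]:
    """Allocate for the worst case (all kept), fill the front, then trim."""
    buf = [0.0] * len(values)  # one allocation sized to the iteration count
    count = 0
    for v in values:
        if keep(v):
            buf[count] = v
            count += 1
    return buf[:count]  # trim to the kept elements (in Python, a slice copy)
```

One allocation plus one trim is still linear in the number of iterations, which fits this case landing between the plain autoindexed tunnel (~250 ms) and Build Array (~1300 ms).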

Edit: Also, the big difference between the MathScript version and the LabVIEW version could have something to do with the random number generator (e.g. they might use algorithms of different complexity). I wonder what the difference would be if you took it out (e.g. indexing the iteration count into the array).

03-10-2016 04:33 AM

Thanks, that was clear. I just started playing around a little and didn't want to open a new thread for it.

Sorry if I went off-topic.

07-15-2016 04:28 PM

This is an update - finding a general use for this approach.

Here are the front panel (FP) and block diagram (appended).

Observations:

- The formula has the highest speedup, slightly edging out the pure LV

- The in-place element has the lowest variation but it gets that by also having the lowest speedup

Other explorations:

- Moving the array display out of the central frame of the sequence also changed timing profiles. The in-place element decreased substantially in performance.

- When they are placed in parallel on the error cluster instead of in series, the "pure LV" speedup profiles change substantially. MathScript finishes in 236, but the others take 116, 117, and 123.

- When the random number generation is in MathScript - then it is much slower.

Thanks.