From Friday, April 19th (11:00 PM CDT) through Saturday, April 20th (2:00 PM CDT), 2024, ni.com will undergo system upgrades that may result in temporary service interruption.

We appreciate your patience as we improve our online experience.

06-22-2014 11:06 PM

Dear Labvillians,

Highlight:

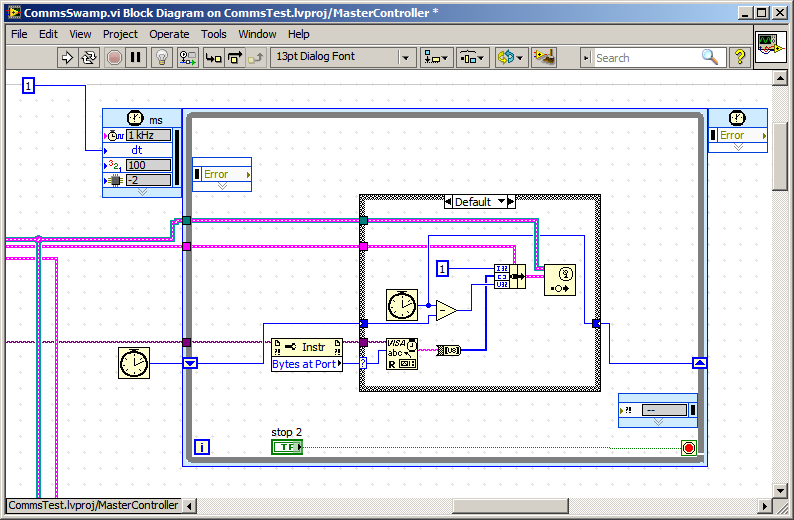

How do I stop serial "VISA read" from giving me packets instead of bytes?

Background:

I have a system that serially publishes 14-byte packets at a semi-regular interval.

At busy times, the producer of these packets queues the data, effectively producing super-packets in multiples of 14 bytes, sometimes as large as 8 packets (112 bytes).

My protocol handler has been designed to process bytes, packets or super-packets.
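A protocol handler like this has to cope with data arriving in any size, from a single byte up to a 112-byte super-packet. Below is a minimal Python sketch of that idea. It assumes fixed 14-byte packets with no sync byte or checksum, which is a simplification because the real framing isn't given here. It simply buffers whatever arrives and emits only complete packets. `PacketAssembler` is a hypothetical name, not part of any NI API.

```python
from typing import List

PACKET_SIZE = 14  # packet length from the post; real framing is assumed away


class PacketAssembler:
    """Accumulate serial data of any size and emit complete fixed-size packets.

    Hypothetical sketch: assumes every packet is exactly PACKET_SIZE bytes
    with no sync byte or checksum to resynchronise on.
    """

    def __init__(self, packet_size: int = PACKET_SIZE) -> None:
        self.packet_size = packet_size
        self._buffer = bytearray()

    def feed(self, chunk: bytes) -> List[bytes]:
        """Append a chunk (a byte, a packet or a super-packet) and return
        every complete packet now available, in arrival order."""
        self._buffer.extend(chunk)
        packets = []
        while len(self._buffer) >= self.packet_size:
            packets.append(bytes(self._buffer[:self.packet_size]))
            del self._buffer[:self.packet_size]
        return packets

    @property
    def pending(self) -> int:
        """Number of bytes held back waiting for the rest of a packet."""
        return len(self._buffer)


if __name__ == "__main__":
    asm = PacketAssembler()
    super_packet = bytes(range(112))      # 8 packets of 14 bytes
    print(asm.feed(super_packet[:5]))     # partial packet: nothing emitted yet
    print(len(asm.feed(super_packet[5:])))
```

Because the assembler keeps its own buffer, it gives the same packets whether the reads deliver single bytes or whole super-packets. That keeps the handler independent of how VISA Read happens to chunk the data.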

My application now has multiple devices and the order of message processing is critical to correct functionality.
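With several devices, one way to keep processing order correct is to stamp each message with the time its bytes were read and merge the per-device streams by that stamp. The Python sketch below does this with `heapq.merge`. The `Message` fields and `merge_by_arrival` are hypothetical names for illustration. The merge is only as good as the stamps: if bytes are held back until a whole super-packet has arrived, every packet in it would get the same late stamp, and the merged order could be wrong.

```python
import heapq
from typing import Iterable, Iterator, List, NamedTuple


class Message(NamedTuple):
    arrival: float  # hypothetical: time the bytes were read from the port
    device: str     # which serial device produced the message
    payload: bytes


def merge_by_arrival(streams: Iterable[List[Message]]) -> Iterator[Message]:
    """Merge per-device message lists (each already in arrival order)
    into a single stream ordered by arrival time.

    Ties keep the order in which the streams were passed in, because
    heapq.merge is stable.
    """
    return heapq.merge(*streams, key=lambda m: m.arrival)


if __name__ == "__main__":
    dev_a = [Message(0.0, "A", b"a1"), Message(2.0, "A", b"a2")]
    dev_b = [Message(1.0, "B", b"b1"), Message(3.0, "B", b"b2")]
    for msg in merge_by_arrival([dev_a, dev_b]):
        print(msg.arrival, msg.device, msg.payload)
```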

My observation is that VISA Read waits until the end of a packet/super-packet before passing the data to the application code (see plot below).

My expectation is that VISA Read should give me the bytes that are available, and not get too smart for itself by waiting for a packet.
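The "give me the available bytes" behaviour can be expressed as a read that first asks how many bytes are already buffered and then requests exactly that count, so it never blocks waiting for a whole packet. In LabVIEW, a common pattern is to wire the VISA "Bytes at Port" property into VISA Read's byte count. The Python sketch below shows only the logic. `SerialPort`, `bytes_waiting()`, `read_available()` and `FakePort` are hypothetical stand-ins, not a real VISA API.

```python
from typing import Protocol


class SerialPort(Protocol):
    """Hypothetical minimal port interface (not a real VISA API)."""

    def bytes_waiting(self) -> int: ...

    def read(self, count: int) -> bytes: ...


def read_available(port: SerialPort) -> bytes:
    """Return whatever bytes are already buffered, even if that is less
    than a packet; return b"" instead of blocking when nothing is waiting."""
    count = port.bytes_waiting()
    if count == 0:
        return b""
    return port.read(count)


class FakePort:
    """In-memory stand-in used to exercise read_available()."""

    def __init__(self) -> None:
        self._rx = bytearray()

    def receive(self, data: bytes) -> None:
        """Simulate bytes arriving on the wire."""
        self._rx.extend(data)

    def bytes_waiting(self) -> int:
        return len(self._rx)

    def read(self, count: int) -> bytes:
        out = bytes(self._rx[:count])
        del self._rx[:count]
        return out


if __name__ == "__main__":
    port = FakePort()
    port.receive(b"\x01\x02\x03")  # three bytes of a 14-byte packet
    print(read_available(port))    # returns the partial packet immediately
```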

I have observed this on PXI, Embedded PC, cFP and, most recently, cRIO.

I have experimented with the cRIO's Scan Interface rate, which helps reduce the packet backlog but doesn't get the reads down to sub-packet (byte-level) granularity.

I understand that one solution is to write FPGA code to handle it and pass the bytes through an RT FIFO, and there are some great examples on this site.

Unfortunately, this doesn't help with non-FPGA devices.

I have also dabbled in event-based serial reads, but they are diabolical on VxWorks devices.

Any help is appreciated.

06-22-2014 11:16 PM

Sometimes talking to yourself is helpful.

I hope this is a useful nugget for someone in the future.

06-22-2014 11:38 PM

So you did Rubber Duck Debugging. I have done it a few times myself and was able to solve the problems on my own.

06-22-2014 11:48 PM

Yup,

I have also heard it called Teddy Bear Code Review.

Rubber Duck Debug sounds better in this environment.

To me, the solution was a little counter-intuitive and required some experimentation to find the correct mode.

Option 0 - "None": Waits until the end of the packet before publishing. This is the default mode if you disable the termination character.

Option 1 - "Last Bit": Waits until the last bit of the byte before publishing.

Option 2 - "TermChar": The default; assumes 0x0A if you don't wire one.
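For reference, my understanding of the NI-VISA documentation for the serial end-of-read attribute (`VI_ATTR_ASRL_END_IN`) is that each option ends a read as follows. "None" completes only when the requested byte count has arrived. "Last Bit" ends on the first byte whose highest data bit is set. "TermChar" ends on the termination character. The Python model below is a simplified sketch of those rules. It ignores timeouts apart from reporting "still waiting", and `EndMode` and `bytes_returned` are hypothetical names, not a VISA API.

```python
from enum import IntEnum
from typing import Optional


class EndMode(IntEnum):
    """Serial end-of-read modes, numbered as in the options above."""
    NONE = 0      # VI_ASRL_END_NONE
    LAST_BIT = 1  # VI_ASRL_END_LAST_BIT
    TERMCHAR = 2  # VI_ASRL_END_TERMCHAR


def bytes_returned(rx: bytes, requested: int, mode: EndMode,
                   termchar: int = 0x0A, data_bits: int = 8) -> Optional[int]:
    """Model how many bytes a single read returns from buffered data `rx`.

    Simplified sketch: returns None when the read would still be waiting
    (and would eventually time out).
    """
    window = rx[:requested]  # a read never returns more than requested
    if mode == EndMode.LAST_BIT:
        last_bit = 1 << (data_bits - 1)  # 0x80 with 8 data bits
        for i, b in enumerate(window):
            if b & last_bit:
                return i + 1
    elif mode == EndMode.TERMCHAR:
        idx = window.find(bytes([termchar]))
        if idx != -1:
            return idx + 1
    # No end condition seen (or mode NONE): only the full count ends the read.
    return requested if len(rx) >= requested else None


if __name__ == "__main__":
    data = b"ab\ncd"
    for mode in EndMode:
        print(mode.name, bytes_returned(data, 10, mode))
```

Under this model, a binary packet stream that never contains 0x0A would stall a TermChar read until the requested count or the timeout is reached. That is one reason the choice of mode matters for this kind of protocol.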

Kind regards,

Tim L.