How do I neatly replace my excel sheet logging with database capability? + general questions about distributed computing with LabVIEW

Solved! 09-27-2015 12:14 PM


Amazingly enough, I'm almost done with a full-blown control + simulation application I've been working on for just over a year now, thanks in no small part to this community. The final stride is to run about 8k simulations and gather per-simulation and overall statistics on performance. Each simulation currently takes about 6 minutes of real time to run (~2 seconds of real time per hour of simulation time, run for 7 simulation days), so we're looking at about 800 hours of total time to simulate. I have 5 available computers and a Raspberry Pi 2 to run these simulations on, so I'm looking to set up a sort of compute cluster to finish in about two weeks' time.
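As a quick sanity check on the figures above, here is the arithmetic as a short Python sketch. It assumes the five computers run at the same speed and leaves the Raspberry Pi out; both are simplifications, not measurements.

```python
# Figures quoted in the post above.
N_SIMULATIONS = 8_000
MINUTES_PER_SIMULATION = 6
SECONDS_PER_SIM_HOUR = 2
SIM_DAYS = 7
N_COMPUTERS = 5  # assumption: equal speed, Raspberry Pi not counted

# 2 s per simulated hour over 7 simulated days, in minutes of real time.
minutes_from_rate = SECONDS_PER_SIM_HOUR * 24 * SIM_DAYS / 60

total_hours = N_SIMULATIONS * MINUTES_PER_SIMULATION / 60
days_in_parallel = total_hours / N_COMPUTERS / 24

print(f"one simulation: {minutes_from_rate:.1f} min")
print(f"total compute: {total_hours:.0f} h")
print(f"wall clock on {N_COMPUTERS} machines: {days_in_parallel:.1f} days")
```

Under those assumptions the batch fits inside the two-week window with roughly a week of slack.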

The current logging capability is fairly rudimentary; I've got about 40 columns of data, and they're written to a tab-delimited spreadsheet with an .xls format. This works fine for individual simulations, but would be quite cumbersome to deal with if I had over 20,000 of them. I'm thinking this needs to be done with some sort of relational database, but my experience with databases is very limited, especially so when it comes to LabVIEW. Here are my questions:

- Should I have some sort of master-slave setup where one computer (probably the Pi) keeps track of which simulations are complete, which are currently running, and which haven't run yet? The slave computers would ask for simulation parameters, and the Pi would give them to it.

- How should I take care of the database? Each simulation is about 500kB in .xls format, so that's about 5GB of data all told. Should slave computers sync it every so often to take care of redundancy?

- How can I slim down my memory + disk I/O overhead? How do I find out which pieces of my application take the most of these?

- Do you have any suggestions for setting up relational databases/computing clusters with LabVIEW?

I've attached a picture of my logging setup + overall application structure. It's a state machine with an event structure for interrupts.
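The bookkeeping described in the first question (which simulations are complete, running, or not yet started) can be sketched in a few lines. This is only an in-memory illustration in Python, not LabVIEW code and not a networked service; the `Coordinator` class, its method names, and the worker names are all hypothetical.

```python
from collections import deque
from typing import Dict, Optional, Set


class Coordinator:
    """Tracks which simulation IDs are pending, running, or done."""

    def __init__(self, n_simulations: int) -> None:
        self.pending = deque(range(n_simulations))
        self.running: Dict[int, str] = {}  # simulation ID -> worker name
        self.done: Set[int] = set()

    def request(self, worker: str) -> Optional[int]:
        """Hand the next pending simulation to a worker, or None if none remain."""
        if not self.pending:
            return None
        sim_id = self.pending.popleft()
        self.running[sim_id] = worker
        return sim_id

    def complete(self, sim_id: int) -> None:
        """Record a finished simulation."""
        del self.running[sim_id]
        self.done.add(sim_id)

    def requeue(self, worker: str) -> None:
        """Put a failed worker's unfinished simulations back in the queue."""
        for sim_id in [s for s, w in self.running.items() if w == worker]:
            del self.running[sim_id]
            self.pending.appendleft(sim_id)


coordinator = Coordinator(n_simulations=4)
first = coordinator.request("pc-1")
second = coordinator.request("pc-2")
coordinator.complete(first)
coordinator.requeue("pc-2")  # pretend pc-2 crashed; its job is handed out again
print(list(coordinator.pending), coordinator.running, coordinator.done)
```

Having workers pull jobs, rather than assigning a fixed share to each machine up front, means a slower machine simply takes fewer simulations.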


09-27-2015 12:37 PM - edited 09-27-2015 01:00 PM


- There is really no way to comment based on the sparse information, but I am surprised to see stacked sequences. The "community" here considers that a sign of beginner code. You'd better have a very good reason for that. 😄

- Why is there a 1ms timeout event AND a 10ms wait? Doesn't that slow you down significantly?

- Why does the event structure have tunnels that use the default in some cases?

- What does the "simulation" involve? Is the code optimized for speed? Sometimes improving the code can give you orders of magnitude in speedup while adding distributed computing nodes will only give you a proportional speedup in the best case.

- If logging to a formatted text (or Excel) file is a bottleneck, I would strongly recommend using binary files instead. They can be read and written much faster and, if structured correctly, any partial data can be accessed directly. Binary files are typically also smaller. You could write some offline code that converts the final binary data to a spreadsheet if really needed.

- "Write to spreadsheet file" is a high-level tool that opens and closes the file with each call. It is much more efficient to use the low-level file functions and keep the file open for the duration of the execution.

- Your gigantic Build Array with all these string diagram constants could be replaced with a few string array diagram constants and a few small Build Array nodes. You might even be able to use a simple formatting statement and just write a single line to the file using plain file I/O. No need to create an array just to basically write a long string.

- In terms of code optimization, look for inplaceness and new memory allocations. Keep the front panel of all subVIs closed. Disable debugging.

- How is the CPU consumption during execution? How is the memory use as a function of execution time?
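The two logging suggestions above (keep the file open, and write each line with a single formatting statement) look roughly like this outside LabVIEW. This is a Python sketch for illustration only; the column names, sample values, and file name are made up.

```python
import os
import tempfile

HEADER = ["time_h", "level", "flow_rate"]  # hypothetical column names


def log_run(path, rows):
    """Open the log once, then write one tab-delimited line per log cycle."""
    with open(path, "w", encoding="ascii", newline="") as log:
        log.write("\t".join(HEADER) + "\n")
        for time_h, level, flow_rate in rows:
            # One format statement produces the whole line; no array is built.
            log.write(f"{time_h:.3f}\t{level:.6g}\t{flow_rate:.6g}\n")


rows = [(0.0, 1.25, 0.5), (0.1, 1.3, 0.48)]  # made-up sample values
path = os.path.join(tempfile.mkdtemp(), "sim_0001.txt")
log_run(path, rows)

with open(path, encoding="ascii") as log:
    lines = log.read().splitlines()
print(lines)
```

The file is opened and closed once per run instead of once per log cycle, which is the overhead the high-level spreadsheet VI adds on every call.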

09-27-2015 12:57 PM


Good catch! The stacked sequence is purely for timing, so I can see if certain misbehaving VIs are taking too long to execute. I've got such a setup on everything involving some sort of disk I/O + heavy computation (the RK4 simulation VI that I use). The 1ms Timeout is there to just go ahead and run the state machine should the user not be trying to interact in some way (pausing or stopping the simulation). The 10ms in the While loop is something that's left over from some "LV best practices" I read about a while ago (it was something along the lines of, never make a while loop without a wait, for memory purposes). Both could probably be taken out.

The write to spreadsheet VI is something I started using a long time ago, and it's worked well so far; only takes 1-5ms per log cycle. However, I would be really interested to slim this down where possible.

The simulation involves solving a coupled set of differential equations using the built-in RK4 VI. I let this run for 10 minutes at a time (h=.1) before feeding the updated value into the controller, which in turn generates flow rates that get fed into the system of DEs. 10 minutes' worth of simulation time is usually 200-300ms of real time.

Binary files meaning just storing numbers and text directly as ASCII instead of using a file format? Is this faster than using a database? Also, would I just as easily be able to query per-simulation and overall for statistics?

09-27-2015 01:03 PM - edited 09-27-2015 01:03 PM


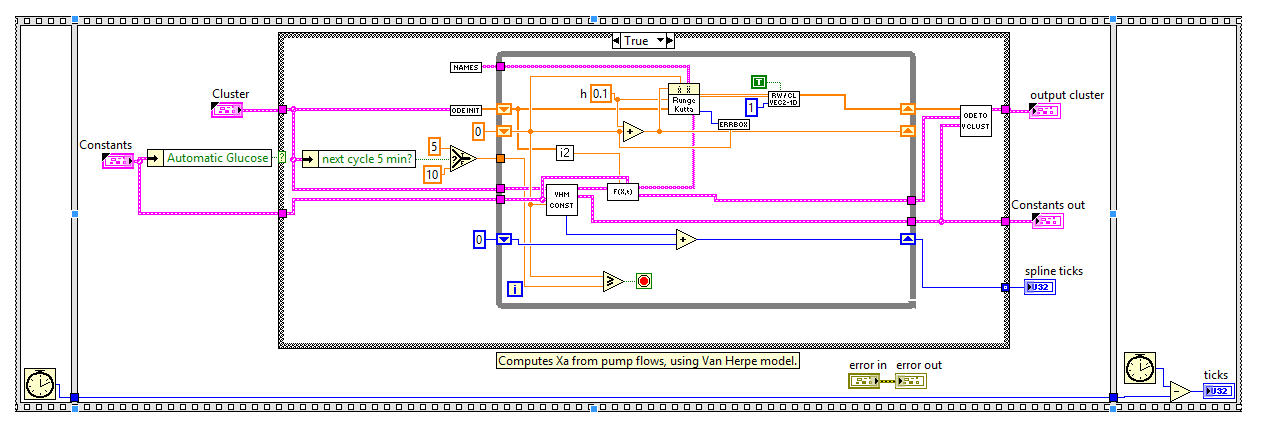

Here's the simulation portion of my application:

09-27-2015 01:16 PM


@ijustlovemath wrote:

> Binary files meaning just storing numbers and text directly as ASCII instead of using a file format? Is this faster than using a database? Also, would I just as easily be able to query per-simulation and overall for statistics?

No, ASCII is an encoding scheme that assigns certain text characters to certain bit patterns. Binary files have nothing to do with ASCII. Each of the 256 possible bytes has equal rights! Leaving out details such as endianness, etc, numbers are stored flat exactly as they exist in memory (e.g. 8 bytes per DBL, 4 bytes per I32, etc.). This is extremely fast.

In contrast, writing formatted numbers to a spreadsheet limits you to a small subset of bytes (the ones representing the characters 0..9 and some delimiters!). Your values take up much more room, and you lose precision because of truncation to a certain number of decimal digits and the inherent inability to exactly represent binary data in decimal. You cannot accurately predict how many bytes a value will take, so the arrangement in the file is typically ragged. Formatting operations are also computationally expensive.
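To make the size and precision points concrete, here is a small Python sketch using the standard `struct` module. The byte order chosen here (little-endian) and the sample values are arbitrary illustrative choices.

```python
import struct

value = 0.1
count = 123456

# "<d" is a little-endian 8-byte double, "<i" a little-endian 4-byte integer.
packed_double = struct.pack("<d", value)
packed_int = struct.pack("<i", count)
print(len(packed_double), len(packed_int))

# The binary round trip gives back exactly the same double.
round_trip = struct.unpack("<d", packed_double)[0]
print(round_trip == value)

# A value formatted to six decimal digits does not survive the round trip.
third = 1.0 / 3.0
as_text = f"{third:.6f}"
print(as_text, len(as_text), float(as_text) == third)
```

Every double occupies the same 8 bytes regardless of its value, which is what makes the position of any record in a binary file predictable.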

09-27-2015 01:17 PM


Ah I see! That seems very efficient. Would that fit into my use case? Having 8k binary files still seems very cumbersome.

09-27-2015 01:25 PM


@ijustlovemath wrote:

> Here's the simulation portion of my application:

- How often does the output of the [Names] subVI change? If they don't change for the duration of the inner loop, that call belongs before the loop. One call is sufficient.

- Do you know if the code is all reentrant (except for some minor critical sections)? Can you run several simulations in parallel on a multicore machine?

- The "index array" seems to be dead code.

- Do you really need that array indicator in the inner loop?

- Debugging does not seem to be disabled yet.

09-27-2015 01:26 PM - edited 09-27-2015 01:27 PM


@ijustlovemath wrote:

> Ah I see! That seems very efficient. Would that fit into my use case? Having 8k binary files still seems very cumbersome.

8k is the same as you have now. However the files will be smaller.

09-27-2015 01:29 PM


@ijustlovemath wrote:

> Good catch! The stacked sequence is purely for timing, so I can see if certain misbehaving VIs are taking too long to execute. I've got such a setup on everything involving some sort of disk I/O + heavy computation (the RK4 simulation VI that I use). The 1ms Timeout is there to just go ahead and run the state machine should the user not be trying to interact in some way (pausing or stopping the simulation). The 10ms in the While loop is something that's left over from some "LV best practices" I read about a while ago (it was something along the lines of, never make a while loop without a wait, for memory purposes). Both could probably be taken out.

If the "0" case is just a Wait VI, just remove the Stacked Sequence and combine all of the functions in a simple Frame. Indeed, if this is the entire sub-VI, get rid of frames altogether and just use a single Wait function (if you really need it).

> The write to spreadsheet VI is something I started using a long time ago, and it's worked well so far; only takes 1-5ms per log cycle. However, I would be really interested to slim this down where possible.

It may be a mistake to give this non-Excel file an extension of .xls, as it is more closely related to a comma-separated values (.csv) file (which, admittedly, Excel can read and usually "captures" the .csv icon).

> The simulation involves solving a coupled set of differential equations using the built-in RK4 VI. I let this run for 10 minutes at a time (h=.1) before feeding the updated value into the controller, which in turn generates flow rates that get fed into the system of DEs. 10 minutes' worth of simulation time is usually 200-300ms of real time.

> Binary files meaning just storing numbers and text directly as ASCII instead of using a file format? Is this faster than using a database? Also, would I just as easily be able to query per-simulation and overall for statistics?

Binary files means that the data are stored, without formatting, just as they are represented in memory (which is why it is so fast). Thus floating point numbers are stored as 4, 8, or 16 byte quantities, strings (text) are stored as strings, integers are stored as 1, 2, 4, or 8 byte quantities (the number 0 will be 1 to 8 bytes of all zero bits), etc. The "trick" with binary is you have to know the exact data that are written, including (potentially) the length of strings and size of arrays, not to mention the data type "mix". [There are provisions to save string length and array size, but they can be "turned off" as well]. Because there is absolutely no formatting involved, just a "byte dump", this is about as fast as you can go. As for queries, as long as you know the format, you can read the data back and do with it what you will. If "random access" of the data is important to you (say you've got 100 "runs" of binary data, and want Run 50 without having to read runs 1 through 49), you can (in principle) build an "index" (either while you are writing the data or at post-processing time) that has the location of the beginning of each run. You could then open the file, say "Position me at run 50", and then read one run.
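The index idea can be sketched as follows. This is a Python illustration rather than LabVIEW code; the record layout (three doubles per log cycle), the made-up data, and the function names are assumptions, and an in-memory stream stands in for a real binary file.

```python
import io
import struct

RECORD = struct.Struct("<3d")  # hypothetical record: three doubles per log cycle


def write_runs(stream, runs):
    """Write each run as a plain byte dump; return (offset, n_records) per run."""
    index = []
    for records in runs:
        index.append((stream.tell(), len(records)))
        for record in records:
            stream.write(RECORD.pack(*record))
    return index


def read_run(stream, index, run_number):
    """Seek straight to one run and read only its records."""
    offset, n_records = index[run_number]
    stream.seek(offset)
    data = stream.read(n_records * RECORD.size)
    return [RECORD.unpack_from(data, i * RECORD.size) for i in range(n_records)]


# Made-up data: run k holds k + 1 records, so the runs have different lengths.
runs = [[(float(k), float(i), 0.5) for i in range(k + 1)] for k in range(100)]
stream = io.BytesIO()  # stands in for a file opened in binary mode
index = write_runs(stream, runs)

run_50 = read_run(stream, index, 50)  # run numbers are zero-based here
print(index[50], len(run_50), run_50[0])
```

The index itself would also need to be saved, for example in a small companion file, so that it survives between sessions.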

Bob Schor

09-27-2015 01:54 PM - edited 09-27-2015 01:58 PM


altenbach, nice catch on the Names VI + the unused array function.

My code is currently using default execution settings, which admittedly I've never played around with, so I don't think you can call a VI that's currently being used. That honestly has bugged me before, I didn't know you could change that! Here are my current execution settings on this VI:

Also, I refactored the sequence according to Bob's suggestion:

Also, addressing Bob's concerns: Using an XLS format was mostly instinctual. Logging is one of the oldest pieces of this application, written when I was still very new to LabVIEW and didn't really know of alternatives. I've used .csv successfully as well, but I noticed that because I use a \t delimiter, xls is more readily recognized in my office suite. It's strange, but it's why it's still there. Also, that Array in the log headers VI will now be going away. It's admittedly silly to do it any other way.

Thanks again for the suggestions, guys. The binary file option is appealing, however it does make me a little uneasy. Should, for some reason, the files I create not match the spec I have in mind for them, I may have to run the simulations all over again to get that data back into a readable format. Is this not a great application for something like SQLite?
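For reference, here is roughly what the SQLite option could look like, sketched with Python's standard `sqlite3` module rather than a LabVIEW database toolkit. The table name, column names, and rows are hypothetical stand-ins for the real 40 columns.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # a file path would be used for real data
conn.execute(
    "CREATE TABLE log (sim_id INTEGER, time_h REAL, level REAL, flow_rate REAL)"
)

# Made-up rows: (simulation ID, simulation time, two logged values).
rows = [
    (1, 0.0, 1.0, 0.50),
    (1, 0.1, 2.0, 0.25),
    (2, 0.0, 3.0, 0.75),
    (2, 0.1, 5.0, 0.50),
]
with conn:  # commits the whole batch as one transaction
    conn.executemany("INSERT INTO log VALUES (?, ?, ?, ?)", rows)

# Per-simulation statistics.
per_simulation = conn.execute(
    "SELECT sim_id, AVG(level), MAX(flow_rate) FROM log "
    "GROUP BY sim_id ORDER BY sim_id"
).fetchall()

# Overall statistics across every simulation.
overall = conn.execute("SELECT COUNT(*), AVG(level) FROM log").fetchone()

print(per_simulation)
print(overall)
```

Because the table definition is stored inside the database file, the data stays readable even if the layout the writer had in mind is later forgotten, which speaks to the worry about mismatched binary specs.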