Generate and Write unicode characters to file

Solved! 11-06-2014 09:28 AM

The generated characters look OK (up to 0x00FF), but after writing them to a file, the characters and their values are different. Also, the characters above 0x00FF do not come out properly.

Any idea?

11-06-2014 09:46 AM

When you do the conversion to U8, you guarantee that no value above 0x00FF can get to the file!

If you need multi-byte characters, you need to use multiple U8s.

Lynn
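For readers who want to see the point above in text form, here is a minimal Python sketch (not LabVIEW, and not part of the original post). It assumes the U8 conversion keeps only the low 8 bits; whatever the exact conversion rule, a single byte cannot hold more than 0xFF. The function names `low_byte` and `split_u16` are made up for illustration.

```python
from typing import Tuple


def low_byte(code_point: int) -> int:
    """Model a conversion to 8 bits by keeping only the low byte (an assumption)."""
    return code_point & 0xFF


def split_u16(code_point: int) -> Tuple[int, int]:
    """Split a 16-bit value into (high byte, low byte) so nothing is lost."""
    if not 0 <= code_point <= 0xFFFF:
        raise ValueError("expected a 16-bit value")
    return (code_point >> 8) & 0xFF, code_point & 0xFF


value = 0x0142  # U+0142, a character above 0x00FF
print(hex(low_byte(value)))                 # the high byte 0x01 is gone
print([hex(b) for b in split_u16(value)])   # both bytes survive
```

Which of the two bytes is written first is a separate question (byte order) and depends on the encoding the reader of the file expects.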

11-06-2014 12:07 PM

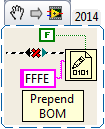

The byte order mark was missing when writing the file:

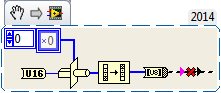

But for characters above 0xFF I tried this option, and it is not working.

Is there any other option?

11-06-2014 12:36 PM

What do you mean by "...is not working"? The code works. It produces a string with the same bytes, reversed, as the number sent to the U16 conversion.

Please specify a value of the input, and what you expect to get from the output.

Lynn
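The "same bytes, reversed" behaviour described above is what a byte-order swap looks like. As a rough illustration in Python (an analogy only, since the diagram in the screenshot is not reproduced here), packing a 16-bit value big-endian versus little-endian gives the same two bytes in opposite order, and the little-endian form is what a UTF-16LE reader expects. The helper name `u16_bytes` is hypothetical.

```python
import struct


def u16_bytes(value: int, little_endian: bool) -> bytes:
    """Pack a 16-bit unsigned value as two bytes in the requested byte order."""
    fmt = "<H" if little_endian else ">H"
    return struct.pack(fmt, value)


value = 0x0142  # U+0142
big = u16_bytes(value, little_endian=False)    # high byte first
little = u16_bytes(value, little_endian=True)  # low byte first

# The two forms are byte-for-byte reversals of each other.
print(big.hex(), little.hex())

# Only the little-endian form decodes to U+0142 as UTF-16LE.
print(little.decode("utf-16-le") == "\u0142")
```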

11-06-2014 01:05 PM

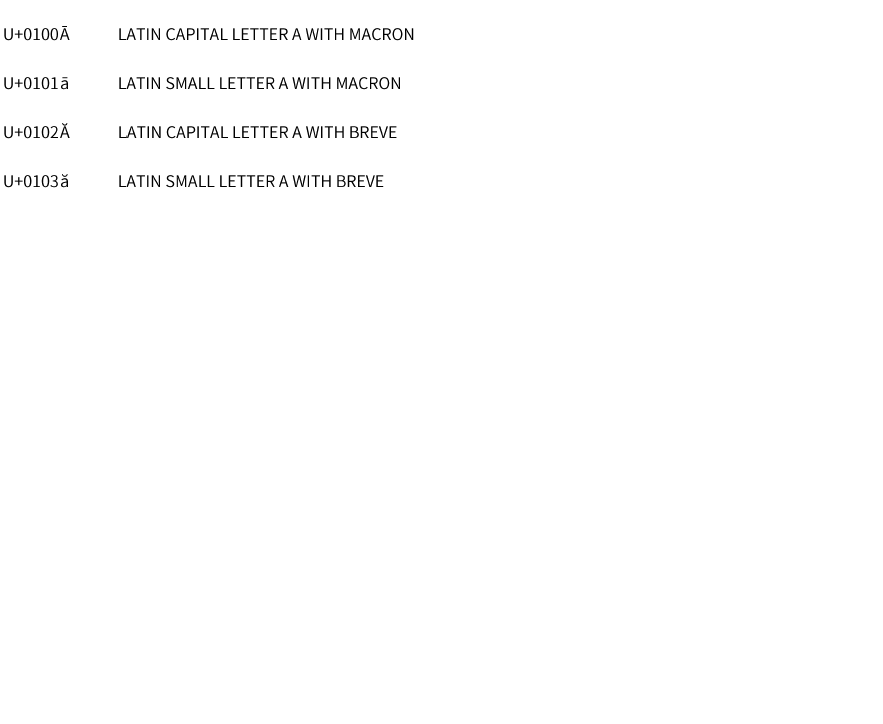

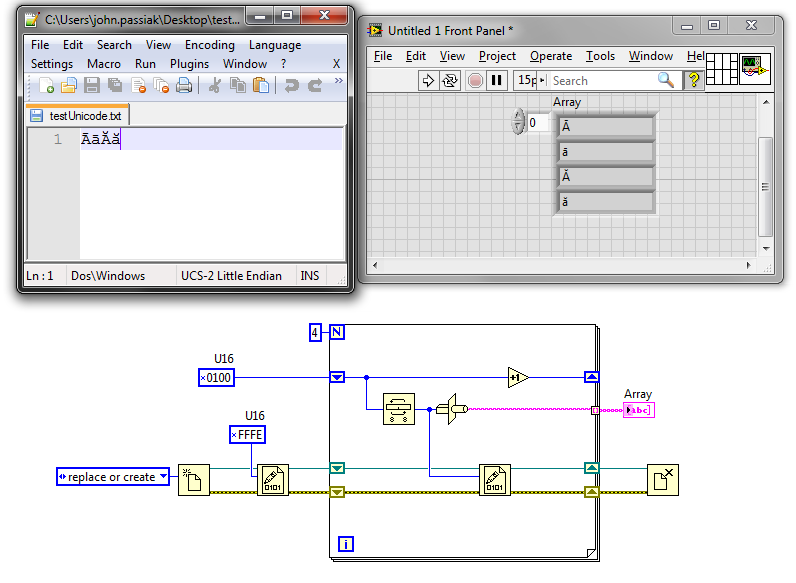

I am still not able to generate these characters.

11-06-2014 02:17 PM

Is this file you're writing going to be read as a text file that has unicode in it? If it is, you have to put a Unicode header in front of your data.

(Mid-Level minion.)

My support system ensures that I don't look totally incompetent.

Proud to say that I've progressed beyond knowing just enough to be dangerous. I now know enough to know that I have no clue about anything at all.

Humble author of the CLAD Nugget.
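As a sketch of what "a Unicode header in front of your data" can mean, the Python below writes the UTF-16 little-endian byte order mark (bytes FF FE) followed by the text encoded as UTF-16LE. This assumes the reader of the file expects UTF-16LE; the function name `write_utf16le_with_bom` and the file name are invented for the example.

```python
import codecs
import os
import tempfile


def write_utf16le_with_bom(path: str, text: str) -> None:
    """Write text as UTF-16LE, preceded by the little-endian byte order mark."""
    with open(path, "wb") as f:
        f.write(codecs.BOM_UTF16_LE)        # b"\xff\xfe"
        f.write(text.encode("utf-16-le"))   # two bytes per BMP character


# Small demo in a temporary directory.
demo_path = os.path.join(tempfile.mkdtemp(), "unicode_demo.txt")
write_utf16le_with_bom(demo_path, "A\u0142")

with open(demo_path, "rb") as f:
    print(f.read().hex())  # BOM first, then the encoded characters
```

Python's generic `"utf-16"` codec reads the mark and picks the byte order from it, so the file round-trips with `open(demo_path, encoding="utf-16")`.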

11-06-2014 02:26 PM - edited 11-06-2014 02:37 PM

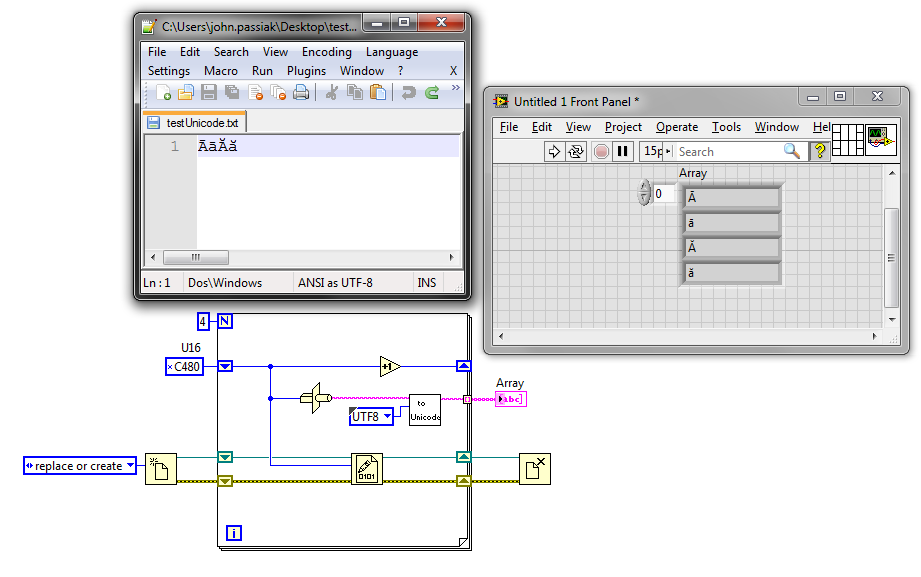

This little snippet produces a file that, when opened in WordPad, contains the text shown in the constant on the BD.

"Should be" isn't "Is" -Jay

11-06-2014 04:31 PM - edited 11-06-2014 05:01 PM

You should probably give this page a thorough read if you are intending on using Unicode in your application. Here is a relevant excerpt:

ASCII technically only defines a 7-bit value and can accordingly represent 128 different characters including control characters such as newline (0x0A) and carriage return (0x0D). However ASCII characters in most applications including LabVIEW are stored as 8-bit values which can represent 256 different characters. The additional 128 characters in this extended ASCII range are defined by the operating system code page aka "Language for non-Unicode Programs". For example, on a Western system, Windows defaults to the character set defined by the Windows-1252 code page. Windows-1252 is an extension of another commonly used encoding called ISO-8859-1.

Windows-1252 gives you characters up to 0xFF (ÿ) but nothing wider than 8 bits (e.g. no 0x0100). By default, LabVIEW supports only these 8-bit characters and uses multi-byte character strings, whose interpretation depends on the code page currently selected in the operating system. You may turn on Unicode by following the instructions in my first link (it is unsupported and can be a bit buggy on occasion...) to get support for multibyte Unicode characters that are not in the OS code page.
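To illustrate the code page point outside LabVIEW, here is a small Python sketch. It uses Python's built-in `cp1252` and `cp1251` codecs as stand-ins for two OS code pages (the choice of Windows-1251 as the second one is mine, purely for contrast), and the helper `fits_in_cp1252` is a made-up name.

```python
def fits_in_cp1252(ch: str) -> bool:
    """Return True if the character has a single-byte Windows-1252 encoding."""
    try:
        ch.encode("cp1252")
        return True
    except UnicodeEncodeError:
        return False


raw = bytes([0xE9])  # one byte in the extended (0x80-0xFF) range

# The same byte is a different character under a different code page.
print(raw.decode("cp1252"))  # Western European interpretation
print(raw.decode("cp1251"))  # Cyrillic interpretation

print(fits_in_cp1252("\u00ff"))  # U+00FF is in Windows-1252
print(fits_in_cp1252("\u0100"))  # U+0100 is not
```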

Unicode has multiple encodings, and the raw bits for a given character depend on the encoding used. LabVIEW's limited Unicode support appears to use UTF-16LE (little-endian) encoding for anything displayed within the UI. So to get the characters to show up on the UI, you must enable Unicode (instructions shown in my first link) and write the proper UTF-16LE codes.

UTF-8 is more common and thus easier to work with outside of LabVIEW (e.g. my version of Notepad++ evidently does not support UTF-16LE). I usually end up using UTF-8 encoded strings for files and converting them to UTF-16LE for display in LabVIEW.

The unicode library in my first link has the necessary subVIs for converting between UTF-8 and "Unicode" (i.e. UTF-16LE).

Best Regards,
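The UTF-8 to UTF-16LE round trip described above can be sketched in Python as follows. This is not the LabVIEW Unicode library mentioned in the post, only an illustration of the same conversion using Python's standard codecs; the function names are hypothetical.

```python
def utf8_to_utf16le(data: bytes) -> bytes:
    """Re-encode UTF-8 bytes (e.g. read from a file) as UTF-16LE bytes."""
    return data.decode("utf-8").encode("utf-16-le")


def utf16le_to_utf8(data: bytes) -> bytes:
    """Re-encode UTF-16LE bytes (e.g. from a UI string) as UTF-8 bytes."""
    return data.decode("utf-16-le").encode("utf-8")


utf8 = "\u0142".encode("utf-8")    # U+0142 takes two bytes in UTF-8
utf16 = utf8_to_utf16le(utf8)      # and two different bytes in UTF-16LE

print(utf8.hex(), utf16.hex())
print(utf16le_to_utf8(utf16) == utf8)  # the round trip is lossless
```

Neither function adds or strips a byte order mark, so any BOM handling has to happen separately.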

11-06-2014 05:10 PM

Well... I learned something as well typing all of that out...

I just had neglected to write the byte order mark (BOM) when using the LabVIEW UTF-16LE. When I do this the file works in Notepad or Notepad++ (which detects the file format as UCS-2 Little Endian).

I still like UTF-8 personally as the BOM is not required.

Best Regards,
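Editors that auto-detect the file format typically look at the first few bytes for a byte order mark. A minimal sketch of that check in Python, assuming only the UTF-8 and UTF-16 marks matter (UTF-32 is ignored here), with `sniff_bom` as an invented helper name:

```python
import codecs
from typing import Optional


def sniff_bom(data: bytes) -> Optional[str]:
    """Guess an encoding from a leading byte order mark, or return None."""
    if data.startswith(codecs.BOM_UTF8):      # EF BB BF
        return "utf-8-sig"
    if data.startswith(codecs.BOM_UTF16_LE):  # FF FE
        return "utf-16-le"
    if data.startswith(codecs.BOM_UTF16_BE):  # FE FF
        return "utf-16-be"
    return None  # no mark: the reader has to guess or be told


print(sniff_bom(b"\xff\xfeA\x00"))  # little-endian mark present
print(sniff_bom(b"A\x00"))          # same text without the mark
```

Without the mark, the second input is just two bytes that a reader could equally well take for 8-bit text, which matches the behaviour described in the post.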

11-06-2014 06:29 PM

I've used the undocumented LabVIEW Unicode support quite often. They work fairly well, but they're kind of twitchy. If you can tame the beasts, they will serve you well.
