FPGA Target and Host synchronizing

06-29-2015 07:41 AM

Hello! I am working on my faculty project and I need to acquire data with an NI sbRIO-9636. How do I choose the sampling period of the acquisition? I am following this example from the NI website:

http://www.ni.com/tutorial/4534/en/

I can't figure out which sampling period to set in Host.vi and which in Target.vi to get a proper acquisition. Also, the Host VI in this example uses a 10 ms sampling period, but what is the period in the Target VI?

For example, I would like a sampling period of 10 milliseconds. How should I configure the loop time in Host.vi and in Target.vi?

Can anyone please help me?

06-29-2015 08:31 AM - edited 06-29-2015 08:31 AM

That example explains everything you need to answer your question. The sample rate on the FPGA target is the rate at which you acquire samples. You transfer multiple samples at a time from the FPGA to the host using a DMA FIFO, so the loop rate on the host is less important, as long as it's fast enough to prevent the DMA FIFO from filling.
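To illustrate why the host loop rate matters less than the FPGA sample rate, here is a rough Python simulation (not LabVIEW code). It assumes the host drains every available element on each iteration and that a sample arriving at a full FIFO is lost. The function name `simulate_fifo` and the FIFO depth of 1023 elements are made up for the illustration; the real depth is whatever you configured in your project.

```python
from collections import deque

def simulate_fifo(sample_period_us, host_period_us, fifo_depth, duration_us):
    """Simulate FPGA writes and host batch reads.

    Returns (samples_read_by_host, samples_lost_on_full_fifo).
    """
    fifo = deque()
    read = 0
    lost = 0
    next_host_read = host_period_us
    for t in range(sample_period_us, duration_us + 1, sample_period_us):
        # FPGA side: one new sample every sample period.
        if len(fifo) < fifo_depth:
            fifo.append(t)
        else:
            lost += 1  # assumption: a write to a full FIFO drops the sample
        # Host side: once per host period, read everything that is waiting.
        while t >= next_host_read:
            read += len(fifo)
            fifo.clear()
            next_host_read += host_period_us
    return read, lost

# 25 us sampling, 10 ms host loop, hypothetical 1023-element FIFO, 100 ms run
print(simulate_fifo(25, 10_000, 1023, 100_000))
# Same sampling, but a much slower 50 ms host loop
print(simulate_fifo(25, 50_000, 1023, 100_000))
```

With the 10 ms host loop nothing is lost, because only 400 samples pile up between reads. With the 50 ms host loop about 2000 samples arrive between reads, which is more than the assumed depth, so samples start getting lost.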

06-29-2015 09:17 AM

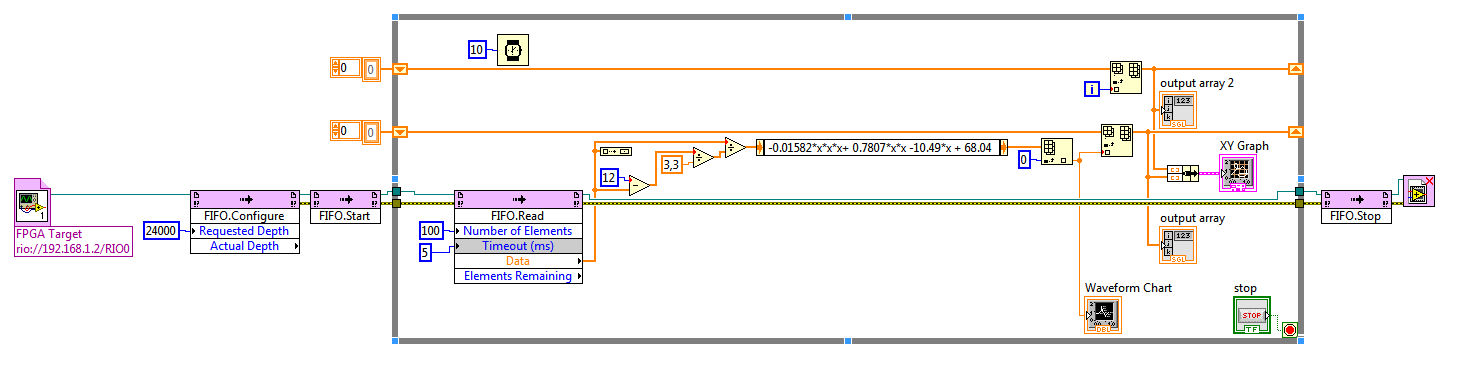

I will try to explain what actually happened. I acquired data for about 25 or 30 minutes. I did not put a loop timer in the host loop, and I added an XY Graph to see what I was acquiring. The shape of the response was OK, but I think the number of samples I recorded was wrong. The attached image shows my Target VI.

I set the Count control to 1000 ticks. After you told me that this is the sampling interval, I calculated it as 1000 × (1 / 40 000 000) s = 25 microseconds, because the onboard clock is 40 MHz. So with a sampling interval of 25 microseconds, the acquisition time should be the number of samples × the sampling interval.

That gives 237332 × 25 microseconds (about 5.9 seconds), which is not the time I spent recording data.

When I put a Wait in the Host VI, as in my second screenshot, which gives a loop time of 10 milliseconds, things became a bit different.

The number of samples I got was 160657. I calculated:

acquisition time = number of samples × 10 × 10^-3 seconds = 1606.57 seconds, which is about 26.8 minutes. What am I doing wrong?
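The two estimates above can be reproduced with a few lines of Python. This is only the arithmetic from this post, using the 40 MHz clock and the sample counts quoted here; the function names are my own.

```python
CLOCK_HZ = 40_000_000  # onboard FPGA clock, as stated in the post

def sample_interval_s(count_ticks, clock_hz=CLOCK_HZ):
    """Sampling interval in seconds for a loop timer count given in ticks."""
    return count_ticks / clock_hz

def duration_s(num_samples, interval_s):
    """Elapsed time if every sample is exactly one interval apart."""
    return num_samples * interval_s

# FPGA-side estimate: 1000 ticks per sample, 237332 samples on the graph
fpga_interval = sample_interval_s(1000)
print(fpga_interval, duration_s(237332, fpga_interval))

# Host-side estimate: one sample per 10 ms host iteration, 160657 samples
print(duration_s(160657, 10e-3) / 60, "minutes")
```

The first estimate comes out at a few seconds and the second at roughly the real recording time, which suggests the number of points on the graph follows the host loop rate rather than the FPGA sample rate.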

06-29-2015 10:12 AM

OK, so you are taking a sample every 25 µs. On the host side, you have a 10 ms delay. That means 400 samples arrive during each host loop iteration, so you should be reading 400 samples per iteration. Your DMA FIFO is likely filling up. On top of that, you are only using 1 sample out of the 100 you collected, so you are throwing away 99% of your data. So maybe we should take a step back.

What exactly are your requirements? What sample rate do you actually need?
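As a rough check of those numbers, here is a small Python sketch. It assumes the host reads 100 elements per iteration (my reading of the screenshot) and uses a hypothetical FIFO depth of 3000 elements purely for illustration; the helper names are made up.

```python
import math

def samples_per_host_iteration(sample_interval_us, host_period_us):
    """How many samples the FPGA produces during one host loop iteration."""
    return host_period_us // sample_interval_us

def iterations_until_full(fifo_depth, produced_per_iter, read_per_iter):
    """Host iterations before the backlog reaches fifo_depth.

    Returns None if the host keeps up and the FIFO never fills.
    """
    growth = produced_per_iter - read_per_iter
    if growth <= 0:
        return None
    return math.ceil(fifo_depth / growth)

produced = samples_per_host_iteration(25, 10_000)   # 25 us sampling, 10 ms host loop
print(produced)
# Assumed: host reads 100 elements per iteration, FIFO depth of 3000 elements
print(iterations_until_full(3000, produced, 100))
```

Under these assumptions the backlog grows by 300 elements per iteration, so the FIFO is full after only a handful of host iterations. Reading as many elements as are produced per iteration (400 here) keeps the backlog from growing.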

06-29-2015 10:55 AM

If I understood correctly:

Here I am using only 1 sample out of 100 and sending that one to the graph. What happens to the 99 unused samples? Are they simply thrown away, and in the next host loop iteration the host takes another 100 samples, again uses only one, discards the rest, and so on?

Also, the FIFO buffer is filling up (though I suppose it has not overflowed yet), so I suppose the data I saved reaches the host much later, which means it is not a real-time acquisition. Am I right about this?

My requirements:

I want to take one sample every 10 milliseconds, and I want to know how to increase or decrease the sampling period (so that I do not throw 99% of my data away).
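For reference, the tick count that corresponds to a given sampling period can be worked out as below. This is plain arithmetic in Python, assuming the Target VI loop timer counts ticks of the 40 MHz clock mentioned earlier in the thread; `count_ticks` and `fits_unsigned` are names made up for this sketch.

```python
CLOCK_HZ = 40_000_000  # onboard FPGA clock from earlier in the thread

def count_ticks(period_us, clock_hz=CLOCK_HZ):
    """Loop timer count (in clock ticks) for a sampling period in microseconds."""
    return period_us * clock_hz // 1_000_000

def fits_unsigned(value, bits):
    """True if value can be stored in an unsigned integer of the given width."""
    return 0 <= value < 2 ** bits

ticks_10ms = count_ticks(10_000)   # desired period: 10 ms
print(ticks_10ms)
print(fits_unsigned(ticks_10ms, 16), fits_unsigned(ticks_10ms, 32))
```

The count for 10 ms is too large for a 16-bit control, so the Count control would need to be 32 bits wide, or the timer would need to be configured in microseconds or milliseconds instead of ticks, if that option is available on the timer in use.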