FPGA DMA performance Bitfile or VI

11-28-2012 06:43 AM - edited 11-28-2012 06:47 AM

Hi, I'm seeing a weird behaviour in my FPGA code.

We are looking at expanding the main DMA FIFO in our code, and I wanted to run some benchmarks on the throughput with different data types (U8, U16, U32, U64 and so on). I have noticed something quite unexpected.

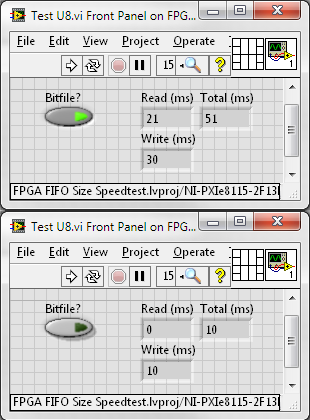

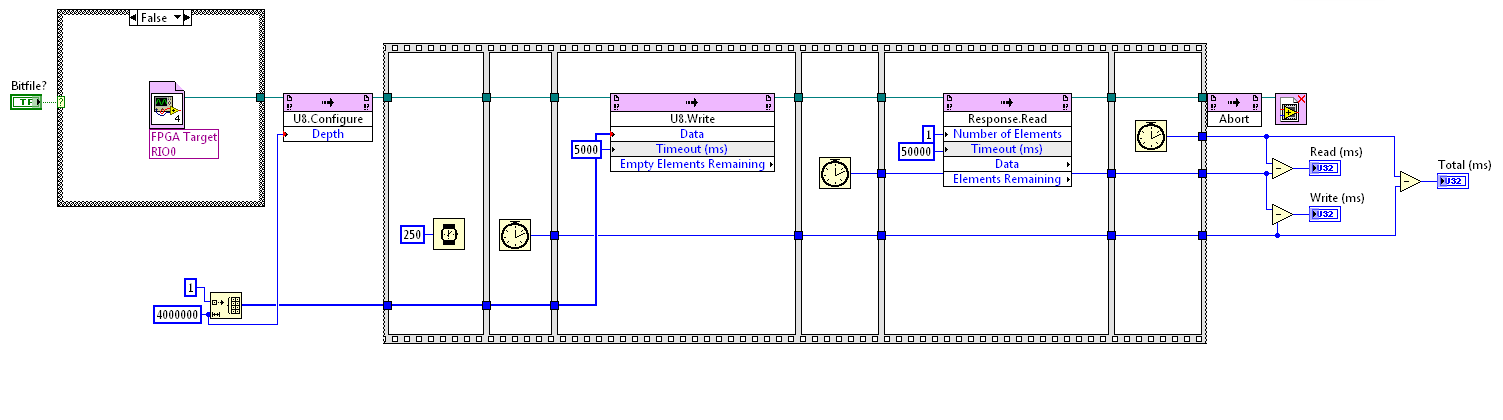

The performance of a VI (running from the RT system) depends on whether the "Open FPGA Reference" is configured to open a VI or a Bitfile. I added a 250 ms wait before any timed operations; the screenshots show the results of writing a 4M FIFO of U8 to the FPGA target.

This is using the exact same code after the "Open Reference".
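The measurement procedure in this post (a settling wait, then timing the same write call for both the VI and the Bitfile case) can be sketched in Python. This is only an illustration of the timing structure: the real code is a LabVIEW diagram, and `write_fifo` is a hypothetical stand-in for the host-side DMA FIFO write, not an NI API. Taking several samples rather than one makes it easier to tell a consistent difference from a one-off outlier.

```python
import time

def benchmark_write(write_fifo, data, settle_s=0.25, runs=5):
    """Return per-run write times in milliseconds.

    write_fifo is a hypothetical callable standing in for the host-side
    DMA FIFO write; it is not an NI API.
    """
    time.sleep(settle_s)  # settling wait before any timed operation (250 ms in the post)
    timings_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        write_fifo(data)  # identical call for both the VI and the Bitfile case
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    return timings_ms

if __name__ == "__main__":
    # Dummy "write" that just copies the buffer, standing in for the real FIFO.
    payload = bytearray(4 * 2**20)  # 4M U8 elements, assuming M = 2**20
    times = benchmark_write(lambda d: bytes(d), payload)
    print(f"min {min(times):.2f} ms, max {max(times):.2f} ms")
```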

Any ideas as to why this would be the case? It's super reproducible in my code.

Shane.

PS I'm using LV 2011 SP1

Tags: fpga

11-28-2012 06:55 AM

Oh, I think my VI version might be throwing errors... I need to wait for it to compile before I can confirm. D'OH!

11-28-2012 07:20 AM

Nope, I'm still seeing differences.

For sending 8M U8s per DMA I'm seeing write times of 42 ms for VI and 81 ms for Bitfile. Read times are the same.

Weird.

11-28-2012 07:31 AM

Send times for 8M U16 are 60 ms for Bitfile and 23 ms for VI.
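Converting the reported times into effective throughput makes the VI/Bitfile gap easier to compare across data types. This small Python sketch uses only the numbers posted in this thread; it assumes "8M" means 8 * 2^20 elements, which the posts don't state.

```python
# Reported write times from this thread (ms), for 8M elements per DMA write.
# Assumption: "8M" means 8 * 2**20 elements; the thread does not say.
ELEMENTS = 8 * 2**20
BYTES_PER_ELEMENT = {"U8": 1, "U16": 2}

reported_ms = {
    ("U8", "VI"): 42.0,
    ("U8", "Bitfile"): 81.0,
    ("U16", "VI"): 23.0,
    ("U16", "Bitfile"): 60.0,
}

def throughput_mib_s(dtype, ms):
    """Effective throughput in MiB/s for one write of ELEMENTS values."""
    total_bytes = ELEMENTS * BYTES_PER_ELEMENT[dtype]
    return total_bytes / (ms / 1000.0) / 2**20

for (dtype, mode), ms in reported_ms.items():
    print(f"{dtype:4} {mode:8} {ms:5.1f} ms -> {throughput_mib_s(dtype, ms):7.1f} MiB/s")
```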

11-28-2012 07:53 AM

I found the source of the weirdness.

If I attach a constant to the "Open Reference" node with my Bitfile, the format of the constant makes a difference in the timing.

If I use a format like "rio://192.168.0.X/RIO0", it takes longer than if I use an alias defined simply as "RIO0".

I didn't say it's less weird, I just said I found the cause. Is there some kind of extra work being done AFTER opening the reference that depends on the format of the location specifier? It seems like this should not matter in the slightest, but what do I know?

Shane.

Tags: fpga

11-28-2012 07:56 AM - edited 11-28-2012 08:06 AM

Oh man. My Alias was pointing to a different chassis. Different hardware was the reason for the different timings.

Ignore me, I'm full of useless information recently.

Bye. I need a coffee.

Shane.

PS: No, coffee didn't help. The differences are still there if I compare Bitfiles opened with "RIO0" against Bitfiles opened with "rio://192.168.0.X/RIO0"-format device names.

11-28-2012 09:26 AM

I'm having a little difficulty following which cases you're looking at. I believe there are 4 possible permutations:

1. VI Mode with resource string of "RIO0"
2. VI Mode with resource string of "rio://192.168.0.X/RIO0"
3. Bitfile Mode with resource string of "RIO0"
4. Bitfile Mode with resource string of "rio://192.168.0.X/RIO0"

Have you tried each of these cases?
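The four permutations are just the cross product of two variables, which is handy when scripting a benchmark so that no case is skipped. A minimal Python sketch; the labels are taken from the list of cases, and the "X" in the IP address is left exactly as it appears in the thread.

```python
from itertools import product

# The two variables in this thread: how "Open FPGA Reference" is configured,
# and the resource string format. "X" is left as in the thread.
OPEN_MODES = ["VI", "Bitfile"]
RESOURCES = ["RIO0", "rio://192.168.0.X/RIO0"]

def benchmark_cases():
    """Return the four (case number, mode, resource) tuples, in the order listed."""
    return [
        (i, mode, resource)
        for i, (mode, resource) in enumerate(product(OPEN_MODES, RESOURCES), start=1)
    ]

for case in benchmark_cases():
    print(*case)
```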

11-28-2012 09:47 AM - edited 11-28-2012 09:47 AM

I have only tried cases 3 and 4, plus the VI method with no resource input, since the VI itself has the resource defined. Of these, the VI method and case 3 are fast, whereas case 4 is slower.

What I have also noticed is that the throughput scaling from U8 to U64 is as expected (U16 takes double the time of U8, U32 double that of U16, and so on), whereas ALL FXP values take the same time, exactly the same as U64. It seems that ALL FXP datatypes are transmitted as 64-bit values, which is kind of inefficient.
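The scaling described here fits a simple model where write time is proportional to bytes moved, with every FXP type counted as 8 bytes. The Python sketch below encodes that model; the 8-byte FXP size is an assumption taken from the observation in this post, and `fxp_overhead` compares against a hypothetical byte-packed layout, not any format the driver actually offers.

```python
# Simple model: DMA transfer time scales with the number of bytes moved.
# Assumption, based on the measurements in this post: every FXP type is
# transferred as an 8-byte value, whatever its configured word length.
BYTES_PER_ELEMENT = {"U8": 1, "U16": 2, "U32": 4, "U64": 8}
FXP_TRANSFER_BYTES = 8

def transfer_bytes(dtype, count=1):
    """Bytes moved over DMA for `count` elements of `dtype`."""
    if dtype.startswith("FXP"):
        return FXP_TRANSFER_BYTES * count
    return BYTES_PER_ELEMENT[dtype] * count

def relative_time(dtype, baseline="U8"):
    """Expected write time relative to `baseline` for the same element count."""
    return transfer_bytes(dtype) / transfer_bytes(baseline)

def fxp_overhead(word_length_bits):
    """Ratio of bytes actually moved to bytes a byte-packed FXP value would need."""
    packed_bytes = -(-word_length_bits // 8)  # ceiling division
    return FXP_TRANSFER_BYTES / packed_bytes

for t in ["U8", "U16", "U32", "U64", "FXP<+,16,8>"]:
    print(t, relative_time(t))
print("16-bit FXP overhead:", fxp_overhead(16))
```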

Shane.

11-28-2012 09:52 AM

As soon as I try the VI method with a resource control, it tells me I need to recompile for the target... I'm telling it to run on the same hardware, but now I need to recompile.

11-28-2012 10:33 AM

My guess/expectation is that if you tried cases 1 and 2, 1 would have the same performance as 3, and 2 as 4.

If that is indeed the case, I believe that is expected. "rio://..." is the format for accessing remote devices, so using that format involves at least some portion of the network stack.
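The distinction being drawn is between a bare alias ("RIO0") and a URL-style name that carries a host ("rio://192.168.0.X/RIO0"). A minimal Python sketch of that classification follows; `parse_resource` and `is_remote_resource` are hypothetical helpers that mirror the explanation in this reply, not NI's actual resource-name parsing.

```python
from urllib.parse import urlparse

def parse_resource(resource):
    """Hypothetical helper: split a resource string into (host, device).

    'rio://host/RIOn' yields the host and device name; a bare alias such
    as 'RIO0' yields (None, alias). urlparse lowercases the scheme, so
    'Rio://...' is handled too.
    """
    parsed = urlparse(resource)
    if parsed.scheme == "rio" and parsed.netloc:
        return parsed.netloc, parsed.path.lstrip("/")
    return None, resource

def is_remote_resource(resource):
    """True when the name carries a host, i.e. it goes through the network path."""
    host, _ = parse_resource(resource)
    return host is not None

for name in ["RIO0", "rio://192.168.0.X/RIO0"]:
    print(name, parse_resource(name), is_remote_resource(name))
```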

>> It seems that ALL FXP datatypes are transmitted as 64-bit which is kind of inefficient.

I believe this is as designed, and actually avoids introducing worse performance. The format for fixed point values in software is always 64-bit (you can think of arrays of these in software as being 8-byte aligned). If the transferred buffer of 64-bit values did not have this alignment, the processor would have to "manually" copy these values out of the dma buffer and into another buffer (increasing cpu usage and memory usage).
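The cost being avoided can be illustrated with a Python analogy (not LabVIEW's actual implementation). A buffer that already holds 8-byte values in the software layout can be reinterpreted in place, while a tighter layout has to be decoded element by element into a separate buffer. The 24-bit packing here is a hypothetical alternative layout chosen only for illustration.

```python
import struct

def unpack_packed_24bit(buf):
    """Decode tightly packed 3-byte little-endian signed values one by one.
    This per-element loop is the 'manual' copy into a separate buffer."""
    return [int.from_bytes(buf[i:i + 3], "little", signed=True)
            for i in range(0, len(buf), 3)]

def view_64bit(buf):
    """Reinterpret a buffer of native 8-byte integers in place (no copy)."""
    return memoryview(buf).cast("q")

values = [1, -2, 3, 123456]
packed = b"".join(v.to_bytes(3, "little", signed=True) for v in values)
aligned = bytearray(struct.pack(f"{len(values)}q", *values))  # native layout

print(unpack_packed_24bit(packed))   # decoded element by element
print(view_64bit(aligned).tolist())  # used directly, sharing the buffer
```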