DAQ fast data storage and time-filtered retrieval. Should I use Citadel?

08-24-2015 01:49 PM

Hello

I have a DAQ card that's acquiring data from 5 channels at 1 kHz, so 5000 values every second.

This will be running continuously, and I would like to store this data at the same time (measurement + timestamp).

I have read about TDMS, but the problem here (unless I missed it) is that you can't really filter data when reading it again.

The user must be able to specify a time range and select certain channels. They will then be presented with the selected channels' data within that time range.

From what I can find, it seems like Citadel is the fastest way of storing data while still being able to retrieve time-related values. Is this correct? And if so, can I use Citadel as a "stand-alone" database? All the documentation I have found so far keeps referring to the DSC Module and related processes.

Any information is welcome

Thanks,

Vincent

08-25-2015 05:00 AM

Hi Vincent,

I would perhaps have a look at the concurrent access to TDMS file.vi in the Example Finder (in the LabVIEW toolbar, Help >> Find Examples). With a few changes to put your DAQ in the top loop instead of the sine simulation, it will give you the ability to scroll through the data live.

I would also read up on TDMS and on DIAdem, which works well with TDMS files.

http://www.ni.com/white-paper/3727/en/

Hopefully that helps you

08-25-2015 02:13 PM

@VintageWillis wrote:

Hi Vincent,

I would perhaps have a look at the concurrent access to TDMS file.vi in the Example Finder (in the LabVIEW toolbar, Help >> Find Examples). With a few changes to put your DAQ in the top loop instead of the sine simulation, it will give you the ability to scroll through the data live.

I would also read up on TDMS and on DIAdem, which works well with TDMS files.

http://www.ni.com/white-paper/3727/en/

Hopefully that helps you

Hello

Thank you for the help. The problem with a TDMS file is that you cannot filter on date and time. Is that correct? I looked at the example, but there too they just use the scrollbar value and then get the 1000 values before that position.

I probably should've given some more background: there will be a web service linked to this VI. On the web service the user will choose a start and end time. LabVIEW will then use these to get all saved values within that time range and send them to the web service.

I downloaded the DSC Module trial, and the Write Trace and Read Trace VIs look like they do what I need. The only big problem I am facing now is that every second, one millisecond is skipped. When reading back this data, the gap produces a "NaN" value, which in turn produces an error when parsing the JSON.

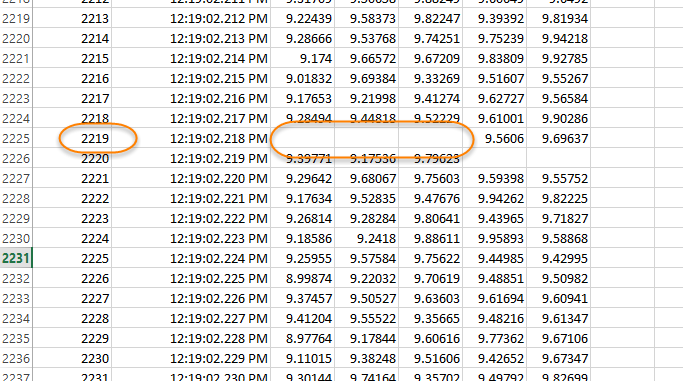

As far as I can tell, this is probably a problem with the DAQ Assistant not getting a value every millisecond. The weird thing, though, is that during a single "run" of the VI, it occurs at the exact same millisecond of every second. I have written the data to an Excel file and attached screenshots to illustrate the problem. I hope someone can help with this.

08-25-2015 03:13 PM

I don't understand your reasoning for using DSC and Citadel. You have extra licensing and slower write speeds. If you want a database, you don't need DSC. You really also need to look closer at TDMS.

08-25-2015 04:09 PM

I agree with Dennis -- inspection of your data indicates that there is probably a bug in your code (if I had to guess, which I do, since I can't see the code, I'd guess that the acquisition and the data writing are in the same loop, possibly even sequential, hence you might miss points when writing ...).

Show us the code and we can suggest "fixes".

Bob Schor

08-25-2015 04:18 PM - edited 08-25-2015 04:32 PM

So basically what I'm looking for is the fastest and most efficient way to store 5 DAQ channels, each running at 1000 samples a second. The added must-have, though, is that I have to be able to quickly and efficiently filter this data by time. I came across TDMS, but as I said before, I can't find how I would retrieve data from a TDMS file when all I have is a certain time span (e.g. get the data from 12:00 pm to 12:10 pm today).
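For a channel sampled at a constant rate, one possible way to answer a time-span query is to convert the start and end times into a sample offset and count, which a reader that accepts an offset and a count can then use. The Python sketch below only illustrates that arithmetic. It assumes that the timestamp of the first sample and the sample rate are known, and that the acquisition ran without gaps; the function name and the example dates are hypothetical.

```python
from datetime import datetime

def time_range_to_samples(t0, rate_hz, start, end, total_samples):
    """Map a [start, end) time window to (offset, count) for a fixed-rate channel.

    t0 is the timestamp of sample 0; the result is clamped to the stored data.
    """
    first = round((start - t0).total_seconds() * rate_hz)
    last = round((end - t0).total_seconds() * rate_hz)
    first = max(0, min(first, total_samples))
    last = max(first, min(last, total_samples))
    return first, last - first

# Hypothetical session: logging started at 11:00 and ran for five hours at 1 kHz.
t0 = datetime(2015, 8, 25, 11, 0, 0)
offset, count = time_range_to_samples(
    t0,
    1000.0,
    datetime(2015, 8, 25, 12, 0, 0),
    datetime(2015, 8, 25, 12, 10, 0),
    total_samples=5 * 3600 * 1000,
)
print(offset, count)  # 3600000 600000
```

If the acquisition can stop and restart, each contiguous segment would need its own start time for this mapping to stay valid.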

Looking into this, I learned about Citadel, which I read works in a manner similar to TDMS but adds the possibility to filter by time, while still being very efficient.

Please let me know if anything I'm saying here is wrong.

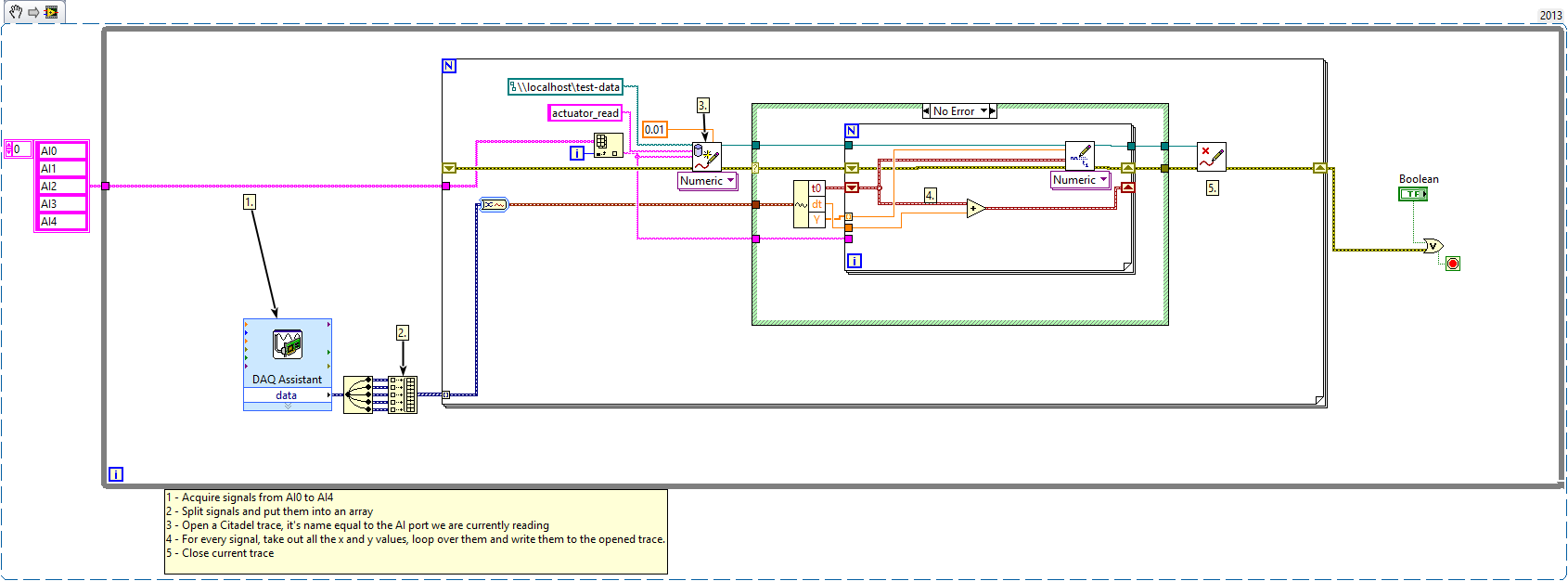

I have attached the read and write VIs I currently have. To run them, you will need a Citadel database called test-data and a simulated USB-6009 in MAX.

Oh and the time fields in the "read-citadel" vi are strings, because in the complete program this vi is part of a web service.

Thank you for taking the time to help me!

Writing the data (it is indeed sequential. I figured it was a problem with the DAQ missing a value, but didn't know what exactly I could improve). The loop only takes about 130 ms to execute, so I thought it was fast enough.

Reading the data:

08-26-2015 12:15 AM - edited 08-26-2015 12:20 AM

What you do is not efficient at all in LabVIEW. Do NOT use DAQ Assistant Express VI. For proper DAQ, use the proper DAQmx VIs!

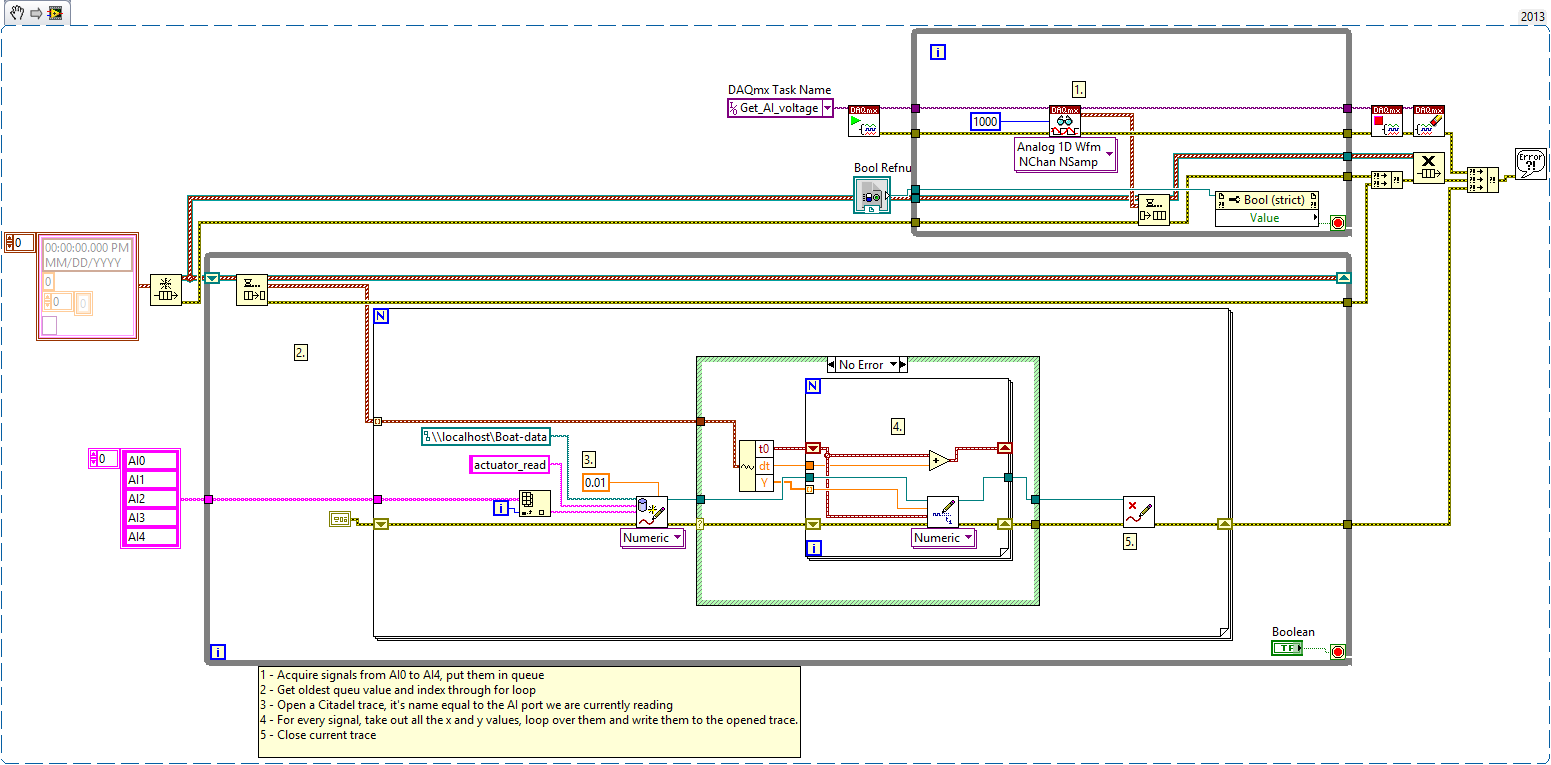

Besides, you need to DECOUPLE the DAQ, which produces the data, from the data logging. You can do this with two parallel While loops, using the Producer/Consumer pattern. In this case the data is passed from one loop to the other via a Queue. Have a look at the shipped examples in LabVIEW.

http://www.ni.com/white-paper/3023/en/

edit: I have never used Citadel file logging, but as I see in your block diagram, you open, write, and close the file on EVERY single iteration of the FOR loop, so 5 times per While loop iteration. Why? Usually you want to open a file for logging BEFORE your DAQ task starts, write data to the file during acquisition, and close the file reference at the END of the task, after your While loop. And of course, you need a producer/consumer so that any potentially time-consuming file operation (the OS decides how the file write is buffered) is separated from the DAQ loop.
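As a text-based illustration of the advice above, here is a minimal Python sketch of a producer/consumer pair connected by a queue, with the logging target created once before acquisition starts rather than on every iteration. It is not LabVIEW code: the simulated samples, the list used as the log, and all names are assumptions made for the example.

```python
import queue
import threading

SENTINEL = None  # tells the consumer that acquisition has finished

def producer(q, n_blocks, block_size):
    """Stand-in for the DAQ loop: it only acquires and enqueues, never writes."""
    for block in range(n_blocks):
        samples = [block * block_size + i for i in range(block_size)]  # simulated data
        q.put(samples)
    q.put(SENTINEL)

def consumer(q, sink):
    """Logging loop: the sink was opened once by the caller, not per iteration."""
    while True:
        samples = q.get()
        if samples is SENTINEL:
            break
        sink.extend(samples)  # stand-in for the (possibly slow) write

def run(n_blocks=10, block_size=100):
    q = queue.Queue()
    sink = []  # stand-in for a log opened before acquisition starts
    t_prod = threading.Thread(target=producer, args=(q, n_blocks, block_size))
    t_cons = threading.Thread(target=consumer, args=(q, sink))
    t_cons.start()
    t_prod.start()
    t_prod.join()
    t_cons.join()
    return sink  # "closing" the log would happen here, after both loops end

logged = run()
print(len(logged))  # 1000
```

Because the producer never waits on the write, a slow write only makes the queue grow temporarily instead of delaying the next acquisition.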

08-26-2015 02:29 PM - edited 08-26-2015 02:32 PM

Thank you for the suggestions. I created the producer/consumer pattern and got rid of the DAQ Assistant. I completely understand I should've done this a long time ago.

However, strangely enough, the NaN values are still there. Every second, for every channel, there is an empty value, as demonstrated in the screenshot above. Within the same data storing session (I don't know how to explain this more clearly), this occurrence is always on the same millisecond of each second. I have found I can ignore the gaps by setting the "skip data breaks" option to true when reading, but I'm still curious why this happens.

About the constant opening and closing of the file: there is a different file (or rather, trace) for every channel. For one, I would have to open them all separately and have separate write operations instead of just one FOR loop. If you think that's a big reliability/performance improvement, I could do that, since it's only 5 channels and it will never be more. The second reason is that I don't know whether you can read from Citadel while the trace is open somewhere else, which could cause problems.

This is how my write VI is looking now:

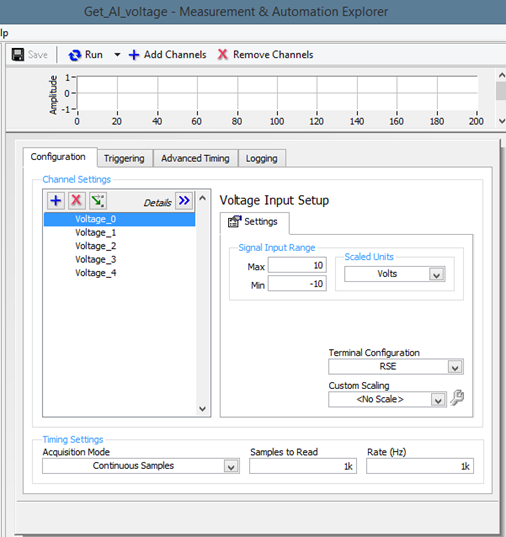

And this is what the used task looks like:

08-27-2015 04:22 AM

I've been watching this thread for a while but haven't replied yet.

Citadel is a database engine, NOT a file. When you write to the Citadel database, you're actually communicating with the Citadel process running on the PC. A few people seem to think that you're writing to files directly, which isn't true. In any case, it's always more efficient to do your open/close (of files, resources, connections) outside of loops (unless you have a specific reason for deliberately taking that performance hit, e.g. to free up the resource for use elsewhere).

Yes, once the data is in the Citadel database you can read it without interfering with your writes. I haven't actually tried reading data back into LabVIEW, but the viewer in MAX isn't too bad and it's well supported by DIAdem for reporting/analysis.

I dabbled with Citadel a while ago as it has some nice features (designed for storing time series data, connection redundancy to an external server) but I didn't get very far with figuring out what the performance is like or how easy it is to export the data into something non-NI (e.g. Excel). The other thing that concerned me was that NI doesn't seem to advertise/push it very much which always makes me nervous (e.g. dropping support or some other reason I don't know about). It also cannot be installed as an independent component - you need (at least) a DSC Run-Time license if you want to deploy it remotely.

I suspect that the reason for your gaps might be that the millisecond rolls over while recording the data?

08-27-2015 09:45 AM

I too noticed that the Citadel database doesn't seem to get a lot of support and hasn't been updated in a long time. This is mainly why I started this thread: I really don't know what alternative would provide the same functionality with equal performance. I tried the Database Toolkit before, but constantly executing SQL queries was obviously way slower than this.

Good to know I can read from the database while writing. I'll look into finding the best way to open the traces before the While loop. Would it make sense to open them and add the references to an array? That way I can just get the correct reference while looping over the different channels. (I'm not sure if creating an array like that could actually cause performance issues instead of solving them?)
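The idea of keeping the opened references in an array can be sketched in Python as follows. This is only an analogy, not the DSC API: `TraceWriter` and the channel names are hypothetical stand-ins for an open trace reference. The point it shows is that each reference is opened exactly once, indexed inside the loop, and closed after the loop.

```python
class TraceWriter:
    """Hypothetical stand-in for an open trace reference."""

    open_count = 0  # counts how many times a trace was opened

    def __init__(self, name):
        TraceWriter.open_count += 1
        self.name = name
        self.values = []
        self.closed = False

    def write(self, timestamp, value):
        self.values.append((timestamp, value))

    def close(self):
        self.closed = True

channels = ["ai0", "ai1", "ai2", "ai3", "ai4"]  # assumed channel names
writers = [TraceWriter(name) for name in channels]  # open once, before the loop
try:
    for tick in range(1000):  # stand-in for the acquisition/logging loop
        for index, writer in enumerate(writers):
            writer.write(tick, float(index))  # look up the reference by index
finally:
    for writer in writers:  # close once, after the loop
        writer.close()

print(TraceWriter.open_count)  # 5
```

Indexing a small array of five references on each iteration costs very little compared with opening and closing a connection every time.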

I agree with your millisecond rollover theory; I didn't really know what to call it. I assume there's not much I can do about that in a non-RT environment? The "skip data breaks" option seems to be an easy workaround, so I guess I'll just have to leave it at that. I'll be sending the data to a website (using a LabVIEW web service), and the empty values showed up in the JSON as "NaN", which in turn throws an error when processing the JSON on the website.
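The JSON error comes from the fact that NaN is not a valid JSON value, so strict parsers reject it. Besides skipping data breaks on read, another option is to replace NaN with null before serializing. The Python sketch below shows the idea on made-up sample values; the channel name and the helper function are hypothetical, and the same substitution would have to be done in LabVIEW before building the JSON string.

```python
import json
import math

def nan_to_none(values):
    """Replace NaN entries with None so they serialize as JSON null."""
    return [None if isinstance(v, float) and math.isnan(v) else v for v in values]

samples = [0.12, float("nan"), 0.15]  # made-up data with one gap

# allow_nan=False makes json.dumps raise ValueError if any NaN slips through.
payload = json.dumps({"ai0": nan_to_none(samples)}, allow_nan=False)
print(payload)  # {"ai0": [0.12, null, 0.15]}
```

The receiving website then sees an explicit null for the gap and can decide whether to drop or interpolate that point.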