Appending to a Datalog file

11-25-2009 01:58 PM

I am unable to append data to the datalog file in the attached example. It saves the data on the first save iteration but will not append data on subsequent save iterations. In the attached example, the save iteration is 5 seconds. In the actual application the save iteration will be hourly, because a few days' worth of data needs to be logged at a 50 ms rate.

Please advise how I can get this to append the data and how the example can be improved.

I'm using Win-XP and LV 8.6.

Thanks,

Dave

11-25-2009 08:51 PM - edited 11-25-2009 08:51 PM

I suspect a race condition between when data is written to the "logged data" array indicator, when the user event is triggered, and when the data is read from the local variable of the "logged data" indicator. If the user event fires and the logged data local variable is read before the latest iteration of upper-loop data is written to it, the event structure gets stale data. Rather than using a user event and an event structure, why not pass the data from one loop to the other using a queue?
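In LabVIEW this would be a producer/consumer pair built from the queue functions (Obtain Queue, Enqueue Element, Dequeue Element). Since LabVIEW code is graphical, here is only a rough text analogy in Python, with `queue.Queue` standing in for the LabVIEW queue; the function names, the 100-row blocks, and the `None` stop sentinel are illustrative assumptions, not taken from the attached VI. The point is that the logging loop receives each complete block through the queue instead of reading an indicator's local variable that may not have been updated yet:

```python
import queue
import threading

def acquire(q, n_blocks, rows_per_block):
    """Producer: stands in for the upper (acquisition) loop."""
    for block in range(n_blocks):
        # Each block is a complete chunk: rows of 4 integer values.
        data = [[block, r, r * 2, r * 3] for r in range(rows_per_block)]
        q.put(data)   # hand off a finished block; no shared indicator involved
    q.put(None)       # sentinel: tells the consumer to stop

def log(q, out):
    """Consumer: stands in for the lower (logging) loop."""
    while True:
        data = q.get()   # waits until a whole block is available
        if data is None:
            break
        out.extend(data) # "append" the block to the log

q = queue.Queue()
logged = []
consumer = threading.Thread(target=log, args=(q, logged))
consumer.start()
acquire(q, n_blocks=5, rows_per_block=100)
consumer.join()
print(len(logged))  # 500
```

Because `q.get()` only returns a block after it has been fully enqueued, the consumer never sees a half-updated copy of the data.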

I really don't understand your logic behind the double shift registers and the select statements in the upper loop, so I don't know in what way that could be affecting things.

Please watch your wiring: in several places you have wires running under indicators and overlapping other functions, and even the lower loop's stop terminal, which makes it difficult to follow the path of a wire. There is also a Rube Goldberg in the way you index out the first dimension size of the array by passing it through a cluster.

11-27-2009 08:58 AM

Hi Ravens Fan,

The purpose of the second shift register is to avoid what I think is the race problem. It ensures the data is available to the bottom loop before the first shift register is cleared. I'm not familiar with the Queue functions, and I clearly should be. I found the example VI titled Queue to Pass Data.vi and will learn how to use them.

In the meantime, however, I cannot get the Write to Datalog function to append data past the first saved set. Even if I replace the Logged Data variable in the lower loop with a constant that contains data, the data gets logged to the file only on the first save iteration; subsequent save iterations do not append it. Am I misunderstanding the Write to Datalog function? For example, the function help does not make clear whether the datalog file must remain open (and never be closed) to append data, or whether I can append data after the file has been closed and reopened.

The point of this project is to continually save long-term data on a periodic basis (i.e., hourly) and have another application view the logged data while this application continues to log data.

Please advise on the Write to Datalog function.

Thanks,

Dave

11-27-2009 10:12 AM - edited 11-27-2009 10:13 AM

Hi dj,

you are writing to the datalog file - it grows with time...

The problem is: you're reading only the first datablock!

You write datablocks of 100 rows and so you read datablocks of 100 rows.

As you always open the file again, you always read the first datablock... And the read count is unwired, so the default value of 1 kicks in!
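A rough Python analogy of what happens. Assumptions: each datalog record is modeled as a fixed-size block of 100 × 4 big-endian I32 values, and an in-memory `io.BytesIO` stands in for the file (a real LabVIEW datalog file has its own internal format). Every reopen puts the read position back at the start, so a read with a count of 1 returns only the first record no matter how many records have been appended:

```python
import io
import struct

ROWS, COLS = 100, 4                          # one record = 100 rows x 4 values
REC = struct.Struct(f">{ROWS * COLS}i")      # assumed layout: big-endian I32

def write_record(f, block_id):
    f.seek(0, io.SEEK_END)                   # append at the end of the file
    f.write(REC.pack(*([block_id] * (ROWS * COLS))))

def read_records(f, count=1):
    f.seek(0)                                # a reopened file starts at position 0
    out = []
    for _ in range(count):
        raw = f.read(REC.size)
        if len(raw) < REC.size:              # stop at end of file
            break
        out.append(REC.unpack(raw))
    return out

f = io.BytesIO()
for block in range(3):
    write_record(f, block)

print(len(read_records(f)))                  # 1 -> only the first record (default count)
print(len(read_records(f, count=3)))         # 3 -> every record that was appended
```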

I would suggest:

- Use a queue to move data between loops.

- Use a different data format, like simple binary files. What happens when you save 25 rows of data but want to read 100 rows? (In other words, what happens when the data types of the write and the read differ?)
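With a flat binary file, the read size no longer has to match the write size. A minimal Python sketch, assuming each row is four big-endian I32 values with no size headers between writes (LabVIEW's binary file functions default to big-endian, but check the byte order and "prepend array or string size" inputs in your VI); the helper names are hypothetical. Data is appended in 25-row writes and read back in 100-row blocks by computing byte offsets:

```python
import io
import struct

COLS = 4
ROW = struct.Struct(f">{COLS}i")   # one row = 4 big-endian I32 values (assumed layout)

def append_rows(f, rows):
    f.seek(0, io.SEEK_END)         # always write after the existing data
    f.write(b"".join(ROW.pack(*row) for row in rows))

def read_rows(f, start_row, n_rows):
    f.seek(start_row * ROW.size)   # byte offset of the first requested row
    raw = f.read(n_rows * ROW.size)
    whole = len(raw) // ROW.size   # ignore any partial trailing row
    return [ROW.unpack_from(raw, i * ROW.size) for i in range(whole)]

f = io.BytesIO()
for chunk in range(8):             # eight writes of 25 rows each = 200 rows
    append_rows(f, [(chunk, r, 0, 0) for r in range(25)])

first_100 = read_rows(f, 0, 100)
next_100 = read_rows(f, 100, 100)
print(len(first_100), len(next_100))  # 100 100
```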

11-27-2009 07:46 PM

The attached VI is changed based on the advice given. This VI uses I32 instead of DBL or SGL and logs the data in binary format in an effort to reduce the logged file size. (The largest number that would be logged for any of the four values is 1,000,000, and all values are integers.) In the final application, the Log Loop determines when to save the data and subsequently clears the Logged Data shift register, which is the reason for the Event Case in the Main Loop.
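The choice of I32 can be checked with a little arithmetic: 1,000,000 does not fit in an I16 (maximum 32,767), so 4 bytes per value is the smallest signed integer width that works, half of the 8 bytes a DBL takes. A small Python sketch of that check (the function name is hypothetical):

```python
def smallest_signed_width(max_abs_value):
    """Byte width of the smallest two's-complement integer type that holds the value."""
    for width in (1, 2, 4, 8):                     # I8, I16, I32, I64
        if max_abs_value <= 2 ** (8 * width - 1) - 1:
            return width
    raise ValueError("value does not fit in 64 bits")

width = smallest_signed_width(1_000_000)
print(width)             # 4 -> I32, since I16 tops out at 32,767
print(4 * width, 4 * 8)  # bytes per 4-value sample, I32 vs DBL -> 16 32
```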

I appreciate the response and the opportunity to learn new features such as the Queue function and the ability to work with binary files.

The data retrieval and array manipulation code is for demonstration. Is there a more elegant or easier way to obtain the final logged data array than using the For loop in Event Case #1?

11-30-2009 12:02 PM

Hello,

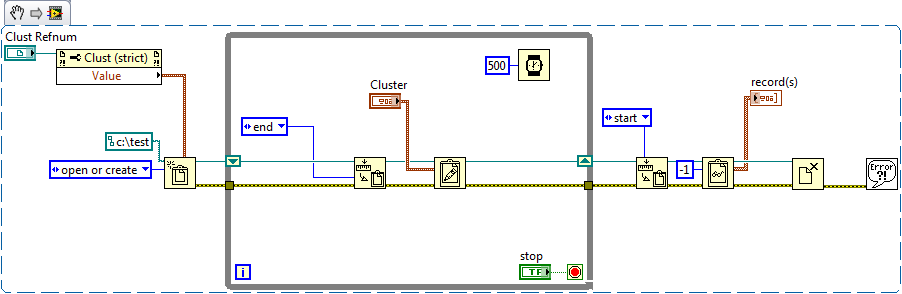

If you want to "append" data to a datalog, you have to set the file position of the datalog. In your current code, you are writing to the beginning of the file each time by default. Take a look at the example above. When the data is read at the end, it is returned as an array of clusters. You will also notice that I set the datalog position once again after the loop to make sure that the read operation reads the entire file from the beginning.
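The same idea as a Python analogy. Assumptions: a 16-byte record of four big-endian I32 values, `io.BytesIO` in place of the file, and `seek(0)` standing in for reopening the file; this is not the LabVIEW datalog API. Without moving the position to the end, each save overwrites the first record; with it, records accumulate, and rewinding before the read returns all of them:

```python
import io
import struct

REC = struct.Struct(">4i")           # one record: 4 big-endian I32 values (assumed layout)

def save(f, record, append):
    f.seek(0)                        # a freshly (re)opened file starts at position 0
    if append:
        f.seek(0, io.SEEK_END)       # set the position to the end before writing
    f.write(REC.pack(*record))

def read_all(f):
    f.seek(0)                        # rewind so the read starts at the first record
    data = f.read()
    return [REC.unpack_from(data, i) for i in range(0, len(data), REC.size)]

overwrite = io.BytesIO()
appended = io.BytesIO()
for i in range(3):
    save(overwrite, (i, i, i, i), append=False)
    save(appended, (i, i, i, i), append=True)

print(read_all(overwrite))  # [(2, 2, 2, 2)]
print(read_all(appended))   # [(0, 0, 0, 0), (1, 1, 1, 1), (2, 2, 2, 2)]
```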

-Zach

11-30-2009 12:17 PM

Thank you for your response. I'm now logging to binary files in the interest of more efficient logging. However, when retrieving the data it takes a long time to read the file. I think it's taking a long time because of the For loop that creates the new array. Is there a better way to do this?

12-01-2009 11:56 AM

Hello,

What is the size of your file when you try to read it? If you read the file in a separate VI, do you get the same behavior? The Delete From Array function performs memory allocations to handle the operations you are performing. This takes additional resources and could be causing the hang. If you don't remove the data sets with the For loop and just display the entire set, is it slow?
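To illustrate why repeatedly deleting from a large array is expensive, here is a Python analogy (the helper names are hypothetical, and this is not the code from the attached VI). Deleting the first row shifts every remaining element, so splitting N values this way costs roughly O(N²) element moves, while indexing the rows out of the unchanged array is O(N):

```python
def rows_by_deleting(flat, cols):
    """Mimics a loop that repeatedly removes the first row from the array."""
    remaining = list(flat)           # work on a copy of the data
    rows = []
    while remaining:
        rows.append(remaining[:cols])
        del remaining[:cols]         # shifts every remaining element: O(n) per pass
    return rows

def rows_by_indexing(flat, cols):
    """Builds the same rows by slicing; the source array is never resized."""
    return [list(flat[i:i + cols]) for i in range(0, len(flat), cols)]

values = list(range(12))
print(rows_by_indexing(values, 4))  # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9, 10, 11]]
```

Both functions return the same rows; only the amount of data copying differs, which matters at 1,500,000 rows.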

-Zach

12-01-2009 12:59 PM

The file size is almost 23 MB, which equates to an array of approximately 1,500,000 by 4 values. The freeze problem has occurred with a separate VI; I have not attempted this with the example VI. I have since been able to open a file that is 6 MB in size, but it takes about two minutes.
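The reported numbers are consistent, assuming four I32 values per sample and no headers in the file: each row is 16 bytes, so 1,500,000 rows take 24,000,000 bytes, or about 22.9 MiB. A small Python check (the function name is hypothetical):

```python
BYTES_PER_VALUE = 4   # I32
VALUES_PER_ROW = 4    # four logged values per sample

def rows_in_file(size_bytes):
    """Number of 4 x I32 rows in a headerless binary file of the given size."""
    row_bytes = BYTES_PER_VALUE * VALUES_PER_ROW
    if size_bytes % row_bytes:
        raise ValueError("size is not a whole number of rows")
    return size_bytes // row_bytes

size = 1_500_000 * VALUES_PER_ROW * BYTES_PER_VALUE
print(size)                     # 24000000
print(round(size / 2**20, 1))   # 22.9 (MiB)
print(rows_in_file(size))       # 1500000
```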

When I bypass the For loop, opening the file (including the 23 MB one) occurs very rapidly and without problems.

In the meantime (because it's needed soon), I prepared the application to save segmented files. In other words, it creates a new file when the current file exceeds a limit; in this case, a new file is created when there are more than 72,000 samples. The resulting files are about 1 MB in size. Opening and processing a file of this size takes about 13 seconds, which is tolerable.
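The 72,000-sample limit works out to exactly one hour at a 50 ms sample period (3600 s / 0.05 s), and at 16 bytes per sample to 1,152,000 bytes per file, which matches the roughly 1 MB files described. A minimal Python sketch of the rollover logic follows; the class name, the `log_0000.bin` naming scheme, and the big-endian I32 row layout are assumptions for illustration, and the demo uses a limit of 10 samples so the effect is visible:

```python
import os
import struct
import tempfile

ROW = struct.Struct(">4i")   # one sample: 4 big-endian I32 values (assumed layout)
MAX_SAMPLES = 72_000         # 1 hour at a 50 ms sample period

class SegmentedLog:
    """Hypothetical helper: appends samples, starting a new file when the limit is hit."""

    def __init__(self, folder, max_samples=MAX_SAMPLES):
        self.folder = folder
        self.max_samples = max_samples
        self.index = 0       # current segment number
        self.count = 0       # samples already in the current segment

    def path(self):
        return os.path.join(self.folder, f"log_{self.index:04d}.bin")

    def write(self, samples):
        i = 0
        while i < len(samples):
            if self.count >= self.max_samples:
                self.index += 1          # roll over to the next segment file
                self.count = 0
            room = self.max_samples - self.count
            chunk = samples[i:i + room]  # as many samples as fit in this segment
            with open(self.path(), "ab") as f:
                f.write(b"".join(ROW.pack(*s) for s in chunk))
            self.count += len(chunk)
            i += len(chunk)

with tempfile.TemporaryDirectory() as d:
    log = SegmentedLog(d, max_samples=10)
    log.write([(n, 0, 0, 0) for n in range(25)])
    sizes = sorted((name, os.path.getsize(os.path.join(d, name))) for name in os.listdir(d))
    print(sizes)  # [('log_0000.bin', 160), ('log_0001.bin', 160), ('log_0002.bin', 80)]
```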

12-01-2009 01:45 PM

Hi dj,

do you read the file row by row (i.e. 4 values per iteration)? That would explain the long loading time...

Read larger chunks of data, or read the whole file as a 1D array and use Reshape Array to make it a 2D array of the needed size...
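A Python analogy of that suggestion. Assumptions: the file is a flat sequence of big-endian I32 values with no headers, four values per row, and the function name is hypothetical. One read pulls in the whole file, one unpack turns it into a 1D array, and slicing by row plays the role of Reshape Array:

```python
import io
import struct

COLS = 4  # values per row (sample)

def read_all_rows(f, cols=COLS):
    """Read the whole file at once, then 'reshape' the flat I32 data into rows."""
    f.seek(0)
    data = f.read()                                # one read instead of one per row
    n = len(data) // 4                             # number of complete I32 values
    flat = struct.unpack(f">{n}i", data[:n * 4])   # 1D array of every value
    usable = n - n % cols                          # drop an incomplete trailing row
    return [flat[i:i + cols] for i in range(0, usable, cols)]

f = io.BytesIO(struct.pack(">40i", *range(40)))    # 10 rows of 4 values
rows = read_all_rows(f)
print(len(rows), rows[0], rows[-1])  # 10 (0, 1, 2, 3) (36, 37, 38, 39)
```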