64-bit LabVIEW 2015 memory access

02-22-2017 02:58 PM

So far my solution is to use the In Place Element structure (Reverse Array).

Altenbach, complex single is an excellent idea. Each complex single element holds one I/Q pair, so the array will have half as many elements and need less processing, but it still occupies the same number of bytes. I'll have to do some number crunching to set up the Cartesian plot! I think I'll go that route as time permits writing the VI.
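For readers following along in a text language, the complex-single idea can be sketched in Python. This is a minimal sketch, assuming the instrument sends interleaved (I, Q) float32 pairs in little-endian byte order with no length prefix; the helper name `unflatten_csg_le` is hypothetical, and Python's `complex` is double precision, so this mirrors the layout of a LabVIEW complex single array, not its memory footprint.

```python
import struct


def unflatten_csg_le(payload: bytes) -> list[complex]:
    """Interpret a byte string as little-endian interleaved (I, Q) float32 pairs.

    Hypothetical helper: assumes no length prefix and I before Q in each pair.
    """
    if len(payload) % 8:
        raise ValueError("payload must hold whole I/Q pairs (8 bytes each)")
    count = len(payload) // 4  # number of float32 values
    values = struct.unpack(f"<{count}f", payload)
    # Even indices are I (real part), odd indices are Q (imaginary part).
    return [complex(i, q) for i, q in zip(values[0::2], values[1::2])]


# Two I/Q pairs packed the way the analyzer is assumed to send them.
sample = struct.pack("<4f", 1.0, -2.0, 0.5, 3.0)
iq = unflatten_csg_le(sample)
print(iq)  # [(1-2j), (0.5+3j)]
```

The result has half as many elements as the flat float list, while the byte count of the input is unchanged, which is the trade-off described above.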

Rolf, your code processes the instrument data correctly. The flattened data is "native, host order", not "little endian" as I initially mentioned. Your Unflatten From String followed by Reshape Array shows 2 buffer allocations. My code, Type Cast (to single), then an In Place Element (IPE) structure with Reverse Array, then Decimate Array and finally Build Array, shows 4 buffer allocations. If I subtract 1 buffer allocation for the IPE, that leaves 3 versus your 2. So, as you say, in theory your code uses fewer buffer allocations.
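The byte-reversal trick in the Type Cast version can be checked in Python. This sketch assumes, as discussed later in the thread, that LabVIEW's Type Cast always interprets bytes as big endian: reversing the whole byte buffer, decoding it big endian, and then reversing the resulting float array gives the same values as decoding little endian directly. All function names are hypothetical, and each slice below allocates a new buffer, which loosely mirrors the extra buffer allocations being counted.

```python
import struct


def typecast_sgl(payload: bytes) -> list[float]:
    """Model of Type Cast to a SGL array: always big endian (assumption)."""
    count = len(payload) // 4
    return list(struct.unpack(f">{count}f", payload))


def reverse_then_typecast(payload: bytes) -> list[float]:
    """Reverse all bytes, cast big endian, then reverse the float order back."""
    return typecast_sgl(payload[::-1])[::-1]


def unflatten_sgl_le(payload: bytes) -> list[float]:
    """Direct little-endian decode: one step, one output buffer."""
    count = len(payload) // 4
    return list(struct.unpack(f"<{count}f", payload))


def decimate_iq(values: list[float]) -> tuple[list[float], list[float]]:
    """Split interleaved I/Q values, like Decimate 1D Array with two outputs."""
    return values[0::2], values[1::2]


data = struct.pack("<4f", 1.0, 10.0, 2.0, 20.0)
assert reverse_then_typecast(data) == unflatten_sgl_le(data)
i_vals, q_vals = decimate_iq(unflatten_sgl_le(data))
print(i_vals, q_vals)  # [1.0, 2.0] [10.0, 20.0]
```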

I ran both versions of the code and eyeballed the memory usage in the Win7 Task Manager. Your code hangs while passing the data to the WFGraph; before it hangs, the used memory climbs to 10 GB. My code plots to the WFGraph successfully. Because plotting the Unflatten Data result to the WFGraph makes LV hang, I disconnected the WFGraph for my comparison. Both versions of the code begin at 150 KB of RAM and end at 1.26 GB (no WFGraph). The difference is during execution: your Unflatten Data version uses 2.0 GB and my TypeCast version uses 2.2 GB. That is only a 200 MB difference in memory allocation, i.e. the size of the data.

But with all that said, the Unflatten Data version doesn't work well with the WFGraph plot. Does anyone have a theory why not? Maybe going from Reshape Array to Bundle causes memory problems... This VI will be used as a subVI, so the WFGraph plot won't be an immediate issue until I decide to show it on the top-level VI. The plot here was necessary to confirm that the data is correct.

Rich J

02-23-2017 04:32 AM - edited 02-23-2017 04:39 AM

@richjoh wrote:

<blockquote>Rolf, your code processes the instrument data correctly. The flattened data is "native, host order", not "little endian" as I initially mentioned.</blockquote>

You are making a fundamental conceptual mistake here. The device you are talking to is not going to magically change its endianness when you run this code on a computer that uses big endian as its preferred byte order. "Little endian" means that the string input (byte array) to the Unflatten function is in little endian, and "big endian" is analogous, but "native, host order" means to use whatever the native byte order is of the computer the LabVIEW software is running on. Granted, in the current world all desktop versions of LabVIEW run on x86, where little endian and native are equivalent, but if you ever port that code to a cRIO for whatever reason, it will behave incorrectly on one of the older PPC-based cRIO (or sbRIO) devices. So while in practice you likely won't see any problem for a very long time, it is wrong in principle to use native byte order for this Unflatten function, and you should explicitly select the byte order that your device uses.
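Python's `struct` module offers the same three choices, which makes the portability point easy to demonstrate: `<` is explicit little endian, `>` is explicit big endian, and `=` is native (host) order. A minimal sketch; the `decode_sgl` helper and the sample values are illustrative only.

```python
import struct
import sys

FORMATS = {"little": "<", "big": ">", "native": "="}


def decode_sgl(payload: bytes, byte_order: str) -> list[float]:
    """Decode a float32 array using an explicit or native byte order."""
    count = len(payload) // 4
    return list(struct.unpack(f"{FORMATS[byte_order]}{count}f", payload))


# The device always sends little endian, whatever host reads it.
device_bytes = struct.pack("<2f", 1.5, -0.25)

explicit = decode_sgl(device_bytes, "little")  # correct on every host
native = decode_sgl(device_bytes, "native")    # correct only on little-endian hosts
print(sys.byteorder, explicit, native == explicit)
```

On an x86 PC both decodes agree, which is why "native" appears to work; on a big-endian host only the explicit decode returns the original values.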

<blockquote>I ran both versions of the code and eyeballed the memory usage in the Win7 Task Manager. Your code hangs while passing the data to the WFGraph; before it hangs, the used memory climbs to 10 GB. My code plots to the WFGraph successfully. Because plotting the Unflatten Data result to the WFGraph makes LV hang, I disconnected the WFGraph for my comparison. Both versions of the code begin at 150 KB of RAM and end at 1.26 GB (no WFGraph). The difference is during execution: your Unflatten Data version uses 2.0 GB and my TypeCast version uses 2.2 GB. That is only a 200 MB difference in memory allocation, i.e. the size of the data.</blockquote>

That's one buffer's worth of data, so allocating it, filling it and copying it back does take some time. The 1.26 GB of data you end up with without the graph suggests that you have at least one intermediate control somewhere for debugging.

Every front panel control keeps a copy of the entire data passed to it, so that it can draw that data while the original data has traveled further through the diagram and has often been modified in place.

It would probably help to remove them for such performance comparisons. One or two extra buffer copies don't seem so bad when about 10 others are going on as well, but once you reduce the code to the absolute minimum necessary, that one extra buffer copy may suddenly make a real difference in runtime speed too.

<blockquote>But with all that said, the Unflatten Data version doesn't work well with the WFGraph plot. Does anyone have a theory why not? Maybe going from Reshape Array to Bundle causes memory problems... This VI will be used as a subVI, so the WFGraph plot won't be an immediate issue until I decide to show it on the top-level VI. The plot here was necessary to confirm that the data is correct.</blockquote>

That's pretty strange. Have you tried unflattening directly to complex data, as altenbach suggested, and displaying that in the graph?

Wait! Did you account for the fact that the output of the Reshape Array function is transposed relative to your original code? It doesn't look like it! For that you would have to wire the output of a Transpose Array to the graph, and wire the Reshape Array output directly to the terminal for upstream use.

I could imagine that the graph is not really prepared to deal with data that appears to contain 25 million plots of 2 data points each!
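The transposition issue can be illustrated with nested lists standing in for LabVIEW 2D arrays. This sketch assumes the waveform graph's default behavior of treating each row of a 2D array as a separate plot; `reshape_rows` and `transpose` are hypothetical helpers, not LabVIEW functions.

```python
def reshape_rows(values: list[float], cols: int) -> list[list[float]]:
    """Reshape a 1D list into rows of `cols` elements (row-major, like Reshape Array)."""
    if len(values) % cols:
        raise ValueError("length must be a multiple of cols")
    return [values[i:i + cols] for i in range(0, len(values), cols)]


def transpose(matrix: list[list[float]]) -> list[list[float]]:
    """Swap rows and columns, like Transpose 2D Array."""
    return [list(column) for column in zip(*matrix)]


interleaved = [1.0, 10.0, 2.0, 20.0, 3.0, 30.0]  # I0, Q0, I1, Q1, I2, Q2

pairs = reshape_rows(interleaved, 2)  # 3 rows x 2 columns: a graph would show 3 plots of 2 points
plots = transpose(pairs)              # 2 rows x 3 columns: 2 plots (I and Q) of 3 points each
print(len(pairs), len(plots))  # 3 2
```

With roughly 200 MB of single-precision data (about 50 million values), the untransposed shape is about 25 million rows of 2, which matches the "25 million plots" estimate.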

02-23-2017 04:13 PM - edited 02-23-2017 04:26 PM

<blockquote>but if you ever port that code to a cRIO for whatever reason, it will behave incorrectly on one of the older PPC-based cRIO (or sbRIO) devices. So while in practice you likely won't see any problem for a very long time, it is wrong in principle to use native byte order for this Unflatten function, and you should explicitly select the byte order that your device uses.</blockquote>

All the data comes from a Real Time Spectrum Analyzer over Ethernet. The Big Endian or Little Endian setting gives the wrong data; only the Network Order setting for Unflatten Data works here. From my understanding of network order, some function is used to determine the order (see "Exchanging Data Between Machines").

<blockquote>It would probably help to remove them for such performance comparisons. One or two extra buffer copies don't seem so bad when about 10 others are going on as well, but once you reduce the code to the absolute minimum necessary, that one extra buffer copy may suddenly make a real difference in runtime speed too.</blockquote>

I did exactly that, as I mentioned in my response (I ran the Unflatten Data version against the Typecast version). The WFGraph is disconnected from the data to run the comparison. In terms of calculation time I can't find a difference (actually, I did read one), except that your method fails, because the WFGraph accepts a normal 2D array while my indicator shows the transposed array. So, to answer your quote below: the Transpose goes either before the WFGraph when using Reshape, or before the indicator when using TypeCast.

<blockquote>That's pretty strange. Have you tried unflattening directly to complex data, as altenbach suggested, and displaying that in the graph? Wait! Did you account for the fact that the output of the Reshape Array function is transposed relative to your original code? It doesn't look like it! For that you would have to wire the output of a Transpose Array to the graph, and wire the Reshape Array output directly to the terminal for upstream use. I could imagine that the graph is not really prepared to deal with data that appears to contain 25 million plots of 2 data points each!</blockquote>

So I did notice that change with the Transpose when I made my changes, but I got distracted by multitasking and by the VI hanging due to the miswire. Anyhow, with the WFGraph included, your code turned out faster and used less memory. My guess is that most of the memory processing goes to the WFGraph. When I removed the WFGraph, the difference was 200 MB, as I mentioned.

Using 200 MB of data:

- Unflatten Data: 4.4 GB during execution, 3.4 GB at the end, ~25 seconds
- TypeCast Data: 5.7 GB during execution, 4.4 GB at the end, ~55 seconds

This is all a crude estimate from eyeballing the clock.

02-23-2017 05:18 PM - edited 02-23-2017 05:27 PM

@richjoh wrote:

<blockquote>All the data comes from a Real Time Spectrum Analyzer over Ethernet. The Big Endian or Little Endian setting gives the wrong data; only the Network Order setting for Unflatten Data works here. From my understanding of network order, some function is used to determine the order (see "Exchanging Data Between Machines").</blockquote>

Something sure doesn't quite match up here!

Network byte order is normally just another name for big endian, while little endian is what Intel x86/x64 systems and NI's ARM-based RIO systems use.
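In Python's `struct` module the network-order prefix `!` is defined as big endian, which matches the point above. A small check, using an arbitrary sample value, also shows what happens when little-endian device data is decoded as network order:

```python
import struct

# "!" (network order) and ">" (big endian) produce identical bytes.
assert struct.pack("!f", 1.0) == struct.pack(">f", 1.0) == b"\x3f\x80\x00\x00"

# Little-endian bytes for 1.0, as a little-endian device would send them.
le_bytes = struct.pack("<f", 1.0)
(as_network,) = struct.unpack("!f", le_bytes)
(as_little,) = struct.unpack("<f", le_bytes)
print(as_little, as_network)  # 1.0 versus a tiny denormal, not 1.0
```

So if the analyzer really sends little-endian floats, a network-order decode should produce garbage values like the second one, which is why the observation above doesn't quite add up.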

Last but not least: native byte order means that the Unflatten function assumes whatever byte order the underlying CPU architecture of the current target prefers. On a Windows desktop system this is definitely little endian.

So when you run this under LabVIEW for Windows, Mac OS X or Linux, native is equivalent to little endian; on a PPC-based cRIO (sbRIO) target, native does the same as big endian.

So if your data is in little endian, you should see no difference on a Windows system between selecting little endian and native byte order for the Unflatten function. But native would still be technically wrong, unless the flattened data comes from the same computer system that runs this software and uses that system's default byte order. Since you use VISA to read in that data, that seems highly unlikely. Your Real Time Spectrum Analyzer doesn't know what system your LabVIEW software is running on and therefore doesn't magically adapt to that system's endianness.

Also, judging from the original code you developed, the data must clearly be in little endian format. You reverse the bytes to undo the byte swapping that Type Cast does and end up with little endian data again, because that is what LabVIEW needs, unless your version of LabVIEW is a very ancient one running on Mac OS Classic, Mac OS X on PPC machines, Sun Solaris on SPARC or HP-UX. None of these has been available since LabVIEW 7 or so.

<blockquote>So I did notice that change with the Transpose when I made my changes, but I got distracted by multitasking and by the VI hanging due to the miswire. Anyhow, with the WFGraph included, your code turned out faster and used less memory. My guess is that most of the memory processing goes to the WFGraph. When I removed the WFGraph, the difference was 200 MB, as I mentioned.</blockquote>

There is also still the string control that shows the data as received from the device through VISA, judging from the image in your previous response. That also makes a 200 MB copy, for basically no good use once you have confirmed that data is coming from VISA Read at all. It contains binary data anyhow, so it isn't really readable.

02-24-2017 06:26 AM

@rolfk wrote:

<blockquote>I started to use LabVIEW in 1992 and have used the Flatten, Unflatten and Typecast functions many times in those 25 years, so you can safely assume that I know what I'm talking about.</blockquote>

Listen to this sentence and memorise it.

Listen to what Rolf is telling you, because there's a statistically insignificant chance that he's not correct.

02-24-2017 11:09 AM - edited 02-24-2017 11:11 AM

@rolfk wrote:

<blockquote>There is also still the string control that shows the data as received from the device through VISA, judging from the image in your previous response. That also makes a 200 MB copy, for basically no good use once you have confirmed that data is coming from VISA Read at all. It contains binary data anyhow, so it isn't really readable.</blockquote>

OK, no problem there with the "read buffer" indicator; it's there for me to observe the data points. As you know, once this is used as a subVI and compiled to machine code, the compiler will take care of unused indicators.

I've read that native, host order is the same as Big Endian, yes. I've also read that native, host order can either call a function, host to network (hton) or vice versa, to determine the order, or use a Byte Order Mark. This is to make your code portable (though not, I guess, to the NI RIO you mentioned). So this appears to work, and I can only give you more info describing the setup. The RSA sends Little Endian bytes over the Ethernet cable. Ethernet uses Big Endian, aka network order. The VISA receive buffer passes the data to Unflatten using network order. But is network order always Big Endian? I don't know. Network Order is the only setting that produces correct values as I write this. So for those looking to implement this: tailor (convert) the data for your platform.

02-24-2017 06:09 PM - edited 02-24-2017 06:25 PM

@richjoh wrote:

<blockquote>OK, no problem there with the "read buffer" indicator; it's there for me to observe the data points. As you know, once this is used as a subVI and compiled to machine code, the compiler will take care of unused indicators.

I've read that native, host order is the same as Big Endian, yes. I've also read that native, host order can either call a function, host to network (hton) or vice versa, to determine the order, or use a Byte Order Mark. This is to make your code portable (though not, I guess, to the NI RIO you mentioned). So this appears to work, and I can only give you more info describing the setup. The RSA sends Little Endian bytes over the Ethernet cable. Ethernet uses Big Endian, aka network order. The VISA receive buffer passes the data to Unflatten using network order. But is network order always Big Endian? I don't know. Network Order is the only setting that produces correct values as I write this. So for those looking to implement this: tailor (convert) the data for your platform.</blockquote>

No, "native, host order" is NOT the same as big endian; it is whatever the CPU running LabVIEW uses. On LabVIEW for Windows, "native, host order" is ALWAYS equivalent to little endian!

Ethernet in itself is totally endian neutral. Everything transferred over Ethernet is ultimately a byte stream and therefore has no endianness: there is only one byte per unit, and a single byte cannot be ordered in different ways. The TCP/IP protocols use big endian for the protocol headers, but you will never see those headers unless you actually sniff the network card interface with a library like libpcap (WinPcap on Windows).

The payload of the TCP/IP frames is again a byte stream, and the network stack does absolutely nothing with it in terms of endianness. For the stack it is simply a bunch of bytes, so again there is no endianness.
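The header/payload distinction can be sketched with Python's standard `socket` and `struct` modules. Header fields such as a TCP port number are converted to network (big-endian) order with `htons`, while payload bytes are passed along untouched. The port number below is an arbitrary example value, not one taken from the thread.

```python
import socket
import struct
import sys

port = 5025  # arbitrary example value, 0x13A1

# Packing a header field in network order always yields the big-endian bytes.
header_field = struct.pack("!H", port)
assert header_field == b"\x13\xa1"

# htons converts a 16-bit host-order integer to network order:
# it swaps the bytes on little-endian hosts and is a no-op on big-endian ones.
expected = 0xA113 if sys.byteorder == "little" else 0x13A1
assert socket.htons(port) == expected

# Payload bytes are opaque to the stack; they arrive exactly as they were sent.
payload = struct.pack("<f", 1.0)  # little-endian float from the sender
print(header_field.hex(), payload.hex())  # 13a1 0000803f
```

Note that `htons` converts a value the program already holds in host order; it does not inspect incoming bytes to discover what order the sender used.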

It is up to the two endpoints how those bytes are interpreted. Your RSA most likely uses a little endian CPU itself and simply dumps the IQ data into the TCP/IP payload the way it has it in memory. Therefore the data in the TCP/IP payload IS in little endian format. Right after the VISA Read, the data you have in LabVIEW is simply a byte stream again, but you now know that it contains an array of complex single precision data in little endian format. For LabVIEW to use this data properly, it must be turned into an array of (complex) single precision floating point values. That is what the Unflatten From String function does, and you tell this function what endianness the data in the string (byte stream) is supposed to have.

LabVIEW then "typecasts" the data into the desired data type, and if the byte order you specified as input to the Unflatten From String function does NOT match the native endianness of the machine LabVIEW is currently running on, it byte swaps every value as needed.

If you specify "native, host order" as the byte order input to the Unflatten From String function, the function will NEVER byte swap any data. In your case that seems to work, since the data you receive from the device is in little endian and LabVIEW for Windows needs little endian too, but it would go wrong as soon as you execute that VI on a platform that uses big endian as its native format.
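The swap rule described in the last two paragraphs can be written down as a small Python model. This is a toy model under stated assumptions, not NI's implementation: the byte order names mirror the Unflatten From String options, the data is a plain float32 array with no length prefix, and `array.array("f")` is assumed to have 4-byte items, as on common platforms. The helper name `unflatten_sgl` is hypothetical.

```python
import array
import struct
import sys


def unflatten_sgl(payload: bytes, byte_order: str) -> list[float]:
    """Toy model of Unflatten From String for a SGL array (hypothetical helper).

    byte_order is "big", "little", or "native".
    """
    if byte_order not in ("big", "little", "native"):
        raise ValueError(f"unknown byte order: {byte_order!r}")
    values = array.array("f")
    values.frombytes(payload)  # reinterpret the bytes in host order, no swapping
    # Swap only when an explicit order was requested and it differs from the host's.
    if byte_order != "native" and byte_order != sys.byteorder:
        values.byteswap()
    return values.tolist()


little = struct.pack("<2f", 1.5, -2.0)
big = struct.pack(">2f", 1.5, -2.0)

assert unflatten_sgl(little, "little") == [1.5, -2.0]  # correct on any host
assert unflatten_sgl(big, "big") == [1.5, -2.0]        # correct on any host
# "native" never swaps, so it only matches when the data happens to be in host order.
print(unflatten_sgl(little, "native") == [1.5, -2.0])
```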

LabVIEW doesn't only run on Windows! When LabVIEW was first released, the only platform it ran on used big endian CPUs. Then Windows, Sun Solaris on SPARC and HP-UX were added, but except for Windows all these platforms still used big endian. Then Linux for x86 was added to the supported platforms, while Sun Solaris and HP-UX were discontinued since there were almost no LabVIEW users on them anymore; later Apple turned to the dark side and used x86 CPUs in its machines too. So currently most platforms you can run LabVIEW on use an x86 (or x64) CPU and therefore use little endian as their native format.

But it has not always been like that, and LabVIEW is internally platform independent enough to add other CPU architectures fairly easily, should they gain enough traction for NI to see a business case in supporting them. NI only recently added ARM support for its embedded controller platform. So it is smart to code your VIs correctly from the beginning, even if you don't believe they will ever run on anything other than your current PC.

02-25-2017 05:30 PM

Not sure why all the rant; I was describing the setup here. All the information in your post is trivial knowledge: little and big endian, Ethernet, etc. We all know LV runs on Little Endian (from Mac OS, originally little endian), so now MS Win is Big Endian, nothing new here. I'm not a history buff on the timeline of LV platforms, but my work area uses MS Windows. I don't know what Unflatten is actually doing; I'm going by the correctness of the data. If Unflatten doesn't need to byte swap because LV needs Little Endian, then great. If I use Little Endian for the Unflatten, the data is wrong, which inclines me to think there is byte swapping. But hey, I can't see what Unflatten is actually doing with the data for native, host order... it's my guess.

02-25-2017 06:05 PM - edited 02-25-2017 06:19 PM

You got it all the wrong way.

Mac OS Classic ran on the Motorola 68000 and later the PowerPC, which were both big endian. The PowerPC is really a bi-endian CPU that could run either way, but Mac OS Classic and the Mac OS X versions for PowerPC used it in big endian mode. Intel x86 is a little endian CPU.

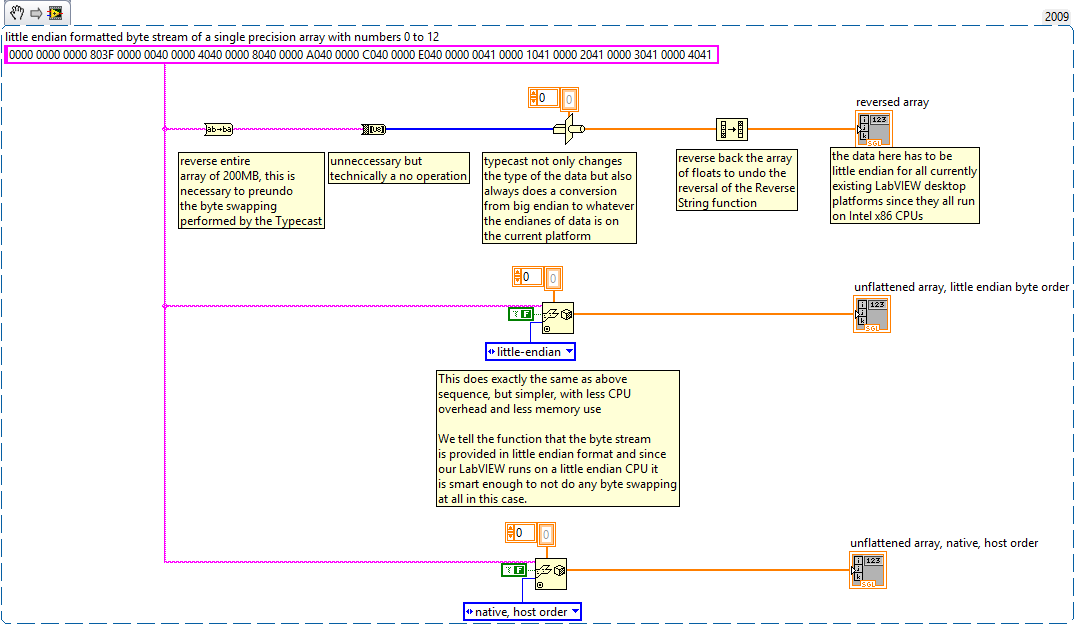

Look at this snippet, run it on your machine, and then tell me whether the little endian unflattened array looks different from the native, host order unflattened array, because that is what you have been claiming all along, and I don't believe it.

But since your data arrives as little endian, explicitly selecting little endian for the Unflatten function is the correct thing to do. Native is only correct if the data was generated on the same machine that runs this program. Otherwise you can easily get a mismatch.

02-26-2017 06:33 PM

If I can find time, I'll post sample data from a Tektronix RSA 6105 (I think that's the model; it runs WinXP), but that could be a while, since I'm off to another task without access to the instrument.

I don't think you're following that LV "native host order" calls a function to determine the "host order", the same as you would if you were writing in C and needed to determine the endianness. Whatever way it determines this, it is the correct order. Your example will work for you, but you're not getting the data from the RSA as I described.