
Maintain Mutation History as part of Enum Type Definition

This Idea was spawned from my quest for the über-migration experience when upconverting previous versions of enum data to the most recent version - in other words, providing backwards compatibility for data sources (networked clients/servers, databases, files...) that use a previous version of an enum.

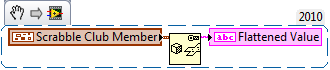

When you flatten an enum, the result is only the numeric value of the name-value pair. Once flattened, an enum is indistinguishable from a vanilla numeric, containing no additional information:

(Yes, the snippet works in 2010, even with the cameo, but it's slow because the "Favorite Scrabble Word" enum has 47k+ items)

Looking at the flattened value, the first 4 bytes (00000001) represent the value of the I32, the next two represent the enum element at index 6809 (1A99), the next two represent the decimal year in hex (0797), and the final two bytes represent the birth month enum index and the day of month, respectively. That's all there is: 10 bytes, or 20 hex nibbles, of flattened data to describe the value.
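To make the byte layout concrete, here is a small Python sketch that decodes those 10 bytes using LabVIEW's default big-endian byte order. It assumes the year is a U16 and that the month enum and day are one byte (U8) each, as the byte counts above imply. The month and day bytes (`02`, `0F`) are hypothetical, since their actual values aren't given; `decode_member` is an illustrative name, not a LabVIEW API.

```python
import struct

# Big-endian layout: I32, U16 enum index (favorite word), U16 year,
# U8 enum index (birth month), U8 day of month. Total: 10 bytes.
LAYOUT = ">iHHBB"


def decode_member(data: bytes) -> dict:
    """Decode one flattened Scrabble Club Member cluster (hypothetical layout)."""
    i32_value, word_index, year, month_index, day = struct.unpack(LAYOUT, data)
    return {
        "i32_value": i32_value,
        "word_index": word_index,    # just a number: the enum's names are gone
        "year": year,
        "month_index": month_index,  # also just a number
        "day": day,
    }


# 00000001 | 1A99 | 0797 | 02 | 0F  (last two bytes are hypothetical)
flattened = bytes.fromhex("000000011A990797020F")
member = decode_member(flattened)
print(member)
# {'i32_value': 1, 'word_index': 6809, 'year': 1943, 'month_index': 2, 'day': 15}
```

Note that nothing in the decoded result says which item index 6809 refers to; that knowledge lives only in whatever enum definition the reader happens to have at unflatten time.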

Storing this data structure as-is will be problematic if the type definition of the Scrabble Club Member ever changes. Fortunately, a typedef can be converted into a class, and the class definition will natively retain data structure mutation history, meaning flattened object data can be upconverted from previous versions. Cool!

Above, we can see that if the data structure is converted to a class, the portion underlined in red is byte-for-byte the same information as the flattened cluster, but the flattened class carries additional goodies buried in the data, such as the version of the data (underlined in blue).
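As a rough illustration of why that version tag matters, the sketch below wraps the same cluster bytes in a simplified envelope: a version number followed by the unchanged payload. This is a hypothetical format for illustration only (a single big-endian U32 version); it is NOT LabVIEW's actual flattened-class layout, which contains more than this. The function names are made up.

```python
import struct


def flatten_with_version(version: int, payload: bytes) -> bytes:
    """Prepend a big-endian U32 version number to the payload (hypothetical envelope)."""
    return struct.pack(">I", version) + payload


def read_version(data: bytes):
    """Split an envelope back into (version, payload)."""
    (version,) = struct.unpack_from(">I", data, 0)
    return version, data[4:]


# Same 10 bytes as the flattened cluster (last two bytes hypothetical).
cluster = bytes.fromhex("000000011A990797020F")

blob = flatten_with_version(3, cluster)
version, payload = read_version(blob)
# The payload is byte-for-byte identical to the cluster; the reader now
# also knows which version of the definition produced it.
```

With the version in hand, a reader can pick the right upgrade path instead of guessing, which is exactly the information a bare flattened enum lacks.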

From Preserving Class Data:

"LabVIEW, as a graphical programming environment, has an advantage over other programming languages. When you edit the class, LabVIEW records the edits that you make. LabVIEW is context aware as you change inheritance, rename classes, and modify private data clusters. This allows LabVIEW to create the mutation routine for you. LabVIEW records the version number of the data as part of the flattened data, so that when LabVIEW unflattens the data, LabVIEW knows how to mutate it into the current version of the class."

But what if you mutate one of the enums by adding, removing, rearranging, or renaming items? Now you're in a pickle, because enums currently do not store mutation history as part of their definition.
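A minimal Python sketch of the pickle, using a hypothetical `Color` enum (not from the original post) whose v2 simply swaps two items. Because only the index is flattened, data written under v1 and read back under v2 silently turns into a different item, with no error to warn you.

```python
# Hypothetical enum: v2 rearranges two items of v1.
COLOR_V1 = ["Red", "Green", "Blue"]
COLOR_V2 = ["Red", "Blue", "Green"]

# Flattening under v1 keeps only the index of "Blue"...
stored = COLOR_V1.index("Blue")

# ...so unflattening under v2 maps that same index to a different name.
restored = COLOR_V2[stored]
print(stored, restored)  # 2 Green
```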

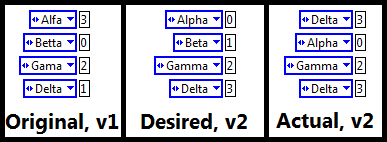

Consider the simple enum GreekLetters, part of a project developed by So-So Engineering, Inc. The SSEI developers launch the code into production with version 1 of the enum (the So-So engineers aren't too good at Greek, but so-so engineering brings home the bacon at SSEI), and subsequent releases revise it:

- v1: { Alfa, Betta, Hero, Delta }

- v2: { Alpha, Beta, Gyro, Delta }

- v3: { Alpha, Beta, Gyro, Delta, Gamma }

- v4: { Alpha, Beta, Delta, Gamma }

- v5: { Alpha, Beta, Gamma, Delta }
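One way to picture the mutation history this Idea asks for is sketched below in Python. It is a hypothetical design, not an existing LabVIEW feature: each item gets a hidden, stable ID that survives renames and reordering, and every version lists its items as `(stable_id, name)` in index order. The sketch assumes Alfa→Alpha, Betta→Beta, and Hero→Gyro were renames of the same items; `HISTORY` and `upconvert` are illustrative names.

```python
# Hypothetical mutation history for GreekLetters: (stable_id, display_name)
# per item, listed in index order for each version.
HISTORY = {
    1: [(0, "Alfa"), (1, "Betta"), (2, "Hero"), (3, "Delta")],
    2: [(0, "Alpha"), (1, "Beta"), (2, "Gyro"), (3, "Delta")],            # renames
    3: [(0, "Alpha"), (1, "Beta"), (2, "Gyro"), (3, "Delta"), (4, "Gamma")],  # add
    4: [(0, "Alpha"), (1, "Beta"), (3, "Delta"), (4, "Gamma")],           # remove Gyro
    5: [(0, "Alpha"), (1, "Beta"), (4, "Gamma"), (3, "Delta")],           # reorder
}
CURRENT = max(HISTORY)


def upconvert(version: int, index: int):
    """Map an index flattened under `version` to (index, name) in the current
    version, or None if that item has since been removed."""
    stable_id, _ = HISTORY[version][index]
    for new_index, (sid, name) in enumerate(HISTORY[CURRENT]):
        if sid == stable_id:
            return new_index, name
    return None  # item no longer exists; caller must decide what to do


print(upconvert(1, 0))  # (0, 'Alpha')  renamed, same position
print(upconvert(4, 2))  # (3, 'Delta')  moved by the v5 reorder
print(upconvert(1, 2))  # None          Hero became Gyro, then was removed
```

The stable ID plays the role that the recorded edit history plays for classes: it lets a reader follow an item across renames and moves instead of trusting a raw index. How a real implementation should handle removed items (error, default value, or user-supplied mapping) is left open here.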
