Build Custom Ethernet Driver to Time Sync Multiple DAQ Chassis, Without 1588, or LabVIEW TimeSync

Problem:

- Many applications need multiple DAQ chassis synchronized across hundreds of meters. Ethernet is usually not used for this because its timing is non-deterministic.

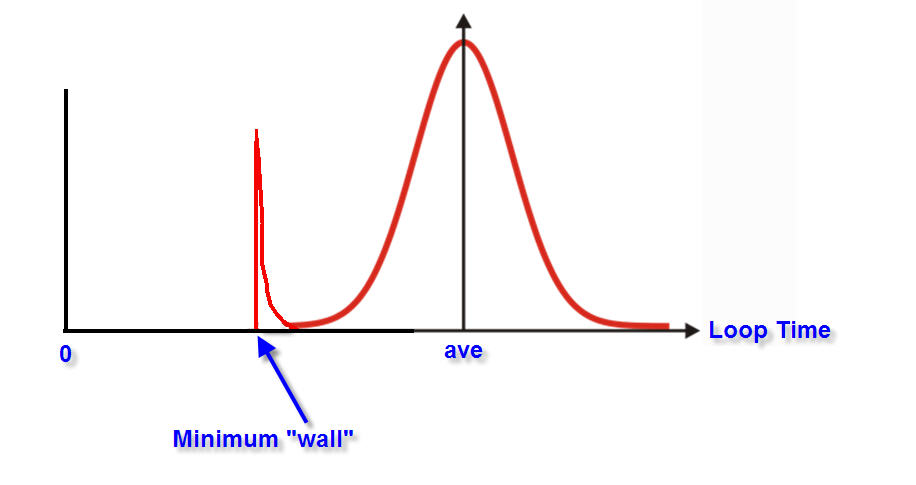

- While NI's TimeSync uses special hardware (and IEEE 1588), it seems NI could build time syncing into the Ethernet drivers themselves, with no additional hardware modules, on any platform (cDAQ, cRIO, PXIe, etc.). The customized NI-Ethernet driver would do the master-slave timing for you; it would be built into the platform. The key may be to use the lower boundary of the histogram of loop times: not the average loop time, but the bounded minimum as a special loop-time statistic. See the image at the bottom.

- Ethernet time to send a message and receive an answer is not deterministic. But, if all the Ethernet chassis are on a dedicated subnet with no other traffic, then there should be some deterministic MINIMUM time for one chassis to send a packet to another chassis.

Possible solution. Configuration: Suppose you had 5 Ethernet cDAQ 8-slot chassis. Start off by making a simple configuration work first, then extrapolate to more complicated network configurations. So put all the chassis on the same subnet, preferably a dedicated one. Each cDAQ is hundreds of feet from the others. You want to sample data at 1000 samples per second on all chassis and either lock all the sample clocks, or adjust the clocks on the fly, or know how much the sample clocks differ and adjust the times after the data is transferred.

- LabVIEW tells each slave chassis that it is to be a slave to a particular master cDAQ chassis (gives the IP address and MAC address).

- LabVIEW tells one of the cDAQ chassis to be the master and it gives the IP address (and MAC address) of all the slaves to that master.

- The local Ethernet driver on the chassis then handles all further syncing without any more intervention from LabVIEW. This avoids Windows’ lack of determinism.

- The master chassis sends an Ethernet packet to each slave (one at a time, not broadcast). The slave's Ethernet driver stores the small packet (with a time stamp of when received) and immediately sends a response packet that includes an index to the packet received (and the timestamp when the slave received it). The master stores the response packet and immediately sends a response to the slave response. This last message back to the slave may not be necessary.

- After 1000 such exchanges, the local Ethernet driver on each cDAQ has stored all 1000 loop times and their associated timestamps.

- Now each master-slave pair has timestamps of the other's clock, offset by the Ethernet delay. But this Ethernet delay is not constant (it is non-deterministic). If it were constant, syncing would be easy. However:

- One characteristic of the loop time should be deterministic (very repeatable). On a local subnet the minimum loop time should be very consistent. After these loop messages and timestamps are sent 1000 times, the minimum time should be very repeatable. Example: Suppose we only want 10 us timing (one hundredth of the 1 ms sample period at 1000 samples per second). After sending 1000 timestamped loop messages, we find that the minimum loop time falls between 875 us and 885 us. We have 127 loop times that fall into this minimum range (like the bottom “bucket” in a histogram plot). If we were to plot the time distribution, we would notice an obvious WALL at the minimum times. We would not have a Gaussian distribution. This 2nd peak in the distribution at the minimum would be another good indication that this lower value is deterministic.

- Now the master and slave chassis communicate to make sure they agree on which message loops had the minimum loop time. The loops that agree are the ones used to determine the timestamp differences between the master and the slaves. The master then sends each slave the offset to use to get the clocks synchronized to within the 10 us target.
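The steps above can be sketched roughly as follows. This is only an illustration, not NI driver code: the `Exchange` record, its field names, and `estimate_offset_us` are hypothetical, and it assumes the slave replies immediately and that the fastest loops have about the same delay in each direction.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Exchange:
    """One master -> slave -> master loop (hypothetical record layout)."""
    t_send_master_us: float  # master clock when the packet left
    t_recv_slave_us: float   # slave clock when the packet arrived
    t_recv_master_us: float  # master clock when the reply arrived

    @property
    def loop_time_us(self) -> float:
        return self.t_recv_master_us - self.t_send_master_us

    @property
    def offset_us(self) -> float:
        # Assumption: symmetric path, so the slave saw the packet at the
        # midpoint of the loop as measured on the master clock.
        midpoint = (self.t_send_master_us + self.t_recv_master_us) / 2
        return self.t_recv_slave_us - midpoint


def estimate_offset_us(exchanges, window_us=10.0):
    """Average the offset over loops within window_us of the fastest loop.

    Returns (slave-minus-master offset in us, number of loops used).
    """
    floor = min(e.loop_time_us for e in exchanges)
    fastest = [e for e in exchanges if e.loop_time_us - floor <= window_us]
    return mean(e.offset_us for e in fastest), len(fastest)


# Synthetic data: true offset 5000 us, 440 us each way, one delayed loop.
exchanges = [
    Exchange(0.0, 5440.0, 880.0),
    Exchange(1000.0, 6740.0, 2180.0),  # queued for 300 us, gets discarded
    Exchange(2000.0, 7440.0, 2880.0),
]
offset_us, used = estimate_offset_us(exchanges)
```

Only the loops at the "wall" contribute to the offset, so occasional slow packets do not pull the estimate the way an average over all 1000 loops would.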

This continues to go on (in the background) to detect clock drift. Obviously after the data acquisition starts the network traffic will increase, and that will cause the number of minimum packet loop times to be less than 127 out of 1000. But we should be able to determine a minimum number of minimums that will give reliable results, especially since we know beforehand the amount of traffic we will be adding to the network once the data acquisition starts. We may find out that an Ethernet bus utilization of less than 25% will give reliable results. Reliable because we consistently get loop times that are never less than some minimum value (within 10 us).
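A rough sketch of the background drift check, under assumptions of my own: each sync round is summarized as a tuple of (time in seconds, estimated offset in us, number of minimum-time loops), and `min_count` is a placeholder threshold that would have to be found by experiment. The function name `estimate_drift` is hypothetical.

```python
def estimate_drift(rounds, min_count=20):
    """Least-squares line through offset vs. time for trustworthy rounds.

    rounds: iterable of (time_s, offset_us, n_min_loops).
    min_count: assumed reliability threshold, not a measured value.
    Returns (drift in us per second, offset in us at time zero).
    """
    good = [(t, o) for t, o, n in rounds if n >= min_count]
    if len(good) < 2:
        raise ValueError("need at least two reliable rounds")
    mean_t = sum(t for t, _ in good) / len(good)
    mean_o = sum(o for _, o in good) / len(good)
    var_t = sum((t - mean_t) ** 2 for t, _ in good)
    cov = sum((t - mean_t) * (o - mean_o) for t, o in good)
    slope = cov / var_t
    return slope, mean_o - slope * mean_t


# Synthetic rounds: the slave clock gains 0.05 us per second.
rounds = [
    (0.0, 5000.0, 127),
    (10.0, 5000.5, 90),
    (20.0, 5300.0, 3),   # too few minimum loops, ignored
    (30.0, 5001.5, 60),
]
drift_us_per_s, offset0_us = estimate_drift(rounds)
```

Rounds with too few minimum-time loops are dropped rather than averaged in, which matches the idea of requiring a minimum number of minimums before trusting a result.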

Remember, a histogram of the loop times should show a second peak at this minimum loop time. This 2nd peak is an indication of whether this idea will work or not. The “tightness” of this 2nd peak is an indication of the accuracy of the timestamp that is possible. This 2nd peak should have a much smaller standard deviation (or even a WALL at the minimum - see image). Since this standard deviation is so much smaller than the spread of the loop times overall, the minimum is a far better value than the average to use to sync the cDAQ chassis. If it is “tight” enough, then maybe additional time syncing can be done (more accuracy, timed to sample clocks, triggers, etc.).
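One way to check the "tightness" of that peak from stored loop times, as a minimal sketch. The function name `floor_peak_stats` and the 10 us bucket default are my own choices for illustration, and the sample data is made up.

```python
from statistics import pstdev


def floor_peak_stats(loop_times_us, bucket_us=10.0):
    """Compare the bucket at the minimum loop time with the full data set."""
    floor = min(loop_times_us)
    in_peak = [t for t in loop_times_us if t - floor <= bucket_us]
    return {
        "floor_us": floor,
        "count": len(in_peak),
        "fraction": len(in_peak) / len(loop_times_us),
        "peak_std_us": pstdev(in_peak),
        "overall_std_us": pstdev(loop_times_us),
    }


# Made-up loop times: four at the wall, four delayed by other traffic.
loop_times_us = [875.0, 876.0, 878.0, 880.0, 950.0, 1200.0, 1500.0, 2100.0]
stats = floor_peak_stats(loop_times_us)
```

If `peak_std_us` is much smaller than `overall_std_us` and `count` stays healthy as traffic is added, that would support using the minimum as the sync statistic.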

For example, now that the clocks are synced to within 10 us, the software-initiated start trigger could be sent as a start time, not as a start trigger. In other words, the master cDAQ Ethernet driver would get a start trigger from LabVIEW and send all the slaves a packet telling them to start sampling at a specific time (computed as 75 milliseconds from now).
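A small sketch of how the master might turn a start trigger into per-slave start times. The function `schedule_start`, the IP addresses, and the offset values are hypothetical; offsets are taken as slave clock minus master clock, in microseconds.

```python
def schedule_start(master_now_us, offsets_us, lead_time_us=75_000):
    """Pick a start time 75 ms ahead and express it on each slave's clock.

    offsets_us maps slave address -> (slave clock - master clock) in us.
    Returns (start time on master clock, {slave address: local start time}).
    """
    start_master_us = master_now_us + lead_time_us
    per_slave = {
        addr: start_master_us + offset
        for addr, offset in offsets_us.items()
    }
    return start_master_us, per_slave


# Hypothetical slaves and previously measured offsets.
offsets_us = {"10.0.0.2": 5000.0, "10.0.0.3": -120.0}
start_master_us, slave_starts_us = schedule_start(1_000_000, offsets_us)
```

The 75 ms lead time only has to exceed the worst-case packet delay to the slowest slave, since each slave waits on its own clock rather than on packet arrival.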

I mentioned parts of this idea to Chris when I called in today for support (1613269) on the cDAQ Ethernet and LabVIEW 2010 TimeSync. Chris indicated that this idea is not being done on any of your hardware platforms yet. By the way, Chris was very knowledgeable and I am impressed (as usual) with your level of support and talent of your team members.
