Signal Scaling Question

02-10-2017 04:15 PM

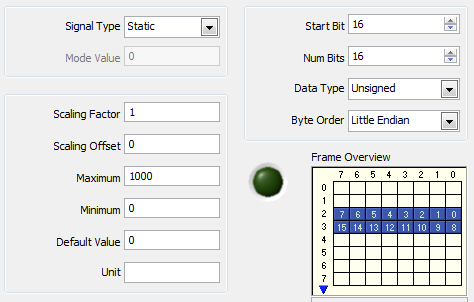

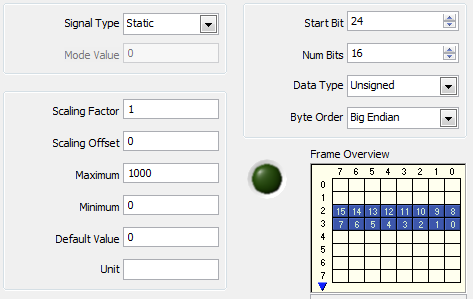

Why does the start bit behave so strangely with big endian (Motorola) byte order when converting a frame into signals? Here is an example. With a start bit of 0 and a number of bits equal to 16, the little endian visualization is clear and expected. As you increment the start bit, the bits shift farther and farther down. Here is an image with a start bit of 16 and a number of bits of 16.

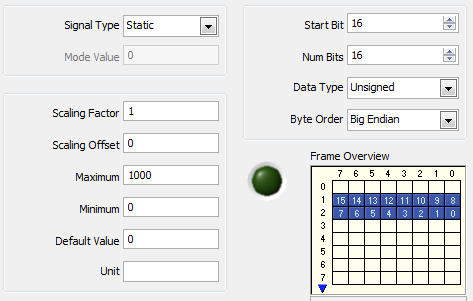

Seems fine. Now if I change the byte order to big endian, I would expect the same 16 bits to be used with their order reversed, but that isn't how it works with CAN; something else happens instead.

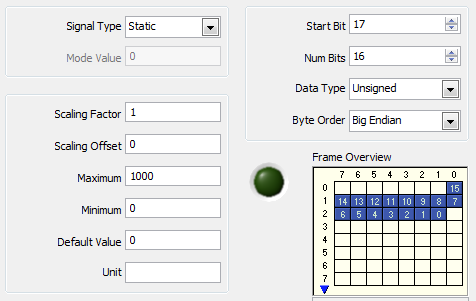

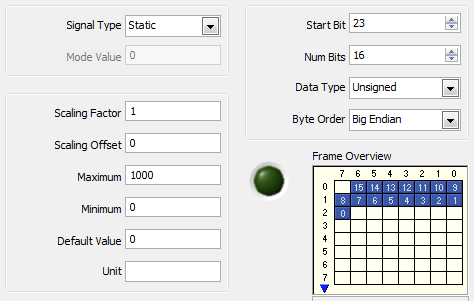

And if I increment the start bit to 17, I no longer even have a valid signal, and I actually need to keep incrementing until it is 16 before it jumps down to the next bytes to use. Here are a few more images of what I'm talking about.

I get that this is correct, but why? I ask because I am now reading CAN data using the ISO 15765 protocol and getting an array of bytes back. There, with a start bit of 0, the first bit is always the one used, regardless of the endianness and bit length, whereas with the CAN signal conversion the start bit seems to move depending on the bit length and endianness. Thanks.

Unofficial Forum Rules and Guidelines

Get going with G! - LabVIEW Wiki.

16 Part Blog on Automotive CAN bus. - Hooovahh - LabVIEW Overlord

02-13-2017 09:43 AM

The start bit represents the location of the least significant bit in the signal.
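To make that concrete, here is a minimal Python sketch of which frame bits a signal would occupy if the start bit marks its least significant bit. It assumes an 8-byte frame and a bit numbering where bit 0 is the LSB of byte 0 and bit 8 is the LSB of byte 1; the function names are made up for illustration and are not part of any NI API. A little endian signal grows toward higher byte numbers, while a big endian signal grows toward lower byte numbers, which is why a big endian signal with start bit 0 and 16 bits has nowhere to go.

```python
def signal_bits(start_bit, num_bits, big_endian, frame_bytes=8):
    """List the frame bit positions of a signal, from its LSB to its MSB.

    Assumes the start bit is the signal's LSB and that frame bits are
    numbered byte * 8 + bit_in_byte, with bit_in_byte 0 being the LSB.
    Returns None if the signal would run off either end of the frame.
    """
    positions = []
    byte, bit = divmod(start_bit, 8)
    for _ in range(num_bits):
        if byte < 0 or byte >= frame_bytes:
            return None  # signal does not fit in the frame
        positions.append(byte * 8 + bit)
        bit += 1
        if bit == 8:
            bit = 0
            # More significant bits live in the previous byte for big
            # endian and in the next byte for little endian.
            byte += -1 if big_endian else 1
    return positions


def decode(data, start_bit, num_bits, big_endian):
    """Extract an unsigned value from the payload using signal_bits()."""
    positions = signal_bits(start_bit, num_bits, big_endian, len(data))
    if positions is None:
        raise ValueError("signal does not fit in the frame")
    return sum(((data[p // 8] >> (p % 8)) & 1) << i
               for i, p in enumerate(positions))


frame = bytes([0x00, 0x12, 0x34, 0x00, 0x00, 0x00, 0x00, 0x00])
print(signal_bits(16, 16, big_endian=True))   # bits of byte 2, then byte 1
print(signal_bits(0, 16, big_endian=True))    # None: would need a byte before byte 0
print(hex(decode(frame, 16, 16, big_endian=True)))
```

Under these assumptions, a 16-bit big endian signal with start bit 16 uses bytes 1 and 2, with byte 2 holding the low byte.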

National Instruments

02-13-2017 10:44 AM

Is there any reason why ISO 15765 payloads don't obey the same scheme? Yes, the start bit is always correct, but the number of bits and bit length are inconsistent with how a DBC scales them.

Here is an example. I have a CDD file that defines data types and DIDs. One DID has, say, ID 0x0100. This DID has 12 DID objects and returns 24 bytes, 2 bytes for each object. The first object has a start bit of 0 and a bit length of 16, and its data type is Motorola. This type of signal can't exist in a DBC database but is fine in a CDD. The reason seems to be that the endianness comes into play only after the data is extracted: when reading a DID, a start bit of 0 and a bit length of 16 means grab the first two bytes, regardless of whether the value is big or little endian. With a DBC the scaling isn't the same, since you can't have a start bit of 0, a bit length of 16, and big endian.
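A rough Python sketch of the DID reading I'm describing, assuming byte-aligned objects and that the start bit simply counts from the first bit of the payload. The function name and the sample payload are made up for illustration; this is not taken from the CDD format itself. The bytes are sliced out first, and the byte order is applied only afterwards:

```python
def did_object(payload, start_bit, bit_length, big_endian):
    """Read one byte-aligned DID object from an ISO 15765 payload.

    Assumption: the start bit and bit length select a run of bytes, and
    the byte order only decides how that run is turned into a number.
    """
    if start_bit % 8 or bit_length % 8:
        raise ValueError("this sketch only handles byte-aligned objects")
    first = start_bit // 8
    raw = payload[first:first + bit_length // 8]
    return int.from_bytes(raw, "big" if big_endian else "little")


# Hypothetical 24-byte response: 12 objects of 2 bytes each.
payload = bytes([0x12, 0x34]) + bytes(22)
print(hex(did_object(payload, 0, 16, big_endian=True)))   # first two bytes, Motorola
print(hex(did_object(payload, 0, 16, big_endian=False)))  # same two bytes, Intel
```

Both calls read the same two bytes; only the resulting value differs.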

02-13-2017 03:29 PM

It may be an artifact of the FIBEX file format that the XNET DB editor uses by default. I asked around to see if I could find a specific reason why we associate the start bit with the LSB, but I didn't find anything useful.

National Instruments